AI security cameras are collecting data they don't need

A new study from Surfshark reveals that AI-powered home security cameras are gathering more information than buyers expect. The report says that these devices go far beyond routine recording. By standardizing facial recognition, they create biometric profiles that can include neighbors and passersby -- not just the people who own the cameras.

Surfshark says that many companion apps also gather personal details unrelated to security features, further raising privacy concerns for owners.

The study warns that the shift from simple motion alerts to broad biometric scanning has happened quickly. It notes that facial recognition and vehicle detection have become central features, and that this change expands the type of data collected through daily use.

As these systems learn to identify faces, animals, vehicles and specific sounds, they gather information that many people never knowingly approved.

Miguel Fornes, a cybersecurity expert at Surfshark, said the key issue is the lack of real choice. “The central risk isn’t only the capture. When people can’t meaningfully opt in or out and are not informed about where their biometric data is stored, what additional data points are being collected, and with whom it’s shared, you’ve created a privacy hazard,” he said, adding that “scanning faces or car plates of neighbours -- especially without explicit consent -- should be treated as a major privacy concern if not a breach of privacy regulations.”

AI features are now standard across major camera ecosystems. All eight of the brands in the study use AI to distinguish between people and animals. Six use facial recognition and seven detect vehicles.

Some models also include AI sound analysis, which identifies noises such as breaking glass. These capabilities are widely promoted, and Surfshark notes that they increase the volume and sensitivity of data produced inside and outside a home.

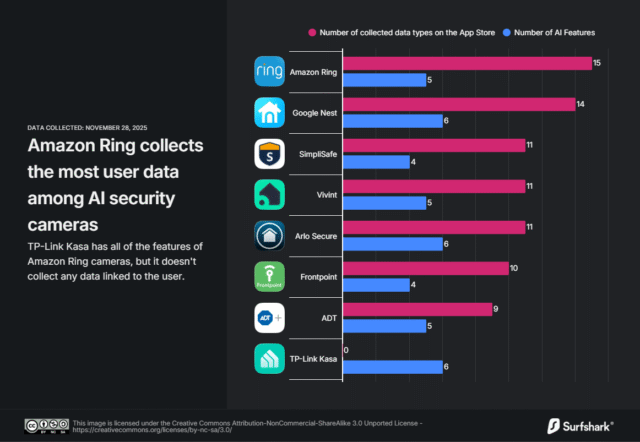

The study highlights major differences in how companies collect information through their apps. TP-Link Kasa “collects no data linked to the user” while offering the same AI functions as other brands. Amazon Ring is described as the “most data hungry” app, pulling in fifteen user-linked data types, including contact details, location, address, product interactions and purchase history.

Ten types of data sit under an “Other Purposes” category defined only as “any other purposes not listed,” and this level of vagueness makes it difficult for users to understand what their information is being used for.

Arlo “collects and shares device IDs specifically for third party advertising purposes” and gathers more data for developer advertising than any competitor. Google Nest, Vivint, SimpliSafe, ADT and Frontpoint gather smaller sets of data for similar reasons.

AI facial recognition

The study addresses regional rules as well. It notes that facial recognition is “strictly regulated in the EU and UK” under GDPR, and that this is why Google Nest’s face detection feature is not available in Europe. It is widely used in the US, Canada, Japan and Australia.

Surfshark selected the eight camera ecosystems based on their frequent appearance in industry reporting. It reviewed AI features on official sites and examined privacy statements on the Apple App Store to determine the number and purpose of data types linked to users. It also compared facial recognition rules in markets including the UK, the US, Canada and Australia.
