How cybercriminals are attacking machine learning

Machine learning (ML) is getting a lot of attention these days. Search engines that autocomplete, sophisticated Uber transportation scheduling and recommendations from social sites and online storefronts are just a few of the daily events that ML technologies make possible.

Cybersecurity is another area where ML is having a big impact and providing many benefits. For instance, ML can help security analysts shorten response times and dramatically improve accuracy when deciding if a security alert is an actual threat or just a benign anomaly. Many view ML as the primary answer to help save organizations from the severe shortage of skilled security professionals, and the best tool to protect companies from future data breaches.

However, as helpful as ML is, it is not a panacea when it comes to cybersecurity. ML still requires a fair amount of human intervention, and hackers have developed multiple methods to deceive and thwart traditional ML technologies.

Unfortunately, it’s not particularly difficult to fool ML into making a bad decision, especially in an adversarial environment. It’s one thing to have a successful ML outcome when operating in a setting where all the designers and data providers are pulling for its success. Executing a successful ML algorithm in a hostile environment full of malware that’s designed to bring it down is altogether a different situation.

Machine Learning Ground Truths

Cybercrime continues to grow dramatically in sophistication. While it’s true that older, basic hacking techniques and exploits are still widely used, leading cybercriminals quickly adopt each innovation, including ML, to do their dirty work. Many have a deep understanding of the technology, which enables them to design attacks that can evade ML-based malware and breach detection systems.

Machine learning is dependent on a baseline of ground truths. The technology uses this foundation to make all decisions, and it’s trained with specific examples that demonstrate the ground truths. It is this foundation against which new instances are compared as part of the ML-supported analysis.

For example, in a very simplified illustration, imagine an ML system designed to detect whether the animal in a particular image is a cat. Some of the ground truths identifying a cat are that it has two pointy ears, fur, a tail and whiskers. By comparing an image against these truths, the system can determine if the image is indeed that of a cat.

Figure: an image compared against the ground truth. Result: = CAT

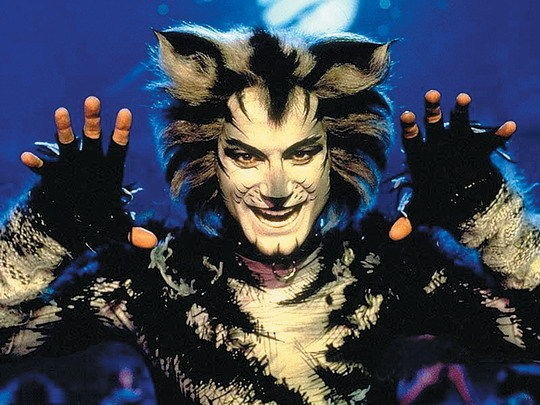
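
The cat illustration can be sketched in a few lines of Python. This is a deliberately naive toy, not how a production ML system works: the feature names and the `is_cat` helper are hypothetical, and a real model would learn its criteria from labeled examples rather than from a hard-coded set.

```python
# Hypothetical ground-truth features, taken from the cat example in the text.
CAT_GROUND_TRUTH = frozenset({"pointy_ears", "fur", "tail", "whiskers"})


def is_cat(observed_features):
    """Return True when every ground-truth feature is present in the image."""
    return CAT_GROUND_TRUTH <= set(observed_features)


# Feature sets that a (hypothetical) image analysis step might produce.
tabby = {"pointy_ears", "fur", "tail", "whiskers", "stripes"}
goldfish = {"fins", "scales", "tail"}

print(is_cat(tabby))
print(is_cat(goldfish))
```

The tabby satisfies every ground truth and is accepted; the goldfish shares only a tail and is rejected.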

Polluting the Ground Truth

Cybercriminals use three primary techniques to defeat ML-based security controls. First, criminals will attempt to pollute an ML system with content that corrupts the ground truth. If the ground truth is either incorrect or altered, the system won’t be accurate. For example, if hackers are able to loosen the definition of what characterizes a cat, the system may classify people dressed up as cats as cats.

Polluting the ground truth could mean adding to or altering the examples the ML system learns from, so that the following image becomes part of the definition of a cat. Because this image of a person has fur, whiskers, pointy ears and presumably a tail, it meets the system’s criteria for a cat, and the system incorrectly classifies it as one.

A subsequent result, therefore, could be:

= CAT

The baseline of ground truths found in ML-based malware and breach detection systems is very complex, and it’s difficult to get everything correct. For example, we took a number of benign executables and packed them using standard compression tools. We then submitted the packed executables to VirusTotal, which checked the files for malware via 50 or so different services. Although the programs were completely harmless, twenty of the malware detection systems classified them as malicious because these systems had incorrectly learned that all packed programs are dangerous.

Cybercriminals look for opportunities to influence or corrupt the ground truths that ML systems use so that something bad is identified as good.
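
To make the effect of polluted ground truth concrete, here is a minimal sketch using a toy k-nearest-neighbors classifier. Everything in it is an assumption made for illustration: the two features (height in centimeters and whisker count), the sample values, and the `knn_predict` helper. It is not the detection logic of any real product.

```python
import math
from collections import Counter


def knn_predict(training, query, k=3):
    """Label a query by majority vote among its k nearest training samples."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical features: (height_cm, whisker_count).
clean = [
    ((23, 12), "cat"), ((25, 12), "cat"), ((27, 10), "cat"),
    ((160, 0), "person"), ((170, 0), "person"), ((180, 0), "person"),
]

# The attacker slips mislabeled "person in a cat costume" samples into training.
poison = [((174, 6), "cat"), ((176, 6), "cat"), ((175, 5), "cat")]
poisoned = clean + poison

costume = (175, 6)  # a tall person wearing drawn-on whiskers

print(knn_predict(clean, costume))     # decided by the clean neighbors
print(knn_predict(poisoned, costume))  # decided by the injected neighbors
```

With clean data the costume is classified as a person. After three mislabeled samples are injected, the nearest neighbors are all poisoned and the same input is classified as a cat, while real cats are still classified correctly, which is what makes this kind of pollution hard to notice.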

Mimicry Attack

A second type of assault against ML-based security controls is a mimicry attack. This is where an attacker observes what the ML system has learned, and then modifies the malware so that it mimics a benign object. In terms of the cat analogy, the attacker creates an image of something that is not really a cat, but mimics a cat by meeting the system’s specifications of a cat, such as the image below.

A mimicry attack fools a system into concluding that the ground truth has been met.

To understand how a cybercriminal might use a mimicry attack in the real world, consider the following example. A number of security systems execute suspicious programs in a sandbox. If, during a specified timeframe, the file performs any malicious behavior, such as attempting to insert itself into the boot procedure or encrypting files, the system will classify the file as malicious. Since legitimate files will exhibit no malicious behavior during that period, the system will classify them as benign. An attacker who has observed this characteristic can perform a mimicry attack by creating a malicious program that mimics a benign one simply by delaying any malicious activity for several minutes -- long enough to exceed the test time of most sandboxes.
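
The weakness of a fixed observation window can be shown with a small simulation. This is a sketch under stated assumptions: the action names, the timelines and the `sandbox_verdict` function are hypothetical, and nothing is actually executed. Each program is represented only as a list of (seconds since start, action) pairs.

```python
# Hypothetical actions that the simulated sandbox treats as malicious.
MALICIOUS_ACTIONS = {"modify_boot_sequence", "encrypt_files"}


def sandbox_verdict(timeline, window_seconds):
    """Flag a program only if a malicious action occurs inside the window."""
    for seconds, action in timeline:
        if seconds <= window_seconds and action in MALICIOUS_ACTIONS:
            return "malicious"
    return "benign"


# Simulated behavior timelines: (seconds_since_start, action).
legitimate = [(1, "read_config"), (3, "write_log")]
eager = [(1, "read_config"), (5, "encrypt_files")]
delayed = [(1, "read_config"), (2, "sleep"), (600, "encrypt_files")]

print(sandbox_verdict(legitimate, window_seconds=120))
print(sandbox_verdict(eager, window_seconds=120))
print(sandbox_verdict(delayed, window_seconds=120))  # evades the short window
print(sandbox_verdict(delayed, window_seconds=900))  # caught by a longer one
```

The delayed sample looks identical to the legitimate one for the first two minutes, so a 120-second window labels it benign. Lengthening the window catches this particular sample, but an attacker can simply wait longer, which is why window length alone is unlikely to be a sufficient defense.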

Stealing the ML Model

A third technique used by cybercriminals to undermine ML-based controls is to steal the actual ML model. In this case, the attacker reverse-engineers the system or otherwise learns exactly how the ML algorithms work. Armed with this level of knowledge, malware authors know exactly what the security system is looking for, and how to avoid it.

While this level of attack requires a very determined and sophisticated adversary, a surprising number of tools and resources are available to assist anyone who wants to learn how to perform reverse engineering.
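
One way an outsider can learn how a model decides, without ever seeing its internals, is to probe it with chosen inputs and watch the verdicts. The sketch below assumes a drastically simplified black box that flags anything at or above a hidden score threshold; `make_black_box` and `estimate_threshold` are hypothetical names, and real models and real reverse engineering are far more involved.

```python
def make_black_box(hidden_threshold):
    """Build a toy detector whose decision threshold is hidden from callers."""
    def classify(suspicion_score):
        return "malicious" if suspicion_score >= hidden_threshold else "benign"
    return classify


def estimate_threshold(classify, low=0.0, high=1.0, queries=20):
    """Recover the hidden threshold by binary search over observed verdicts."""
    for _ in range(queries):
        mid = (low + high) / 2
        if classify(mid) == "malicious":
            high = mid  # threshold is at or below mid
        else:
            low = mid   # threshold is above mid
    return (low + high) / 2


detector = make_black_box(hidden_threshold=0.37)
estimate = estimate_threshold(detector)
print(round(estimate, 4))
```

Twenty queries narrow the estimate to within about one millionth of the hidden value. For defenders, the takeaway from this toy is that every verdict returned to an untrusted party leaks a little information about the model.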

Fighting Back -- ML Done Right

There are a number of things an enterprise can do to fight back against cybercriminals and their attempts to defeat ML-based security controls. First and foremost, it’s critical to select and deploy a system that has been specifically designed to operate in a hostile environment. To be effective in the world of cybercrime, ML tools must be attack resilient and capable of multi-classification and clustering to detect the most sophisticated evasion techniques. Migrating an ML toolset designed for image detection, marketing or social networking environments to a security application will likely end in defeat and a data breach.

Data filtering techniques should also be present to guard against pollution of the ground truths. A system that constantly revises, upgrades and evolves its ground truths can remove inaccuracies and stay up to date with emerging attacks and new trends.
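
As one possible illustration of data filtering, the sketch below screens new training submissions against a small trusted set and rejects any sample whose label disagrees with its nearest trusted neighbors. The features (height in centimeters and whisker count), the sample values and the `filter_submissions` helper are all assumptions made for this example. It is one simple approach among many, and it only works if the trusted set is itself clean.

```python
import math
from collections import Counter


def filter_submissions(trusted, submissions, k=3):
    """Split submissions by whether their label matches nearby trusted data."""
    accepted, rejected = [], []
    for features, label in submissions:
        nearest = sorted(trusted, key=lambda item: math.dist(item[0], features))[:k]
        majority = Counter(lbl for _, lbl in nearest).most_common(1)[0][0]
        if majority == label:
            accepted.append((features, label))
        else:
            rejected.append((features, label))
    return accepted, rejected


# Hypothetical vetted samples: (height_cm, whisker_count) -> label.
trusted = [
    ((23, 12), "cat"), ((25, 12), "cat"), ((27, 10), "cat"),
    ((160, 0), "person"), ((170, 0), "person"), ((180, 0), "person"),
]

submissions = [
    ((26, 11), "cat"),      # plausible cat
    ((175, 6), "cat"),      # person in a costume, mislabeled as a cat
    ((165, 0), "person"),   # plausible person
]

accepted, rejected = filter_submissions(trusted, submissions)
print(accepted)
print(rejected)
```

The mislabeled costume sample is rejected because its nearest trusted neighbors are all people, while the two plausible samples are accepted into the training set.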

It’s also vital that the ML technology incorporates learning from the behaviors of a file as it executes, and not just from the appearance of the file. Returning to our cat detection analogy -- while an attacker can create an image of a person that appears something like a cat, it’s another matter to make a person behave like a cat. Cats can easily jump higher than their body length and they can retract their claws -- humans can do neither, and that won’t change. Likewise, malicious files exhibit malicious behavior, and that won’t change. Effective ML systems must incorporate behavior into the equation. This will significantly reduce the possibility of a successful mimicry attack.
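
Staying with the cat analogy, a classifier that checks behavior as well as appearance might look like the following toy sketch. The feature names and the `classify` function are hypothetical, chosen only to mirror the examples in the text.

```python
# Hypothetical appearance and behavior criteria from the cat analogy.
CAT_APPEARANCE = frozenset({"pointy_ears", "fur", "tail", "whiskers"})
CAT_BEHAVIOR = frozenset({"retracts_claws", "jumps_above_body_length"})


def classify(appearance, behavior):
    """Require both the look and the observed behavior of a cat."""
    looks_like_cat = CAT_APPEARANCE <= set(appearance)
    acts_like_cat = CAT_BEHAVIOR <= set(behavior)
    if looks_like_cat and acts_like_cat:
        return "cat"
    if looks_like_cat:
        return "mimic"  # right appearance, wrong behavior
    return "not cat"


costume_look = {"pointy_ears", "fur", "tail", "whiskers"}

print(classify(costume_look, {"retracts_claws", "jumps_above_body_length"}))
print(classify(costume_look, {"walks_upright", "talks"}))
```

A person in a costume passes the appearance check but fails the behavior check, so the second call is reported as a mimic rather than a cat.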

ML Is an Important Weapon in the Fight Against Cybercrime

ML is changing our world, including cybersecurity. It’s true that it can’t fully operate on its own and needs an element of human intervention and oversight -- and perhaps it always will. However, it’s also clear that ML provides many benefits and is here to stay. Although sophisticated cybercriminals have devised very clever attacks against the technology, when designed and built specifically for adversarial environments and combined with other tools and skilled human oversight, ML is an effective deterrent against cybercrime.

Image Credit: Sarah Holmlund / Shutterstock

Giovanni Vigna has been researching and developing security technology for more than 20 years, working on malware analysis, web security, vulnerability assessment, and intrusion detection. He is director of the Center for Cybersecurity at UC Santa Barbara and co-Founder/CTO of malware protection provider, Lastline. Vigna is the author of more than 200 publications, including peer-reviewed papers in journals, conferences, and workshops, a book on intrusion correlation, and (as an editor) a book on mobile code security.
