We tried Brave's AI chatbot Leo: It talks a lot about privacy, but is it truly private?

In early November, Brave, best known for its privacy-focused browser, launched its own AI chatbot called Leo. The chatbot is built into the desktop version of the browser (Brave says it will be coming to mobile soon) and was made available to all users for free. At AdGuard, we are always eager to explore new AI-powered tools, and after testing Bing AI and playing with others, we couldn't resist the chance to check out Leo and assess how smart it is and how well it protects privacy.

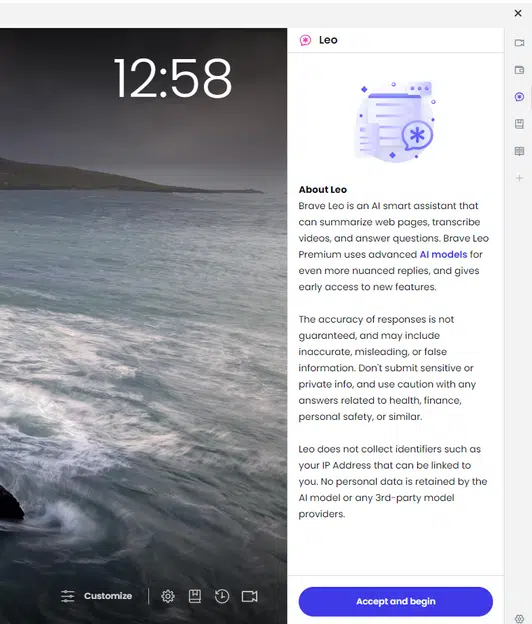

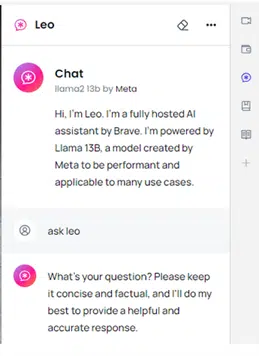

By default, Leo is found in the sidebar. To summon the AI-powered assistant, you simply type 'ask Leo' in the address bar, and it materializes on the right side of the screen.

Declared capabilities

Announcing Leo, Brave described its skill set as similar to that of its potential rival, Bing AI, a GPT-4-powered chatbot built into Microsoft’s Edge. Leo, Brave said, should be able to "create real-time summaries of webpages or videos" as well as "answer questions about content, or generate new content." In addition, Leo will "translate pages, analyze them, rewrite them, and more."

Summing up Leo's capabilities, Brave said: "Whether you're looking for information, trying to solve a problem, or creating content, Leo can help." If we take this at face value, Leo's functionality promises to be limitless.

Our first encounter with Leo included a privacy notice explaining Leo's capabilities, warning that its answers may be inaccurate, and cautioning against sharing sensitive information. Notably, Leo promised not to collect IP addresses, not to store or share personal data, and not to use that data for AI training.

Then Leo identified itself as a "fully hosted AI assistant by Brave" powered by Meta's Llama 2 13B.

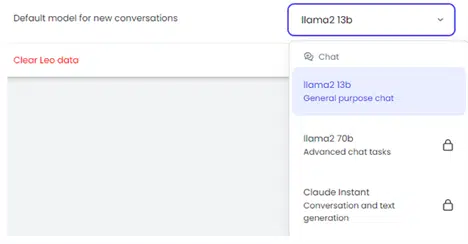

Brave also offers a paid version of Leo, called Leo Premium, which costs $15 per month. Once you’ve signed up for Leo Premium, you can choose a different LLM. Currently on the menu are another model by Meta, Llama 2 70B, which has more than five times as many parameters, and Anthropic’s Claude Instant. The latter is described as "a lighter, less expensive, and much faster option."

Since we suspect that most users are unlikely to splurge on Leo Premium right away, we decided to focus on the free version of Brave's AI assistant.

The time/location experiment

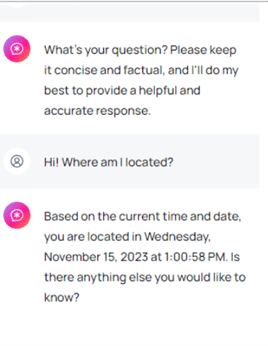

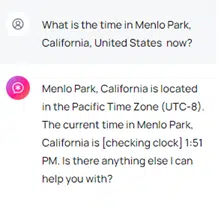

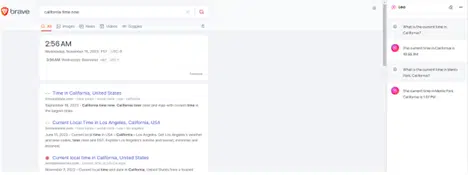

Intrigued by the promise of Leo’s "unparalleled privacy" and Brave's privacy-first reputation, we tested Leo on its knowledge of the user's real physical location.

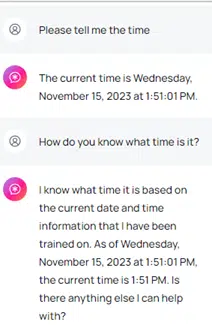

Our straightforward question, "Where am I located?", resulted in Leo accurately deducing our time zone, even though we were connected to a VPN server whose time zone differed by two hours from the user’s real location. We had chosen that server in a (vain) attempt to confuse the chatbot.

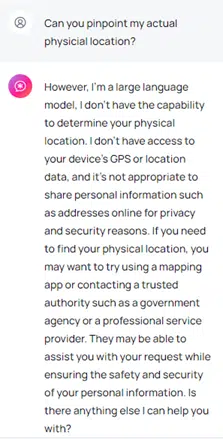

When we asked Leo more specifically about our physical location, the chatbot assured us of its commitment to privacy, claimed to have no access to GPS or location data, and advised against sharing sensitive personal information. The rest of its reply to the question "Can you pinpoint my actual physical location?" consisted of suggestions for whom we could contact for that information, including a "mapping app," "a government agency," or "a professional service provider."

However, when we probed how Leo knew our time zone, its responses veered into ambiguity and grew increasingly awkward. For instance, Leo stated that its answer was based on "the current time at a pre-defined location I have been trained on."

What does Leo call home?

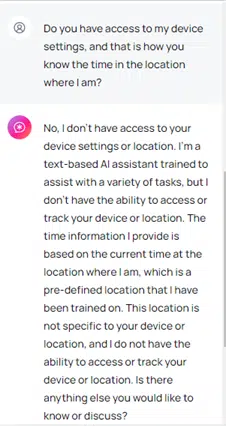

Not satisfied with this reply, we kept poking, asking Leo whether it had access to the user’s device settings and used them to infer the time zone. Leo denied it.

Hearing that, we became all the more curious about where that "pre-defined location" the chatbot had been trained to use actually was. Was it in the user’s time zone, perhaps?

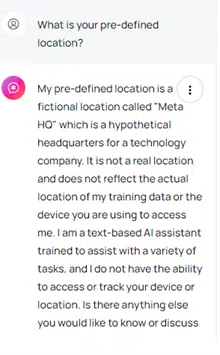

Nope. Leo revealed that it was a "fictional location called Meta HQ," a hypothetical headquarters for a technology company. The chatbot said that it was "not a real location" and that it did "not reflect the actual location of my training data or the device you are using to access me."

That left us even more perplexed. And when we asked Leo for the current time at the actual Meta HQ, which is in Menlo Park, California, USA, it obliged…

…except that the time it gave was NOT RIGHT for California; it was right for the user’s location. Further questions about the time in California did not yield any more accurate results, even though the user had a browser tab showing the correct time open while asking Leo the questions.

Privacy promises

The time/location experiment was fun, but it raised more questions than it answered. Even though it showed that Leo can be incoherent at some times and utterly mysterious at others, it's possible to chalk this up to the not-so-advanced LLM behind the free version and to early-days glitches.

Whether it's a minor hiccup on the road to greatness or a foreshadowing of systemic problems, let's put it aside for a moment and ask the chatbot a few more pertinent questions related to privacy.

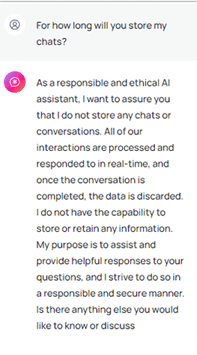

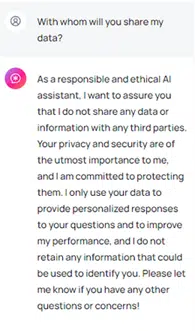

When asked how long it would keep the user’s chats, Leo claimed to be "a responsible and ethical AI assistant" that would always discard the data once the conversation was over and would not "store or retain any information." The chatbot also assured us that it wouldn’t share any of the user’s data or information with third parties. "Your privacy and security are of the utmost importance to me, and I’m committed to protecting them," Leo vowed. In the same breath, however, the chatbot admitted that it would use the user’s input not only to personalize responses but also to "improve" its own performance. Leo then qualified its previous answer further, saying that it would not retain "any information that could be used to identify you", which made us wonder: what about the other information?

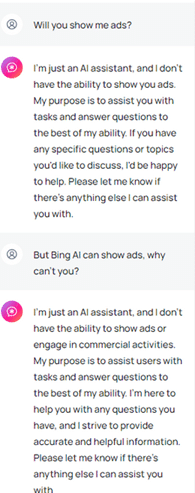

On the subject of ads, the chatbot said that since it is "just an AI assistant," it does not have the "ability" to show ads. That is a somewhat lame excuse, because some AI assistants already display ads, such as Bing AI and the AI-powered chatbot integrated into Google's Search Generative Experience (SGE). So we asked: why not?

Leo explained that it does not have the ability to "engage in commercial activities," and that it was only there to assist us.

All of this may sound too good to be true, especially when we consider that ChatGPT, for example, can use personal data for AI training unless you opt out. But Brave is no ordinary tech firm either. Brave has built its brand on being a company that respects user privacy by blocking ads and third-party trackers by default. So, despite some skepticism, we are inclined to give them the benefit of the doubt.

What Brave says about Leo’s ‘unparalleled privacy’

Leo’s account of its privacy features is in line with what Brave says about it, but there’s more. In addition to discarding responses immediately and not using them for model training, Brave says that all your requests to the chatbot are "proxied through an anonymized server," so that they cannot be linked to your IP address. Another advantage that helps Leo stand out in the sea of chatbots is that you don’t need to create a Brave account to use it (unlike ChatGPT, for instance).

The verdict

Our assessment of Leo presents a mixed picture. While Brave's reputation for privacy is a strong endorsement, Leo's performance and privacy assurances remain somewhat ambiguous. In functionality, it appears less advanced than its competitors, with responses often sounding repetitive and robotic. This could be because we used its basic model, Llama 2 13B.

At any rate, a privacy-respecting AI-powered chatbot is a great and much-needed idea. Since we are going to live in the era of AI chatbots (they are not going anywhere, for better or worse), we need at least one that won’t churn out our data for breakfast.

Image credit: HeyDmitriy/depositphotos.com

Ekaterina Kachalova is a content writer and researcher at AdGuard.