A technical overview of Cisco IoT part 5: Exploring Cisco's competition in the expanding IoT landscape

The fifth and final article in the Cisco IoT series explores how Cisco’s competitors are navigating the rapidly expanding Internet of Things (IoT) landscape. Building on the previous installment, which covered Cisco Meraki, training resources, and certification pathways, this article shifts focus to examine how other key players in the networking industry are positioning themselves to capitalize on the IoT revolution.

IoT is transforming industries, with use cases expanding across healthcare, retail, manufacturing and beyond. It’s reshaping how organizations operate, offering enhanced security, cost savings and new capabilities through advanced sensor technologies. As this sector evolves, Cisco and its competitors are racing to offer innovative solutions. But how do Cisco’s competitors stack up when it comes to IoT? This article takes a closer look at Juniper, Aruba, Arista and other vendors to assess differing IoT strategies and where each stands in comparison.

SIEM is the shortcut for implementing threat detection best practices

The recent release of “Best Practices for Event Logging and Threat Detection” by CISA and its international partners is a testament to the growing importance of effective event logging in today’s cybersecurity landscape. With the increasing sophistication and proliferation of cyber attacks, organizations must constantly adapt their security strategies to address these advanced threats. CISA’s best practices underscore how a modern SIEM (Security Information and Event Management) solution, especially one equipped with UEBA (User and Entity Behavior Analytics) capabilities, is critical for organizations trying to adopt the best practices in this domain.

A modern SIEM with UEBA can help organizations streamline their event logging policies. It automates the collection and standardization of logs across diverse environments, from cloud to on-premises systems, ensuring that relevant events are captured consistently. This aligns with CISA’s recommendation for a consistent, enterprise-wide logging policy, which enhances visibility and early detection of threats. We've seen a rise in detection and response technologies, from Cloud Detection and Response (CDR) to Extended Detection and Response (XDR), that are being positioned as alternatives to SIEM. However, when it comes to consistently capturing and using events across diverse environments, SIEM remains the preferred solution for large organizations facing these complex challenges.
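To make log "standardization" concrete, here is a minimal Python sketch of the kind of normalization step a SIEM performs. The two input formats, the field names (`eventTime`, `userName`, `eventName`) and the `NormalizedEvent` schema are hypothetical, chosen only for illustration. Real SIEM products define their own schemas and parsers.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class NormalizedEvent:
    """Hypothetical common schema that every log source is mapped into."""
    timestamp: datetime  # always timezone-aware UTC
    source: str          # "cloud" or "on_prem"
    user: str
    action: str


def from_cloud(record: dict) -> NormalizedEvent:
    # Assumed cloud format: dict with an ISO-8601 timestamp that carries a UTC offset.
    ts = datetime.fromisoformat(record["eventTime"]).astimezone(timezone.utc)
    return NormalizedEvent(ts, "cloud", record["userName"], record["eventName"])


def from_on_prem(line: str) -> NormalizedEvent:
    # Assumed on-prem format: "<epoch_seconds> <user> <action>".
    epoch, user, action = line.split(maxsplit=2)
    ts = datetime.fromtimestamp(int(epoch), tz=timezone.utc)
    return NormalizedEvent(ts, "on_prem", user, action)


def normalize(cloud_records, on_prem_lines):
    """Map every source into one schema and return a single time-ordered stream."""
    events = [from_cloud(r) for r in cloud_records]
    events += [from_on_prem(line) for line in on_prem_lines]
    return sorted(events, key=lambda e: e.timestamp)


# Example: one cloud login and one on-prem logon, five seconds apart.
cloud = [{"eventTime": "2024-09-01T12:00:05+00:00",
          "userName": "alice", "eventName": "ConsoleLogin"}]
on_prem = ["1725192000 bob logon"]  # 2024-09-01 12:00:00 UTC
events = normalize(cloud, on_prem)
print([(e.source, e.user) for e in events])  # [('on_prem', 'bob'), ('cloud', 'alice')]
```

Once every source lands in one schema with UTC timestamps, detection rules and behavioral baselines can be written once rather than per source. This is the kind of consistency described above.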

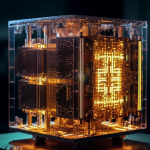

From classical to quantum: A new era in computing

Data is a business’s most critical asset, and companies today have more data than ever before. IDC projects that by 2025, the collective sum of the world’s data will reach 175 zettabytes. This data has immense potential to be leveraged for informed decision making, but across industries, organizations struggle to harness it effectively due to the limitations of traditional computing technologies. These systems often lack speed, accuracy, and energy efficiency, making it increasingly difficult for businesses to extract valuable insights. The need for more powerful computing solutions is becoming urgent as businesses grapple with the ever-growing complexity and volume of data.

Enter quantum computing, which addresses these limitations by providing a powerful alternative. Representing a significant leap forward from classical computing, quantum computing offers unprecedented speed and problem-solving capabilities. Traditional computers process information using bits, which can only be in a state of one or zero. In contrast, quantum computing uses quantum bits, or qubits, which leverage the principles of superposition and entanglement. Qubits can exist in multiple states simultaneously -- a weighted combination of one and zero -- allowing quantum computers to solve certain classes of problems much faster than classical systems.
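The difference between a bit and a qubit can be illustrated with a small classical simulation in Python. This is a sketch, not a quantum program: it represents one qubit as a pair of complex amplitudes and applies a Hadamard gate, a standard gate that turns the state |0> into an equal superposition of |0> and |1>. The function names are our own. Note that measuring the qubit still yields a plain 0 or 1; the superposition shows up in the measurement probabilities.

```python
import math

# One qubit as two complex amplitudes (alpha, beta), i.e. alpha|0> + beta|1>,
# normalized so that |alpha|^2 + |beta|^2 == 1. A classical bit has no such
# amplitudes: it is simply 0 or 1.
ZERO = (1 + 0j, 0 + 0j)  # the basis state |0>


def hadamard(state):
    """Apply the Hadamard gate: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def measurement_probabilities(state):
    """Born rule: the probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


superposed = hadamard(ZERO)
p0, p1 = measurement_probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # P(0) = 0.50, P(1) = 0.50

# Applying the gate twice makes the amplitudes interfere back into |0>.
p0_again, _ = measurement_probabilities(hadamard(superposed))
print(f"P(0) after two gates = {p0_again:.2f}")  # P(0) after two gates = 1.00
```

Simulating n qubits this way requires tracking 2**n amplitudes, which hints at why classical machines struggle to model quantum systems. It does not by itself mean that every computation runs faster on quantum hardware.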

The importance of nudge theory in email security

It is estimated that people make 35,000 decisions every day -- or, to break that number down, roughly one decision every two seconds. That’s not to say that each decision has a big impact; most are small and often instinctive, like taking a sip of coffee, turning on the work laptop, or clicking a hyperlink in an email.

In fact, it is that instinctive use of email that can lead to cyberattacks and data breaches. Email is the backbone of business communication. Despite remote and hybrid work driving the adoption of messaging apps and video conferencing, four out of five employees say email is their preferred way to communicate.

Weathering the alert storm

The more layers a business adds to its IT and cloud infrastructure, the more alerts it generates to flag issues and anomalies. As a business approaches critical mass, how can it prevent its DevOps teams from being bombarded by ‘alert storms’ as they try to distinguish real incidents from false positives?

The key is to continuously review and update an organization's monitoring strategy, specifically targeting the removal of unnecessary or unhelpful alerts. This is especially important for larger companies that generate thousands of alerts due to multiple dependencies and potential failure points. Identifying the ‘noisiest’ alerts, or those that are triggered most often, will allow teams to take preventive action to weather alert storms and reduce ‘alert fatigue’ -- a diminished ability to identify critical issues.
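Finding the ‘noisiest’ alerts is essentially a frequency count over the alert history. The Python sketch below assumes a hypothetical log format in which each alert record has a `rule` name and an `actionable` flag recording whether anyone had to act on it. A rule that fires often but is rarely actionable is a strong candidate for tuning or removal.

```python
from collections import Counter


def noisiest_alerts(alert_log, top_n=3):
    """Return (rule, times_fired, actionable_ratio) for the most frequently firing rules."""
    fired = Counter(alert["rule"] for alert in alert_log)
    actionable = Counter(alert["rule"] for alert in alert_log if alert["actionable"])
    return [
        (rule, count, actionable[rule] / count)  # Counter returns 0 for unseen keys
        for rule, count in fired.most_common(top_n)
    ]


# Hypothetical alert history.
alert_log = (
    [{"rule": "disk_usage_80pct", "actionable": False}] * 4
    + [{"rule": "cpu_spike", "actionable": False},
       {"rule": "cpu_spike", "actionable": True}]
    + [{"rule": "service_down", "actionable": True}]
)

for rule, count, ratio in noisiest_alerts(alert_log, top_n=2):
    print(f"{rule}: fired {count}x, actionable {ratio:.0%}")
```

Here `disk_usage_80pct` fires most often and never requires action, so it would be the first rule to revisit in a monitoring review.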

As the workforce trends younger, account takeover attacks are rising

Account Takeover (ATO) incidents are on the rise, with one recent study finding that 29 percent of US adults were victims of ATO attacks in the past year alone. That isn’t necessarily surprising: what we call an “Account Takeover attack” usually comes as the result of stolen credentials -- and this year’s Verizon Data Breach Investigations Report (DBIR) noted that credential theft has played a role in a whopping 31 percent of all breaches over the past 10 years. Basically, an ATO happens when a cybercriminal uses those stolen credentials to access an account that doesn’t belong to them and leverages it for any number of nefarious purposes.

Those credentials can come from anywhere. Yes, modern attackers can use deepfakes and other advanced tactics to get their hands on credentials -- but the truth is, tried-and-true methods like phishing and business email compromise (BEC) attacks are still remarkably effective. Worse still, because people tend to reuse passwords, a single set of stolen credentials can often lead to multiple compromised accounts. As always, human beings are the weakest point in any system.

RTOS vs Linux: The IoT battle extends from software to hardware

There’s certainly something happening with operating systems in the Internet of Things (IoT). Chips are getting smarter, devices are getting smaller, and speeds are getting faster. As a result, device developers are increasingly experimenting with their choice of operating system, moving away from Linux and toward real-time operating systems (RTOS).

This is an evolution on two fronts. On the software side, applications requiring low latency and deterministic responses are turning to Zephyr, FreeRTOS, and ThreadX. And now, on the hardware side, we’re seeing more chip manufacturers entering the fray with RTOS-specific hardware that rivals or surpasses the performance of entry-level Linux boards. This is a big deal because these chips optimize hardware-software integration, creating a more complete ecosystem for purpose-built RTOS solutions.

AI for social good: Highlighting positive applications of AI in addressing social challenges -- along with the potential pitfalls to avoid

Depending on who you ask, artificial intelligence could be the future of work or the harbinger of doom. The reality of AI technology falls somewhere between these two extremes. Although some uses of AI could certainly harm society, many people and businesses have seen the technology substantially improve their productivity and efficiency.

However, artificial intelligence has even more significant implications than improving the productivity of workers and businesses. Some use cases of AI have been proposed that could have profound social implications and address social challenges that are becoming more pressing today.

Data resilience and protection in the ransomware age

Data is the currency of every business today, but it is under significant threat. The speed at which companies collect and store data is driving a need to adopt multi-cloud solutions to store and protect it. At the same time, ransomware attacks are increasing in frequency and sophistication. This is supported by Rapid7’s Ransomware Radar Report 2024, which states, “The first half of 2024 has witnessed a substantial evolution in the ransomware ecosystem, underscoring significant shifts in attack methodologies, victimology, and cybercriminal tactics.”

Against this backdrop, companies must have a data resilience plan in place which incorporates four key facets: data backup, data recovery, data freedom and data security.

It’s time to treat software -- and its code -- as a critical business asset

Software-driven digital innovation is essential for competing in today's market, and the foundation of this innovation is code. However, there are widespread cracks in this foundation -- lines of bad, insecure, and poorly written code -- that manifest as tech debt, security incidents, and availability issues.

The cost of bad code is enormous, estimated at over a trillion dollars. Just as building a housing market on bad loans would be disastrous, businesses need to consider the impact of bad code on their success. The C-suite must take action to ensure that its software and its maintenance are constantly front of mind in order to run a world-class organization. Software is becoming a CEO and board-level agenda item because it has to be.

The newest AI revolution has arrived

Large language models (LLMs) and other forms of generative AI are revolutionizing the way we do business. The impact could be huge: McKinsey estimates that current gen AI technologies could eventually automate about 60-70 percent of employees’ time, facilitating productivity and revenue gains of up to $4.4 trillion. These figures are astonishing given how young gen AI is. (ChatGPT debuted just under two years ago -- and just look at how ubiquitous it is already.)

Even so, we are already approaching the next evolution in AI: agentic AI. This more advanced form of AI builds on the progress of LLMs and gen AI and will soon enable AI agents to solve even more complex, multi-step problems.

The evolution of AI voice assistants and user experience

The world of AI voice assistants has been moving at a breakneck pace, and Google's latest addition, Gemini, is shaking things up even more. As tech giants scramble to outdo each other, creating voice assistants that feel more like personal companions than simple tools, Gemini seems to be taking the lead in this race. The competition is fierce, but with Gemini Live, we're getting a taste of what the future of conversational AI might look like.

Addressing the demographic divide in AI comfort levels

In a recent Riverbed survey of 1,200 business leaders across the globe, 6 in 10 organizations (59 percent) feel positive about their AI initiatives, while only 4 percent are worried. Today, 37 percent of respondents said their companies were fully prepared to implement AI, but looking out on the horizon, a large majority (86 percent) said that their AI initiatives would be ready by 2027.

But all is not rosy. Senior business leaders believe there is a generational gap in the comfort level of using AI. When asked who they thought was MOST comfortable using AI, they said Gen Z (52 percent), followed by Millennials (39 percent), Gen X (8 percent) and Baby Boomers (1 percent).

The five steps to network observability

Let's begin with a math problem -- please solve for “X.” Network Observability = Monitoring + X.

The answer is “Context.” Network observability is monitoring plus context. Monitoring can tell the Network Operations (NetOps) team that a problem exists, but observability tells it why the problem exists. Observability gives NetOps real-time, actionable insights into the network’s behavior and performance. This makes NetOps more efficient, which means lower MTTR, better network performance, less downtime, and ultimately better outcomes for the applications and business that depend on the network. As networks grow more complex while IT budgets stay flat, observability has become increasingly important. In the past two years, I’ve heard the term used by engineers and practitioners on the ground much more often. Gartner predicted that the market for network observability tools will grow 15 percent from 2022 to 2027.

Shining a light on spyware -- how to keep high-risk individuals safe

With elections taking place across the world, a tremendous amount of attention is being paid to the threat posed by AI and digital misinformation. However, one threat that deserves more focus is spyware.

Spyware has already been used by nation states and governments during elections to surveil political opponents and journalists. For example, the government of Madagascar has been accused of using the technology to conduct widespread surveillance ahead of its elections.

© 1998-2025 BetaNews, Inc. All Rights Reserved.
