Comparing cloud vs on-premise? Six hidden costs people always forget about

To cloud or not to cloud? It's a question more and more of my clients are asking, and the move to the cloud is undoubtedly one of the biggest trends in the IT industry right now. SaaS, PaaS, IaaS, and soon to be DaaS -- all acronyms which represent offloading critical functions of some sort to the cloud or into virtualized environments. All the big cloud providers are guilty of throwing fancy numbers around to make their case. But do their trumpeted cost savings really add up?

You'll have to make it to the end of this piece to find out what I think about that personally. Because in all honesty, it depends. Too many business owners I work with make the same cost comparison blunders over and over again. Most of them are so blindly focused on raw face value costs -- the "easy ones" -- that they lose focus on the bigger picture, namely their TCO (total cost of ownership).

Too many people want to compare cloud vs on-premise costs in a single dimension, which is akin to judging a book by its cover. For on-premise, they mistakenly believe that costs start and stop with how much new hardware/software is needed to put a solution into place. And for cloud, similarly, all they see is that recurring monthly service cost.

I can make some general statements about trends I've seen in my own comparisons, but by no means does this apply to 100 percent of organizations. And mind you, my company FireLogic specializes in 25 seat and under organizations, so my experience is a bit biased towards the needs of this crowd.

For example, on the average, I have yet to see a case where hosting email in-house is still a worthy endeavor from a cost or uptime perspective. The same can be said for niche LOB (line of business) single app servers. Office 365 and Windows Azure are two cloud services we are moving a lot of formerly on-premise workloads into.

But the cloud doesn't win them all. Instances that are still usually best suited for on-premise servers include large capacity file shares (50GB in size or more) or operations that would be bandwidth-prohibitive in a cloud scenario. Offices that have smaller pipes to the internet usually have to take this into account much more acutely. My suburban and urban customers usually have plentiful choices in this arena, but rural offices unfortunately don't always have this option.

Regardless of what technology you are trying to decide on a future path for, don't just follow the crowd. Take a look into the areas I am going to shed some light on, because your initial intentions may be skewed once you find out the entirety of costs entailed with staying on premise or moving to the cloud. The real picture of what's cheaper goes much deeper than the cost of a new server or a year's worth of Office 365 subscription fees.

6) Why You Should Be Factoring in Electricity Costs

I can't remember the last business owner who even blinked an eye towards getting a grasp on how much his or her on-premise servers were costing to run 24/7. I don't accuse them of being ignorant, because realistically, most of us don't think about energy as a cost tied directly to IT operations. It's that invisible commodity that just happens, and regardless of any computers being in an office, we need it anyway.

That's true. Business doesn't operate without it. But even though today's servers are more energy efficient than at any point in the past twenty years, this doesn't mean their electrical consumption should be ignored. Unlike a standard desktop or laptop, the average solid server has a mixture of multiple socketed processors, dual (or more) power supplies, multiple sets of hard drives in RAID arrays, and numerous other components that regular computers don't need, because the whole point of a server is uptime and availability. That doesn't happen without all the wonderful doodads that support such a stable system.

But with that stellar uptime comes our first hidden cost: electrical overhead. And not just the power needed to keep servers humming. All that magic spits out higher than average levels of warm air, which in turn also needs to be cooled, unless you prefer replacing your servers more often than every five years or so.

Putting a figure on your server's total energy footprint is tough to do accurately unless you have a datacenter with powerful reporting equipment. And most small business owners I consult with don't keep a Kill a Watt handy for these purposes. So in an effort to find an industry average that provides a nice baseline for discussion's sake, I found this excellent post by Teena Hammond of ZDNet that actually ran some numbers to this effect.

According to her numbers, which use an average kWh cost for energy from the US Energy Information Administration as of January 2013, she figures that an average in-house server in the USA (accounting for both direct IT power and cooling) sucks up about $731.94 per year in electricity.

While this certainly makes the case for virtualizing as much as possible if you have to keep on-premise servers, it could also sway your decision to just move your workload to the cloud. It's hard to justify keeping email or small file server needs insourced if $730+ per year per server is accurate, especially as the number of users you may have gets smaller.

If you think this point is not that serious, just ask Google or Microsoft. They manage hundreds of thousands of servers across the world at any given time. Efficiency and energy use are a do or die endeavor for them. The industry standard these days for measuring efficiency by the big boys is known as PUE (power usage effectiveness). It's as simple as taking the total energy of your facility (or office) and dividing it by the energy consumed directly by IT equipment (in this case, servers). A PUE of 1.0 would mean every watt goes to the IT equipment itself; anything above that is overhead such as cooling.
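To make the PUE ratio concrete, here is a minimal Python sketch. The function names, the closet energy readings, the 400 W server draw, and the $0.10/kWh rate are all hypothetical placeholders for illustration; they are not the inputs behind the ZDNet figure quoted above.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def annual_electricity_cost(it_watts: float, pue_ratio: float, usd_per_kwh: float) -> float:
    """Yearly cost of a 24/7 IT load, with cooling and other overhead folded in via PUE."""
    hours_per_year = 24 * 365
    it_kwh = it_watts / 1000 * hours_per_year
    return it_kwh * pue_ratio * usd_per_kwh


# Hypothetical server closet: 6,000 kWh drawn in total, 3,000 kWh of it by the servers.
closet_pue = pue(6000, 3000)
print(f"PUE: {closet_pue:.2f}")

# Hypothetical 400 W server at that PUE and an assumed $0.10 per kWh.
print(f"Annual cost: ${annual_electricity_cost(400, closet_pue, 0.10):,.2f}")
```

Plug in your own meter readings and utility rate; the point is only that cooling overhead multiplies whatever the server itself draws.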

Microsoft's newest datacenters have PUEs ranging from 1.13 to 1.2, and Google also does a fine job with a PUE of about 1.14. Keep in mind that these entities have large budgets solely dedicated to energy conservation efforts. The average small business throwing servers into a back closet will likely never give this a second thought. That is reasonable given the circumstances, but again, it accentuates the notion that we should be decreasing server footprint -- potentially entirely -- if the numbers add up. I wouldn't want to see how bad the PUE would be for the average SMB client I support.

Of course your own needs may represent a different story. If your office is in a large building with a shared data closet, as is common at places like the Sears Tower in Chicago where we have some customers, then you may be able to share some of these direct electrical costs. But for most smaller organizations that are on the hook for all the electricity they use, energy needs should be on the table when discussing a potential move to the cloud.

5) Bandwidth Can Be Your Best or Worst Friend

The cloud can bring a lot of potential savings to the table, but just like energy consumption is the sore thumb of keeping servers in-house, cloud migrations can slap us with a nasty realization in another area: that we may not have enough bandwidth.

When your servers are in-house, your only limit is your internal network's infrastructure: switches and cabling that are usually more than sufficient to serve bandwidth hungry applications inside office walls. Cloud scenarios are getting smarter with how they leverage bandwidth these days, but there is no getting away from the fact that offloading hefty workloads to the cloud will call for a bigger pipe.

Cloud email like Office 365 gets around this in two main ways. For one, no one ever reads through the entire contents of their mailbox in a single sitting. Even massive searches across a 365 mailbox are usually handled server-side, and only the emails that actually get opened are downloaded in real time. Second, Outlook users on 365 almost always keep a local cache of their mailbox -- which further negates any major issues here. Depending on how many users an office has, this dynamic could change, because it becomes tougher to predict bandwidth needs when user bases start to grow.

Another prime example of a function moving to the cloud now at smaller organizations is file share needs. With the advent of services like SharePoint Online, businesses are moving to cloud file servers (including my own) for their document sharing and storage needs.

Moving a 30-40GB file share or set of shares to the cloud is an excellent example, but we need to keep in mind that without sufficient bandwidth in and out, any savings from not running an internal server could potentially be negated. If you are hitting consumption limits (as is the case with cellular services) or just purely don't have enough pipeline to go around for everyone at a given time (DSL or T1, I'm looking at you), slowness and productivity loss will start rearing their heads.

This excellent online calculator from BandwidthPool.com can give you a fairly decent idea of what you should be looking at in terms of connection speeds for your office. It may not have enough detail for some complex situations, but as a broad tool to guide your decision making, I definitely recommend it. In general, my company is usually moving offices from DSL or T1 over to business coax cable in the Chicago area, and in the case of T1 -- at a huge cost savings while gaining large increases in bandwidth.

If you're contemplating any kind of major cloud move, speak with a trusted consultant on what kind of bandwidth you should have in place to have a comfortable experience. We've been called into too many situations where owners made their own moves to the cloud and were frustrated with performance because they never took into account the internet connections they needed for a usable experience.

Any increased needs in internet connection costs should be accounted for in an objective comparison of going cloud or staying in-house. Situations that call for unreasonable amounts of bandwidth which may be cost prohibitive could sway you to keep your workload(s) in-house for the time being.

4) Outbound Bandwidth from Cloud Servers Will Cost You

I absolutely love cloud virtual servers. The amount of maintenance they need is less than half that of physical servers. Their uptime beats anything I can guarantee for on-premise systems. For my SMB customers, their TCO tends to be considerably less than a physical server box. But ... and there's always a but. They come with a hidden cost in the form of outbound bandwidth fees.

All the big players work in a similar manner. They let you move as much data as you wish into their cloud servers, but when it comes to pulling data out, it's on your dime after a certain threshold. And I can't blame them entirely. If you're moving these kinds of data sets across the pipes, you're likely saving more than enough cash by not hosting these systems onsite that you can spend a little to cover some of the bandwidth costs they are incurring. There is no such thing as a free lunch, and cloud server bandwidth is no different.

Microsoft's Azure ecosystem has a fairly generous 5GB of free outbound bandwidth included per month. For relatively small workloads -- or even scenarios where nothing but Remote Desktop is being used to work on a server in the cloud -- this limit may never be touched. The 900 pound gorilla in cloud servers, Amazon's EC2, is a bit stingier at only 1GB of free outbound bandwidth per month.

Pricing after the initial freebie data cap per month is pretty reasonable for the customer workloads I consult with. Microsoft and Amazon are neck and neck when it comes to pricing for bandwidth, with a reduced pricing scale as you move up in consumption (or volume). For example, if you wanted to move 100GB out of Azure in a month down to your office, after the first 5GB of free pipe, you would be on the hook for an extra $11.40 in bandwidth charges.

On a 1TB workload of outbound bandwidth in a month, you would owe an extra $119.40. Again, depending on your comfort zone and workload transfer levels needed by your scenario, every situation may come to a different conclusion. In general, I'm finding that smaller workloads which require little in/out transfer to the cloud are great candidates for the "server in the cloud" approach.
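The Azure figures above can be reproduced with a few lines of Python. This is a rough sketch under stated assumptions: the $0.12 per GB rate is inferred from the $11.40 charge on 95 billable GB rather than taken from a published price sheet, 1TB is treated as 1000GB, and a flat rate stands in for the tiered volume pricing that providers actually use. The function name is hypothetical.

```python
def outbound_transfer_cost(gb_out: float, free_gb: float = 5.0, usd_per_gb: float = 0.12) -> float:
    """Estimate a month's outbound bandwidth charge.

    free_gb    -- monthly free allowance (5GB for Azure per the article)
    usd_per_gb -- assumed flat rate, inferred from the article's examples
    """
    billable_gb = max(0.0, gb_out - free_gb)
    return billable_gb * usd_per_gb


for gb in (3, 100, 1000):
    print(f"{gb:>5} GB out -> ${outbound_transfer_cost(gb):.2f}")
```

The 100GB and 1000GB rows come out to $11.40 and $119.40, matching the numbers quoted above. Check your provider's current price sheet before relying on any rate here.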

And one other symptom of moving large workloads off to the cloud is the amount of time it takes to transfer data. If your business relies on moving tens (or hundreds) of gigabytes between workstations or other onsite servers at a time for daily operation, cloud hosting of your servers may not be a smart approach. At that point, you aren't limited by the fat pipes of providers like Azure (which are extremely large - better than most connections I see at organizations I support).

The limiting factor at this point becomes your office internet connection. Even a moderately priced 50Mbps pipeline may not be enough to transfer these workloads in a timely manner between endpoints. In this case, staying in-house is likely a solid bet.
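A quick back-of-the-envelope calculation shows why. The sketch below estimates how long a transfer takes over a given link; the 100GB payload and the `efficiency` factor (a stand-in for protocol overhead and other traffic sharing the line) are illustrative assumptions, and the function name is hypothetical.

```python
def transfer_hours(gigabytes: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move `gigabytes` over a link rated at `link_mbps`.

    efficiency -- fraction of the rated speed actually achieved (0 < efficiency <= 1)
    """
    if link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    megabits = gigabytes * 8 * 1000  # decimal gigabytes to megabits
    seconds = megabits / (link_mbps * efficiency)
    return seconds / 3600


# 100GB over a 50Mbps connection at full rated speed, then at an assumed 70 percent.
print(f"{transfer_hours(100, 50):.1f} hours at full speed")
print(f"{transfer_hours(100, 50, efficiency=0.7):.1f} hours at 70 percent efficiency")
```

Even in the best case that is well over four hours for 100GB, and many business connections upload far slower than they download, so run the numbers for the direction your data actually moves.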

We're in the process of setting up an entire accounting firm on an Azure-hosted Windows Server 2012 R2 machine. After running the numbers against doing it in-house or on a niche cloud VM provider like Cloud9, the customer is going to be saving hundreds of dollars every month. In this specific instance, the cloud made perfect sense. Putting their "server in the cloud" was a good business decision due to the uptime requirements they wanted, the geographic disparity of the workforce, and the fact that we wanted to enable staff and clients to use the system as a cloud file server for industry specific sharing needs.

For those interested, you can read my full review from earlier this year on Windows Azure's Virtual Machine hosting service, and why I think it's got Amazon, Rackspace, and the others beat. I touch a bit more on Azure's credibility further down in this piece.

3) The Forgotten "5 Year Rule" For On-Premise Servers

Don't try Googling the 5 year rule. It's not industry verbiage in any way, but it is something I'm coining for the purpose of this hidden cost which is almost never discussed. The 5 year rule is very simple. On the average, from my experience, organizations are replacing on-premise systems every 5 years. It may be different depending on your industry, but again, on the whole, five years is a good lifespan for a 24/7 server used in most workplaces.

For those of you pushing servers past that lifespan, this discussion also applies, but your rule may be closer to a seven or eight year one -- as bad practice as that may be. I say that with all honesty because when you stretch a server lifespan so far, you're usually taking on the risk of unexpected failure during the migration to a new system, or increasing the risk of costlier migration fees because your software has slid much further into obsolescence than it would have at a decent five year timespan.

Back to my original point, though. The 5 year rule is something that many decision makers don't take into account because they see the cost as being too far off to consider now. Yet, when looking at cloud vs on-premise, I think it's super important to consider your TCO (which I will touch on at #1 since it is the most important hidden cost). Part of that TCO entails the upgrade costs that hit every so many years when it comes time to retire old on-prem servers.

And this is where the sticker shock sets in, and where cloud services tend to justify their higher recurring costs quite nicely. Yes, while you are usually paying a slight premium to keep your needs up in someone else's datacenter, their economies of scale are offsetting what it will otherwise cost you around that nasty "5 year" mark. Even in situations where staying in house may be cheaper than going to the cloud on a monthly basis, your five year replacement/upgrade costs may be so hefty due to the size of the hardware needed or licensing entailed, that going to the cloud may still be the better long term option.

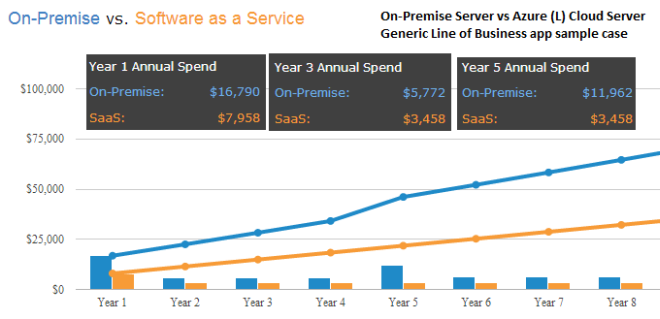

The above sample calculations I made using the TCO Calculator compare a generic line of business app server on-premise vs hosted up in a large Azure virtual machine instance. While the yearly recurring costs up to year four are fairly similar, you can see the spike that year five introduces. That extra $6190 in year five is an average aggregated cost of new hardware, software, labor fees, and related costs to replacing one physical server at end-of-life. Business owners are always oblivious to this reality in their own comparisons.
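The shape of that comparison can be sketched in a few lines of Python. Only the $6190 replacement figure comes from the calculation above; the upfront and yearly amounts below are hypothetical placeholders, and the function is a simplified stand-in, not the TCO Calculator itself.

```python
def cumulative_tco(years, upfront, annual_recurring, replacement_cost=0, replace_every=0):
    """Return the running total cost at the end of each year.

    replacement_cost is added in every year that is a multiple of replace_every
    (pass replace_every=0 for services with no hardware refresh, e.g. cloud).
    """
    totals = []
    running = upfront
    for year in range(1, years + 1):
        running += annual_recurring
        if replace_every and year % replace_every == 0:
            running += replacement_cost
        totals.append(running)
    return totals


# Hypothetical inputs, except the $6190 year-five replacement from the example above.
on_prem = cumulative_tco(8, upfront=5000, annual_recurring=3000,
                         replacement_cost=6190, replace_every=5)
cloud = cumulative_tco(8, upfront=500, annual_recurring=3600)

for year, (p, c) in enumerate(zip(on_prem, cloud), start=1):
    print(f"Year {year}: on-premise ${p:,}  cloud ${c:,}")
```

With these made-up inputs the on-premise line jumps by $9190 in year five ($3000 recurring plus the $6190 replacement), which is exactly the kind of spike a month-to-month comparison hides.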

The cloud approach always entails a subscription cost which brings higher recurring monthly fees than hosting in-house, but this is not necessarily a bad thing. You aren't getting knocked with any 5 Year Rule spikes in capital expenditures because cloud providers are constantly moving their services to better servers behind the scenes, giving you the benefits of stable hardware replacements without you ever noticing. It's one of the biggest benefits to the cloud route.

Not everyone will find that the cloud approach is better for them. For example, in a case where an old file server may be decommissioned in place of an energy efficient NAS box, the cloud may be tremendously more expensive if looking at providers like Dropbox for Business as a replacement. If your business was moving to Office 365 for email needs, then co-mingling SharePoint Online as a cloud file server would be ideal and fairly inexpensive. But it all depends on how much data you are storing, what your internet connection options are, etc. The variables are too numerous to put blanket statements on in a single piece like this.

The biggest thing I want people to take away is that you cannot base an on-premise vs cloud cost comparison solely on the initial capital outlay of a server and the recurring monthly fees of a cloud service. Your decision making will be skewed from looking at the situation through a very narrow lens, one that does not do your business long term financial justice.

2) What Does Each Hour of Downtime Cost Your Business?

The cloud sure gets a lot of publicity about its outages. Google had Gmail go down in late September to much fanfare. Amazon's virtual machine hosting on EC2 got hit with issues in the same month. Even Microsoft's cloud IaaS/PaaS ecosystem, Windows Azure, experienced its third outage of the year recently.

However, compared to on-premise systems, on the average, the public and private clouds still see much better reliability and uptime. The numbers prove it, as you'll see shortly. After dealing with organizations at all ends of the spectrum in my own consulting experience, I can definitely say this is the case.

The real question here which applies to both physical and cloud environments is: what does each hour of downtime cost your business? $500? $50,000? Or perhaps $500,000? The number is different for each organization and varies per industry, but go ahead and run your own numbers to find out. You may be surprised.

InformationWeek shed light on a nice 2011 study done by CA Technologies which tried to give us an idea of what downtime costs businesses on a broad scale. Of 200 surveyed businesses across the USA and Europe, they found that a total of $26.5 Billion USD is lost each year due to IT downtime. That's an average of about $55,000 in lost revenue for smaller enterprises, $91,000 for midsize organizations, and a whopping $1 million+ for large companies.

At the study's number of 14 average hours of downtime per year per company, and using the above $55,000 in lost revenue for smaller enterprises, it's fairly safe to say that downtime for this crowd costs roughly $3929 in lost revenue per hour.

At the large enterprise level, this comes down to about $71,429 in lost revenue for each hour of downtime. You can see how important uptime is when it comes to production level systems, and why considering downtime costs is a hidden factor which shouldn't be skimmed over.
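The per-hour figures are simply the yearly loss divided by the yearly hours of downtime. A small Python sketch, using the study's numbers quoted above (the function name is hypothetical, and the large-company figure uses the $1 million floor):

```python
def downtime_cost_per_hour(annual_loss_usd: float, downtime_hours_per_year: float) -> float:
    """Average revenue lost per hour of downtime."""
    if downtime_hours_per_year <= 0:
        raise ValueError("downtime hours must be positive")
    return annual_loss_usd / downtime_hours_per_year


STUDY_DOWNTIME_HOURS = 14  # average hours of downtime per company per year

for label, annual_loss in (("small", 55_000), ("midsize", 91_000), ("large", 1_000_000)):
    hourly = downtime_cost_per_hour(annual_loss, STUDY_DOWNTIME_HOURS)
    print(f"{label:>8}: ${hourly:,.0f} per hour")
```

This reproduces the $3,929 and $71,429 figures above and puts midsize organizations at $6,500 per hour. Swap in your own yearly loss estimate and outage history to get the number that matters for your business.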

Even organizations that aren't necessarily profit bearing, such as K-12 education and nonprofits, should place some weight on downtime. Lost productivity, disruption of communications, and inability to access critical systems are all symptoms of IT downtime which can be just as damaging as lost profits.

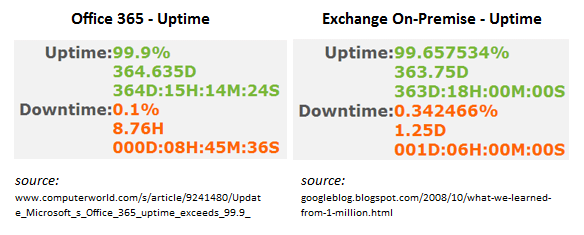

In a very common example, we can look at an easy candidate for being moved into the cloud: email and calendaring. In the last three years, I have yet to run into a situation for small and midsize organizations where going in-house for email was the better option. Not only from a cost and maintenance perspective, but also from an uptime viewpoint.

As Google made public from an otherwise paid-only study from the Radicati Group, in-house Exchange email systems see an average of about 2.5hrs of downtime per month or about 30 hours per year. That translates into an average across the board uptime of about 99.66 percent -- or a full 1.25 days of downtime per year on the flipside. Ouch.

Office 365, in contrast, has had a proven track record lately of achieving better than 99.9 percent uptime (as tracked between July 2012 and June 2013). For the sake of calculation, keeping to Microsoft's advertised SLA of 99.9 percent uptime, this translates into a downtime of only about 8.76 hours per year. If Microsoft's track record keeps up, it will be downright demolishing the uptime numbers of its on-premise email solution of the same blood.

Microsoft has got the uptime numbers to back up just how stable 365 is proving to be. On-premise Exchange servers are maintenance heavy; aren't always managed according to labor-intensive best practices; and usually don't have the expansive infrastructure that providers like Microsoft can offer on the cheap, in scale. This explains why in-house Exchange systems average an uptime of only 99.66 percent, and Office 365 is hitting a 99.9 percent+ month after month. You just can't compare the two anymore. (Credit to EzUTC.com for the visual uptime calculator.)
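Converting between an uptime percentage and hours of downtime is easy to do yourself. The sketch below assumes a 365-day year (8,760 hours); the function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def downtime_hours_per_year(uptime_percent: float) -> float:
    """Hours of downtime per year implied by an uptime percentage."""
    return (1 - uptime_percent / 100) * HOURS_PER_YEAR


def uptime_percent(downtime_hours: float) -> float:
    """Uptime percentage implied by yearly hours of downtime."""
    return (1 - downtime_hours / HOURS_PER_YEAR) * 100


# Office 365's advertised 99.9 percent SLA.
print(f"99.9% uptime -> {downtime_hours_per_year(99.9):.2f} hours down per year")

# In-house Exchange at roughly 30 hours of downtime per year.
print(f"30 hours down -> {uptime_percent(30):.2f}% uptime")
```

These work out to the 8.76 hours and 99.66 percent cited above. Multiply the downtime hours by your own cost per hour of downtime to see what each extra "nine" is worth to you.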

How do cloud providers achieve such excellent uptime figures, even in light of the bad PR they get in the media? The vast technical backbones that power cloud data centers are technologies that are out of reach for anyone except the largest enterprises. Geographically mirrored data sets, down to server cluster farms which number in the hundreds of systems each -- the average small business with a Dell server just can't compete.

And even in spite of being put under the microscope recently for a spate of short outages, Windows Azure has been winning over the experts in some extensive comparisons that are being done. For example, cloud storage vendor Nasuni released a study in February 2013 naming Azure the best overall cloud storage provider, ahead of Amazon S3, based on stress tests in availability, speed, scalability, and other head to head aspects.

"The results are clear: Microsoft Azure has taken a significant step ahead of Amazon S3 in almost every category tested," the study went on to say. "Microsoft's investment in its second generation cloud storage, which it made available to customers last year, has clearly paid off," according to Andres Rodriguez, the CEO of Nasuni.

Whichever direction you are heading with your IT plans, be sure that you know what your downtime cost per hour figure is, and what kind of uptime your prospective approach is going to afford you. That cheap on-premise solution that could be seeing 15-30 hours of downtime in a year may not be so attractive after all if you can put a hard number on your losses for each hour of being out of commission.

1) Anyone Can Compare Recurring Costs, But Do You Know Your TCO?

I saved number one for the very end, because this is the biggest gotcha of them all. The one that only the most in-depth business owners tend to put onto paper when running their numbers. Most of the time, this never even gets discussed because as I said earlier: out of sight, out of mind. How wrong such a mentality can be.

Total Cost of Ownership is the most accurate, objective way to place on-premise and cloud solutions on an apples to apples comparison table. This is because of the innate disparity between the two paths when viewed through the traditional lens. Recurring monthly costs tend to get far more attention than initial hardware CAPEX costs and the nasty "5 Year Rule" spike, which is why TCO is so useful: it puts all of these into perspective in the same frame.

Just because cloud services are almost exclusively dealt with in a subscription based model, this doesn't mean we can't compare our TCO across a five, eight, or ten year timespan. Using the simple TCO calculator I mentioned earlier in the 5 Year Rule discussion, we can find our ten year TCO on a given comparative decision for IT in the same manner.

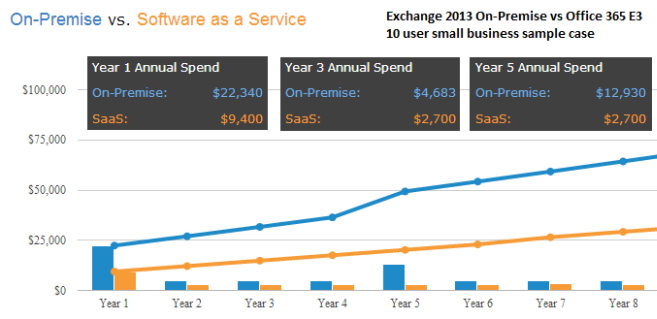

Let's circle back around to a very common scenario for my client base. Where should I host my email now that our eight year old Dell server is about to die? If we keep things in-house, we need a generously powered Dell server (I used a PowerEdge T420 tower from a recent customer quote in this example) with licensing for Exchange 2013 along with server user CALs and Exchange user CALs for ten people.

Likewise, a move to the cloud introduces the need for Office 365 E3 licensing for everyone which provides email, Lync, and Office 2013 download rights for all users. To make things fair, I included the cost of a $900 QNAP loaded with enterprise Seagate drives for on-prem file storage after the old server goes down, since our scenario doesn't make SharePoint Online a feasible option.

Here's how the eight year TCO lines up for such a comparison:

At year 1, on-premise hits our example customer with a heavy load of fees. Not only do we account for initial upfront hardware/licensing, but we need antivirus software, onsite backup software (we like ShadowProtect a lot), cloud backup (via CrashPlan Pro), not to mention monthly maintenance and patching of the server, electricity consumption, along with unforeseen hardware costs. 365 wins out on recurring costs month to month (by a little), and then our 5 Year Rule comes into effect and the cost of a new system pushes the on-premise TCO up quite heavily.

Most importantly, look where our TCO is after eight years. The on-premise route has us at around $65-68K, while over the same timeframe, the cloud approach with Office 365 would have put us at just about $30K. That's more than a 50 percent savings over eight years. Most people wouldn't have figured such costs, because once again, they are so squarely focused on their upfront CAPEX for on-prem or recurring costs on the cloud side.

Does the cloud always win out as shown above? Absolutely not. If I pitted an on-prem NAS storage box, like the aforementioned $900 QNAP with some enterprise Seagate drives for file storage, against something like Dropbox for Business or Box.net, the NAS would come out with a tremendously lower TCO. The cloud is not yet mature enough in the area of mass file storage for it to make financial sense to dump physical in-house storage options in exchange for such services. On a smaller scale, we've found SharePoint Online to be a great alternative for lighter needs, but not if you are hosting mass dumps of CAD drawings or media or similarly bulky file sets.

Run the numbers, do your math, and find out where your needs stand. If you don't understand what your 5, 8, 10 year (or longer) TCO looks like, you cannot pit the cloud and physical systems head to head at an accurately analytical level. Making the same mistake that I see time and time again at the organizations we consult will end up giving you the false impression that your chosen approach is saving you money, when in the end, that may be far from the case.

I'm not trying to be a blind advocate for one camp or another. My company is busily helping organizations come to proper conclusions so they can make their own decisions on what path is best for their futures. While the cloud is tipping the scale in its favor more often than not recently, I outlined many scenarios above where this is not the case.

Don't let salespeople guide your decisions based on their pitches alone. If you can objectively compare your own standing from TCO to downtime cost per hour, among other factors, you're in a position of power to make the best choice grounded in solid fact. And that's a position that invariably leads to the best results. There are enough tools available today, as I described above, which can make this comparison process as easy for the average Joe as it is for the CTO.

Photo credit: Tom Wang/Shutterstock

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with eight+ years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on growing hot technologies such as Office 365, Google Apps, and cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council that shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.