Meta is taking steps to reduce ‘spammy content on Facebook’ by hitting those responsible in the wallet

Facebook has moved so far from the platform it started as that it is hardly the same product. Many users would say it is not even the same platform it was a couple of years ago, and one of the biggest changes -- and irritants -- is the sheer volume of worthless content.

By this, we mean spam-like rubbish rather than stuff you’re just not interested in. Meta has announced that it is finally taking action that it hopes will reduce and discourage “spammy content”.

Meta acknowledges that some accounts “try to game the Facebook algorithm to increase views, reach a higher follower count faster or gain unfair monetization advantages”, and it plans to discourage this by limiting the reach of those accounts. The company says that it does not want authentic creator content to be lost in a sea of junk.

Explaining its new approach to handling spam, Meta says:

Some accounts post content with long, distracting captions, often with an inordinate amount of hashtags. While others include captions that are completely unrelated to the content -- think a picture of a cute dog with a caption about airplane facts. Accounts that engage in these tactics will only have their content shown to their followers and will not be eligible for monetization.

Meta will also detect activity such as the creation of multiple accounts to share and promote spam, and the accounts involved will have their reach reduced.

Accounts that pretend to be famous people or brands are a serious problem, and Meta says it is empowering creators with tools to report such accounts so they can be taken down.

Meta also says:

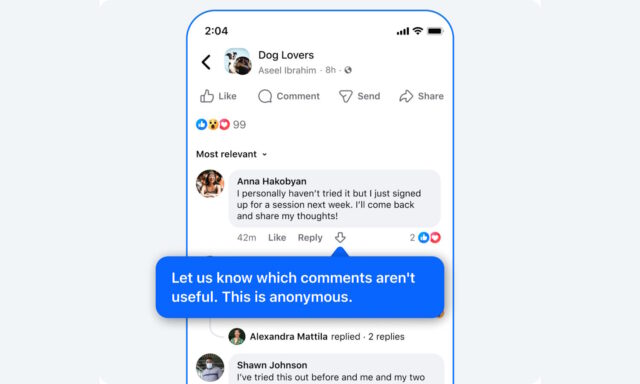

Spam networks that coordinate fake engagement are an unfortunate reality for all social apps. We’re going to take more aggressive steps on Facebook to prevent this behavior. For example, comments that we detect are coordinated fake engagement will be seen less. We also continue to monitor and remove fake pages that exist to inflate reach -- in 2024 we took down more than 100 Million fake Pages engaging in scripted follows abuse on Facebook. Along with these efforts, we’re also exploring ways to elevate more meaningful and engaging discussions. For example, we’re testing a comments feature so people can signal ones that are irrelevant or don’t fit the spirit of the conversation.

Will all of this make any difference? We’ll have to wait and see, but it’s really only a matter of time before spammers find a new way to use algorithms to their advantage and get around restrictions.