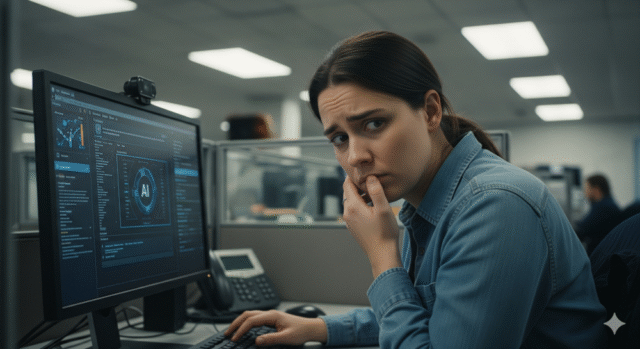

Shadow AI? No, nothing to see here…

Workers are increasingly using shadow AI, meaning AI tools their employer hasn't sanctioned, to draft emails, analyze data, or summarize meetings, while pretending they aren't.

New data from marketing agency OutreachX finds that 52 percent of US workers are worried about how AI will be used in their workplace in the future, and that 48 percent of desk workers would be uncomfortable telling a manager they had used AI for common tasks.

Top reasons for concealing AI use include fear of appearing to cheat or of seeming lazy or less competent, as well as unclear rules around using AI in the workplace. Some 45 percent of workers say they've used AI without telling a manager, and 25 percent admit to exaggerating their AI skills, which is classic impression management under stress. In addition, 27 percent report AI-fueled impostor feelings, while 30 percent hide their usage for fear that it could cost them their job.

There are, of course, real risks in all of this: 46 percent admit to uploading sensitive company data to public tools, while 44 percent knowingly use AI in unauthorized ways. Without clear governance and enablement, AI investments dissipate across shadow tools, fragmented workflows, and avoidable rework, potentially contributing to millions of dollars in lost productivity and efficiency.

Addressing the problem requires a cultural change in which disclosure is normalized, with employees adding an 'AI assist' note that explains how AI was used in a particular task. Companies also need to provision approved tools, define allowable data and use cases, restrict copy-pasting from sensitive systems into public models, and retain prompt/output logs where appropriate.

Training is key too, with workers who receive AI training up to 19 times more likely to report productivity gains. Regular AI users are also far more likely to report strong productivity, focus, and job satisfaction.

The report notes, “The story unfolding in many offices is not about fragile workers or technology hype. It is about organizational design. As long as employees believe acknowledging AI use will harm their reputation or trigger scrutiny, they will default to secrecy. That secrecy increases errors, weakens oversight, and undermines returns on AI initiatives. Organizations that codify disclosure, sanction safe tools, teach evaluation, provide in-flow guidance, assign ownership, and frame AI as assistive can convert anxiety into competence and move ‘AI shame’ into standard, auditable workflow.”

Have you hidden your AI use at work? Let us know in the comments -- we promise we won’t tell!