41 percent of schools suffer AI-related cyber incidents

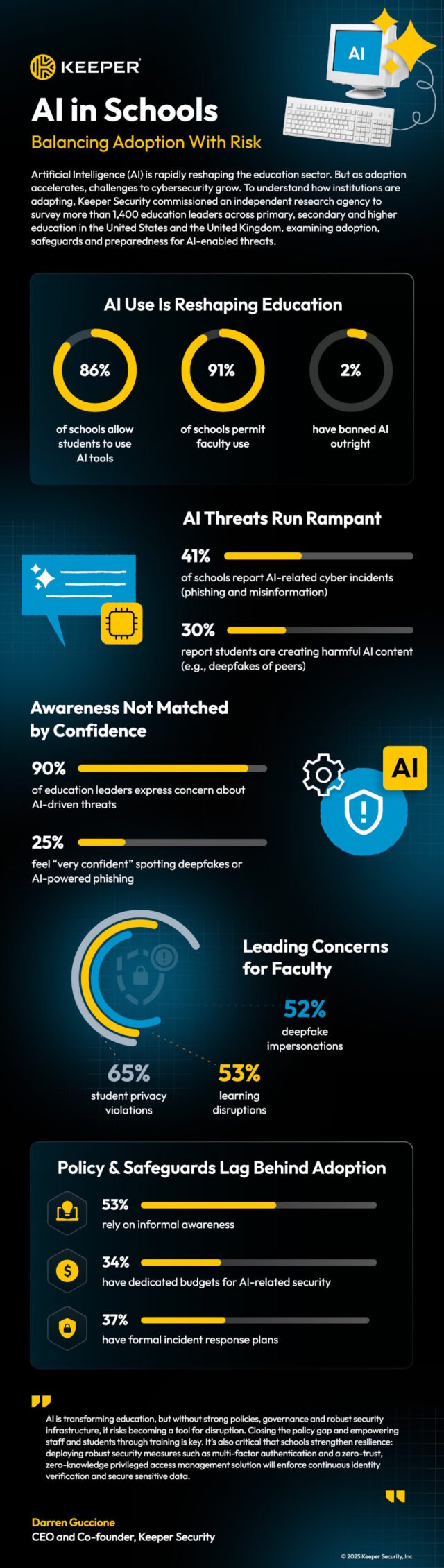

A new survey of more than 1,400 education leaders across primary, secondary and higher education in the UK and US finds that while AI is already integrated into classrooms and faculty work, the development of the policies and protections needed to manage the new risks it brings to schools is lagging.

The study from Keeper Security shows 41 percent of schools have experienced AI-related cyber incidents, including phishing campaigns and misinformation, while nearly 30 percent reported instances of harmful AI content, such as deepfakes created by students.

“AI is redefining the future of education, creating extraordinary opportunities for innovation and efficiency,” says Darren Guccione, CEO and co-founder of Keeper Security. “But opportunity without security is unsustainable. Schools must adopt a zero-trust, zero-knowledge approach to ensure that sensitive information is safeguarded and that trust in digital learning environments endures.”

While 86 percent of institutions allow students to use AI tools and 91 percent permit faculty use, most schools have only informal guidelines rather than formalised AI policies. Although 90 percent of education leaders express some level of concern about AI-related cybersecurity threats, only one in four respondents feel ‘very confident’ in recognizing AI-enabled threats like deepfakes or AI-driven phishing.

Anne Cutler, cybersecurity evangelist at Keeper Security, says:

“Artificial Intelligence (AI) is already part of the classroom, but our recent research shows that most schools are relying on informal guidelines rather than formal policies. That leaves both students and faculty uncertain about how AI can safely be used to enhance learning and where it could create unintended risks.

“What we found is that the absence of policy is less about reluctance and more about being in catch-up mode. Schools are embracing AI use, but governance hasn’t kept pace. Policies provide a necessary framework that balances innovation with accountability. That means setting expectations for how AI can support learning, ensuring sensitive information such as student records or intellectual property cannot be shared with external platforms and mandating transparency about when and how AI is used in coursework or research. Taken together, these steps preserve academic integrity and protect sensitive data.”

The full report is available from the Keeper site and there’s an infographic of the main findings below.

Image credit: monkeybusiness/depositphotos.com