Microsoft continues to look beyond the glass screen with new touch experiments

At the ACM Symposium on User Interface Software and Technology (UIST) in Santa Barbara, California this week, Microsoft researchers are showing off experimental touch interaction projects that look beyond the flat glass touchscreen to other surfaces and settings where touch sensitivity could be employed.

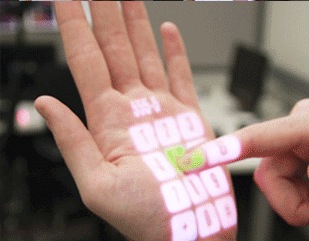

OmniTouch, one of the projects making a major appearance this week, uses a pico projector and a Kinect-like depth-sensing camera to project "clickable" images onto any surface. It's quite similar to the device we first saw from the MIT Fluid Interfaces Group three years ago, which used a pico projector, smartphone, and camera to put an interface layer over the real world. The critical difference is OmniTouch's three-dimensional camera, which can tell a click from a hover and allows for a much more sensitive interface.
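To make the click-versus-hover distinction concrete, here is a minimal sketch of how a depth reading could separate the two. It is not OmniTouch's actual algorithm: the function name `classify_touch` and both millimeter thresholds are assumptions chosen for illustration.

```python
def classify_touch(finger_depth_mm, surface_depth_mm,
                   click_mm=10.0, hover_mm=30.0):
    """Classify a fingertip as 'click', 'hover', or 'none'.

    Depths are distances from the camera. The fingertip sits between the
    camera and the surface, so the gap is surface depth minus finger depth.
    Both thresholds are hypothetical values, not figures from the project.
    """
    # Clamp at zero so sensor noise cannot produce a negative gap.
    gap = max(0.0, surface_depth_mm - finger_depth_mm)
    if gap <= click_mm:
        return "click"
    if gap <= hover_mm:
        return "hover"
    return "none"


print(classify_touch(495.0, 500.0))  # finger 5 mm above the surface
print(classify_touch(480.0, 500.0))  # finger 20 mm above the surface
```

A plain 2D camera has no reliable way to measure that gap, which is why a depth sensor makes the difference here.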

PocketTouch is another one of the projects on display this week, and its name illustrates very clearly what it does. Using capacitive sensors and an interface that is designed to work "eyes free," PocketTouch lets users interact with a touchscreen device while it's still in their pocket, through the fabric of their clothing.

What's more, the UI can be oriented however the user needs it, without a gyroscope, and the interface's touch sensitivity adjusts to the thickness of the fabric and the amount of "background noise" it creates.
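One common way to adapt touch sensitivity to background noise is to calibrate a threshold from readings taken while nothing is touching the sensor. The sketch below shows that general idea only. Whether PocketTouch works this way is not stated here, and the names `calibrate_threshold` and `is_touch`, along with the multiplier `k`, are assumptions.

```python
import statistics


def calibrate_threshold(idle_samples, k=3.0):
    """Return a touch threshold derived from idle (no-touch) sensor readings.

    The threshold is the idle mean plus k standard deviations, so noisier
    conditions (for example, thicker fabric) raise the bar for a touch.
    """
    mean = statistics.fmean(idle_samples)
    spread = statistics.pstdev(idle_samples)
    return mean + k * spread


def is_touch(sample, threshold):
    """A reading counts as a touch only if it exceeds the threshold."""
    return sample > threshold


quiet = [10, 12, 11, 9, 13]   # hypothetical readings through thin fabric
noisy = [5, 17, 11, 2, 20]    # hypothetical readings through thick fabric

print(calibrate_threshold(quiet) < calibrate_threshold(noisy))
```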

Access Overlays, a third technology debuting this week, is a set of three touchscreen add-ons that make touchscreen navigation more useful for visually impaired users. The add-ons, called Edge Projection, Neighborhood Browsing, and Touch-and-Speak, each approach touchscreen usage in a different way.

The Edge Projection overlay turns a 2D touch interface into an X-Y coordinate map. It places a frame around the outside of the screen with "edge proxies" that correspond to the on-screen targets' X-Y coordinates. Touching an edge proxy highlights the corresponding on-screen target and reads its name. Neighborhood Browsing turns touch targets into bigger, generalized areas outlined by white-noise audio feedback, which is filtered at different frequencies to differentiate touch "neighborhoods." Touch-and-Speak combines touch gestures with spoken voice commands to manipulate an interface.
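The edge-proxy idea can be sketched as a lookup along one axis: a touch on the frame is matched to the target whose coordinate on that axis is closest. This is an illustration under stated assumptions rather than the researchers' implementation. The `Target` type, the edge names, and `target_for_edge_touch` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str
    x: float
    y: float


def target_for_edge_touch(targets, edge, position):
    """Return the target whose proxy is nearest to a touch on the frame.

    A touch on the 'bottom' edge is compared against x coordinates, and a
    touch on the 'left' edge against y coordinates.
    """
    if edge == "bottom":
        return min(targets, key=lambda t: abs(t.x - position))
    if edge == "left":
        return min(targets, key=lambda t: abs(t.y - position))
    raise ValueError(f"unknown edge: {edge!r}")


targets = [Target("Mail", 100, 50), Target("Maps", 300, 400)]

# In a real overlay the name would be spoken aloud; here it is printed.
print(target_for_edge_touch(targets, "bottom", 290).name)
print(target_for_edge_touch(targets, "left", 60).name)
```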

Another interface that will be shown at UIST, called Portico, augments touchscreens with multiple cameras. The system lets users either touch the screen as they normally would or use the space around the outside of their tablet. It even lets them use external objects on top of or near the screen to interact with running applications.

Other new technologies Microsoft's researchers are showing off at UIST this week include Pause-and-Play, a way to link screencast tutorials with the applications they're displaying; KinectFusion, a 3D object scanner based on a moving Kinect camera; and Vermeer, an interaction model for a 360-degree "Viewable 3D" display.