Betanews Relative Performance Index for browsers 3.0: How it works and why

The Betanews test suite for Windows-based Web browsers is a set of tools for measuring the performance, compliance, and scalability of the processing component of browsers, particularly their JavaScript engines and CSS renderers. Our suite does not test the act of loading pages over the Internet, or anything else that is directly dependent on the speed of the network.

But what is it measuring, really? The suite is measuring the browser's capability to perform instructions and produce results. In the early days of microcomputing, computers (before we called them PCs) came with interpreters that processed instructions and produced results. Today, browsers are the virtual equivalent of Apple IIs and TRS-80s -- they process instructions, and produce results. Many folks think they're just using browsers to view blog pages and check the scores. And then I catch them watching Hulu or playing a game on Facebook or doing something silly on Miniclip, and surprise, they're not just reading the paper online anymore. More and more, a browser is a virtual computer.

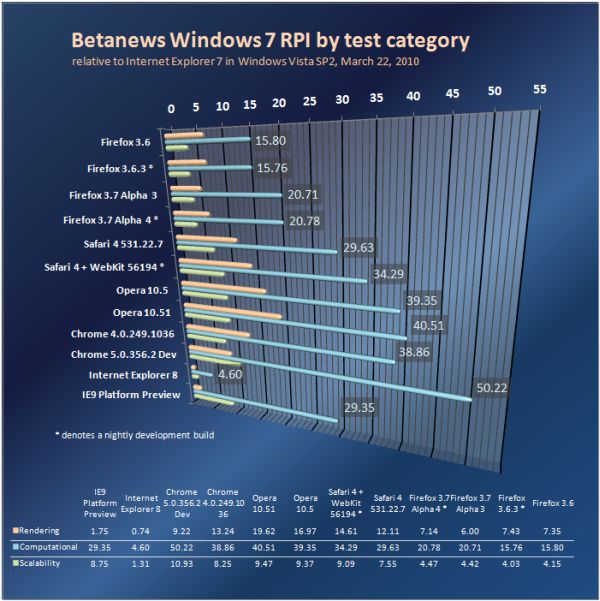

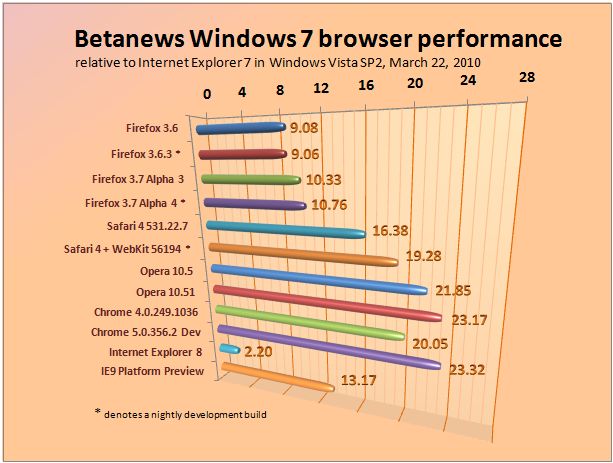

So I test it like a computer, not like a football scoreboard. The final result is a raw number that, for the first time on Betanews, can be segmented into three categories: computational performance (raw number crunching), rendering performance, and scalability (defined below). These categories are like three aspects of a computer; they are not all the aspects of a computer, but they are three important ones. They're useful because they help you to appreciate the different browsers for the quality of the work they are capable of providing.

Many folks tell me they don't do anything with their browsers that requires any significant number crunching. The most direct response I have to this is: Wrong. Web pages are sophisticated pieces of software, especially where cross-domain sharing is involved. They're not pre-printed and reproduced like something from a fax machine; more and more, they're adaptive composites of both textual and graphic components from a multitude of sources that come together dynamically. The degree of math required to make everything flow and align just right is severely under-appreciated.

Why is everything relative?

By showing relative performance, the RPI aims to give you a simple-to-understand gauge of how two or more browsers compare to one another on any machine you install them on. That's why we don't render results in milliseconds. Instead, all of our results are multiples of the performance of a relatively slow Web browser that is not a recent edition: Microsoft Internet Explorer 7, running on Windows Vista SP2 (the slowest of the three most recent Windows versions), on the same machine (more about the machine itself later).
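As a rough illustration of what "a multiple of the baseline's performance" can mean, here is a minimal sketch. It assumes each test yields an elapsed time in milliseconds, so that a lower time means a higher index. The function name `relativeIndex` and the timings are hypothetical and are not taken from the suite itself.

```typescript
// Hypothetical helper: express a browser's result as a multiple of the
// baseline browser's result. Assumes both inputs are elapsed times in
// milliseconds for the same test on the same machine (lower is better).
function relativeIndex(baselineMs: number, browserMs: number): number {
  // A reading of 0 ms cannot be turned into a ratio; such a test would
  // need a larger workload before it could be compared this way.
  if (baselineMs <= 0 || browserMs <= 0) {
    throw new RangeError("elapsed times must be positive");
  }
  return baselineMs / browserMs;
}

// Made-up timings, for illustration only.
const baselineTimeMs = 1200; // the baseline browser takes 1200 ms
const candidateTimeMs = 300; // the browser under test takes 300 ms
console.log(relativeIndex(baselineTimeMs, candidateTimeMs)); // 4
```

An index of 4 here would read as "four times the baseline's performance on this test," whatever the absolute speed of the machine.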

I'm often asked, why don't I show results relative to IE8, a more recent version? The answer is simple: It wouldn't be fair. Many users prefer IE8 and deserve a real measurement of its performance. When Internet Explorer 9 debuts, I will switch the index to reflect a multiple of IE8 performance. In years to come, however, I predict it will be more difficult to find a consistently slow browser on which to base our index. I don't think it's fair for anyone, including us, to presume IE will always be the slowest brand in the pack.

Browsers will continue to evolve; and as they do, certain elements of our test suite may become antiquated, or unnecessary. But that time hasn't come yet. JavaScript interpreters are, for now, inherently single-threaded. So the notion that some interpreters may be better suited to multi-core processors has not been borne out by our investigation, including the information we've been given by browser manufacturers. Also, although basic Web standards compliance continues to be important (our tests impose a rendering penalty for not following standards, where applicable), HTML 5 compliance is not a reality for any single browser brand. Everyone, including Microsoft, wants to be the quintessential HTML 5 browser, but the final working draft of the standard was only published by W3C weeks ago.

There will be a time when HTML 5 compliance should be mandatory, and we'll adapt when the time comes.

What's this "scalability" thing?

In speaking recently with browser makers -- especially with the leader of the IE team at Microsoft, Dean Hachamovitch -- I came to agree with a very fair argument about our existing test suite: It failed to account for performance under stress, specifically with regard to each browser's capability to crunch harder tasks and process them faster when necessary.

A simple machine slows down proportionately as its workload increases linearly. A well-programmed interpreter is capable of literally pre-digesting the tasks in front of it, so that at the very least it doesn't slow down as much -- if planned well, it can accelerate. Typical benchmarks have the browser perform a number of tasks repetitively. But because JavaScript interpreters are (for now) single-threaded, when scripts are written to interrupt a sequence of reiterative instructions every second or so -- for instance, to update the elapsed time reading, or to spin some icon to remind the user the script is running -- that very act works against the interpreter's efficiency. Specifically, it inserts events into the stream that disrupt the flow of the test, and work against the ability of the interpreter to break it down further. As I discovered when bringing together some tried and true algorithms for our new test suite, including one-second or even ten-second timeouts messes with the results.

Up to now, the third-party test suites we've been using (SunSpider, SlickSpeed, JSBenchmark) perform short bursts of instructions and measure the throughput within given intervals. These are fair tests, but only under narrow circumstances. With the tracing abilities of JavaScript interpreters used in Firefox, Opera, and the WebKit-based browsers, the way huge sequences of reiterative processes are digested is different from the way short bursts are digested. I discovered this, with the help of engineers from Opera, when debugging the old test battery I've since thrown out of our suite: A smart JIT compiler can dissolve a thousand or a million or ten million instructions that effectively do nothing into a few void instructions that accomplish the same nothing. So if a test pops up a "0 ms" result, and you increase the workload by a factor of, say, a million, and it still pops up "0 ms"... that's not an error. That's the sign of a smart JIT compiler.
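The difference between work that does nothing and work that produces something can be sketched as follows. This is only an illustration of the general idea, not code from any of the suites named above; the function names `busyNothing`, `sumTo`, and `timeMs` are hypothetical, and whether a given engine actually removes the empty loop depends on that engine.

```typescript
// A loop whose result is never used. An optimizing compiler is free to
// remove it entirely, so its measured time may not grow with the workload.
function busyNothing(iterations: number): void {
  for (let i = 0; i < iterations; i++) {
    // intentionally empty
  }
}

// A loop whose result is returned, so the computed value is observable
// and the engine must still deliver it correctly.
function sumTo(iterations: number): number {
  let total = 0;
  for (let i = 0; i < iterations; i++) {
    total += i;
  }
  return total;
}

// Times a function with Date.now(), which resolves to whole milliseconds.
function timeMs(work: () => void): number {
  const start = Date.now();
  work();
  return Date.now() - start;
}

const emptyLoopMs = timeMs(() => busyNothing(10000000));

// Keep the sum in a variable that is printed afterwards, so the work
// cannot be treated as having no effect.
let checksum = 0;
const sumLoopMs = timeMs(() => {
  checksum = sumTo(10000000);
});

console.log(`empty loop: ${emptyLoopMs} ms`);
console.log(`summing loop: ${sumLoopMs} ms (checksum ${checksum})`);
```

Printing the checksum is the design choice that matters here: a test that discards its own result invites the compiler to discard the test.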

The proof of this premise also shows up when you vary the workload of a test that does a little something more than nothing: Browsers like Chrome accelerate the throughput when the workload is greater. So if their interpreters "knew" the workload was great to begin with, rather than seeing only short bursts or infinite loops broken by timeouts (whose depth perhaps cannot be traced in advance), they might actually present better results.
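One way to put a number on "accelerating the throughput" is to compare work done per millisecond at two workload sizes. The sketch below is an assumption about how such a comparison could be made, not the formula the suite uses; `scalingFactor` and the timings are hypothetical.

```typescript
// Hypothetical measure of how throughput changes as the workload grows.
// Each pair is (units of work, elapsed milliseconds) for one run.
// Returns > 1 if the larger run was processed faster per unit,
// 1 if time grew in proportion to the work, and < 1 if it slowed down.
function scalingFactor(
  smallUnits: number,
  smallMs: number,
  largeUnits: number,
  largeMs: number
): number {
  if (smallUnits <= 0 || largeUnits <= 0 || smallMs <= 0 || largeMs <= 0) {
    throw new RangeError("units and elapsed times must be positive");
  }
  const smallThroughput = smallUnits / smallMs; // units per millisecond
  const largeThroughput = largeUnits / largeMs;
  return largeThroughput / smallThroughput;
}

// Made-up timings: ten times the work, but only five times the time.
console.log(scalingFactor(1000, 10, 10000, 50)); // 2
// Ten times the work in ten times the time: strictly proportional.
console.log(scalingFactor(1000, 10, 10000, 100)); // 1
```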

That's why I've decided to make scalability count for one-third of our overall score: Perhaps an interpreter that's not the fastest to begin with is still capable of finding that extra gear when the workload gets tougher; and perhaps we could appreciate that fact if we only took the time to do so.
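To make the one-third weighting concrete, here is a minimal sketch of a combined score. The text states only that scalability counts for one-third; giving the other two categories one-third each is an assumption made for this example, and the `CategoryScores` type, the `overallScore` function, and the numbers are all hypothetical.

```typescript
// Hypothetical per-category scores, each already expressed as a multiple
// of the baseline browser's performance.
interface CategoryScores {
  computation: number;
  rendering: number;
  scalability: number;
}

// Assumption: all three categories carry equal weight, so scalability
// contributes exactly one-third of the total.
function overallScore(scores: CategoryScores): number {
  return (scores.computation + scores.rendering + scores.scalability) / 3;
}

// Made-up category scores, for illustration only.
const exampleScores: CategoryScores = {
  computation: 6,
  rendering: 3,
  scalability: 9,
};
console.log(overallScore(exampleScores)); // 6
```

In this made-up case, a browser with middling rendering still lands at 6 overall because its strong scalability figure pulls the average up.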

Next: The newest member of the suite...