What Carrie Underwood's success teaches us about IBM's Watson failure

I have a TV producer friend I worked with years ago who at some point landed as one of the many producers of American Idol when that singing show was a monster hit dominating U.S. television. She later told me an interesting story about Carrie Underwood, the country-western singer who won American Idol Season 4. That story can stand as a lesson applicable to far more than just TV talent shows. For the purposes of this column, it's especially useful in explaining IBM's Watson technology and associated products.

You see, the producers of American Idol Season 4 knew before the season was half over that Underwood would win. By the same token, I'm about to argue that IBM already knows its Watson artificial intelligence technology has lost. In each case they chose not to tell us.

In the case of American Idol, the likelihood that Carrie Underwood would be the eventual winner was clear from the very start of audience voting. In case you've forgotten, the premise of Idol was that professional judges would audition, accept, then eliminate singers for the first half of the season, at which point judging was handed over to the TV audience, voting by phone, text, or online to choose the eventual winner. What made Underwood different from the three previous Idol winners, according to my friend the producer, was that this time the votes weren't close at all, right from the start. The country-western singer not only came out on top of every weekly vote but often garnered an outright majority of the total vote against many other singers. Someone was always in second place, but second place was never close enough to matter.

Fearing that what was clearly a blow-out victory would lose the interest of the TV audience, the Idol producers decided to keep the actual vote totals a secret. Yes, Underwood was the winner week after week, but the show never said how close the vote was. They implied, in fact, that votes were close when they never were. They created a sense of competition where there really wasn't one. This was what they felt they had to do for the sake of their TV show, which was, after all, a business. If people no longer felt they had to support their favorite (Underwood), or realized that continuing to support their favorite (anyone but Underwood) was pointless, well, the show wouldn't have had as many viewers and wouldn't have been as profitable.

What the heck does any of this have to do with IBM, you ask? Since you are already sick of me writing about IBM, I'll argue that it has to do with any technology company that has made a huge commitment to some new product line, only to have that line come up short in the voting that customers do with their buying dollars.

It's very difficult to tell from IBM's reported financials whether Watson is a success or not. As I have already reported, the way IBM segments its revenue makes it difficult to see whether the company's so-called strategic initiatives are making money. This is especially the case for Watson, whose results are not only mixed in with revenue that decidedly does not come from what IBM calls Cognitive Computing but are also split across two different reporting segments. So while IBM continues to talk a good game for Watson, it isn't at all clear whether Watson is delivering much in the way of sales.

Or at least it was not clear until now.

Recently I came into possession of some internal IBM data concerning Watson product registrations. Almost anyone can try Watson for free, but doing so requires first registering with IBM. There is pretty much no entry barrier for these registrations. If a single development group inside a corporation decides to give Watson a try, that counts for the whole company: they are IN.

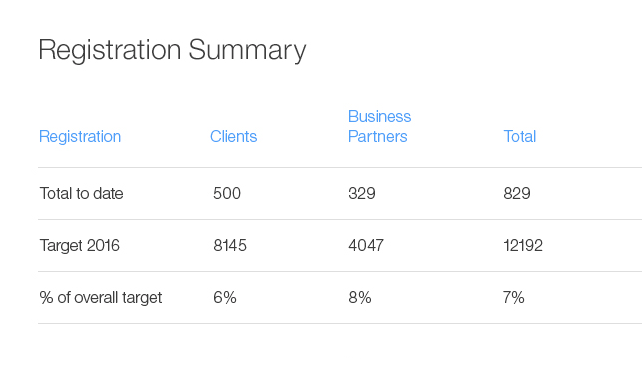

Here are the Watson registration numbers as of approximately one week ago:

Let's parse these very real internal IBM numbers and decide what they mean. Clients are IBM corporate and institutional customers: everything from banks to universities to government agencies. So far precisely 500 of these have registered as Watson users. That number, in itself, is questionable. Precisely five hundred, really? But let's accept it. The client target for 2016 was 8,145, of which 500 represents about 6 percent, so Watson is presently missing its client registration target by roughly 94 percent.

IBM business partners are companies that resell IBM products or services. Watson business partners are specific to Watson. This chart says there are 329 Watson business partners, though IBM's 2016 target is 4,047. Admittedly 2016 is far from over, but at this point IBM appears to be 92 percent behind its target.

For a program that is at least three years old, this level of sales performance is dismal. If only 8 percent of the companies that are supposed to be selling Watson have even minimal experience with the technology, it's difficult to say it is even broadly available. Certainly the cloud-like sales increases of 30 percent or more per year aren't happening for Watson.

At what point will IBM admit this? Not until it is forced to.

If I were to hazard a guess as to why these numbers are so bad (and understand, this is only a guess), it's because the most prominently missing Watson customer is IBM itself. Big Blue has made the most fundamental mistake in high tech: it doesn't eat its own dog food.