COVID-19 apps: Social responsibility vs. privacy

The growth of coronavirus cases in a number of countries has led to talk about a second wave of the pandemic. According to the WHO, disturbing news is coming from China, the US, Israel, South Korea, Iran and other countries. At the same time, privacy problems in COVID mobile apps are resurfacing with new force.

It is widely believed that Android app users do not know exactly how their personal data and the information transmitted by apps are used. Warning signs of privacy violations are coming from around the world. Add to that the shortcomings and outright errors on the part of developers, and the threat of unauthorized access to personal information grows even further.

Millions of downloads and high ratings of many apps confirm that users voluntarily put apps on their smartphones and then willingly evaluate their performance. But what remains behind the scenes for most of us, the ordinary users? Even in cases where users confirm data transfer, you can detect a large number of violations that are not visible at first glance, including hidden tracking and encryption problems during data transfer.

COVID apps are a rather interesting case, because their developers are officially pursuing important social goals: containing and monitoring the pandemic using modern digital technologies. People all over the world are showing civic engagement: they install applications developed by public authorities and take part in research by private companies and research centers. That said, it is important to ensure the security and confidentiality of the transmitted data. Violations such as improper use of information and data transfer without adequate protection need to be detected in time.

Below, we analyze whether citizens around the world can trust COVID apps and what data can leak from these apps. This article is based on a study by Aligned Research Group, which analyzed 24 applications.

Main problems with privacy

App users, primarily of Android devices, have long been under the "soft" control of thousands of apps developed by private companies. We’re talking about medical and sports apps, social networks, etc. In most cases, users voluntarily give permission to track their personal data.

Since the beginning of the COVID pandemic, many mobile apps have appeared which were developed at the state level. The use of information technologies is really necessary in the current conditions to track the movement of people and their interactions, assess their well-being, and, most importantly, to regularly inform the population about the current situation.

Due to the widespread use of COVID applications, the following issues are of concern:

- Active use of social network trackers in these apps.

- Use of analytical system trackers, primarily Google Analytics.

- Problems with data transfer encryption. Most often these are shortcomings or errors on the part of developers.

- Possible hidden unauthorized tracking of personal data.

Let's take a look at several regions and their corresponding popular COVID applications.

EU and US

EU and US citizens are wary about the security of their personal data. Tracking personal data in apps developed by government authorities can easily be explained by the need to stop and control the pandemic. But can users entrust their personal data to private companies that develop COVID applications?

Large pharmaceutical companies are closely monitored not only by the regulatory authorities, but also by their competitors and the public. Any unauthorized collection of personal data can cause a big scandal and serious reputational losses. This is why most applications officially report the purpose of data collection. There are hundreds of medical applications that people use today, but COVID apps stand out because they appeal to a sense of belonging to a common cause and call for social responsibility.

For example, the COVID Symptom Tracker app (UK) has been installed by more than a million users so far. COVID Symptom Tracker is owned by the private nutritional science company Zoe Global Limited, which selects nutrition based on individual body indicators.

The official purpose of the app is to collect data for a study conducted by King's College London and the National Health Service. Data collection is authorized by the Welsh government, NHS Wales, the Scottish government, and NHS Scotland. The collected data is transmitted to and analyzed by the King's College London and ZOE research groups. People are encouraged to show civic engagement and report their symptoms on a daily basis. Users voluntarily submit the following information: body indicators and medical certificates.

The developer promises that "no information you share will be used for commercial purposes." However, the 10 advertising and analytics modules built into the application, including trackers tied to social networks, are cause for concern: Google Crashlytics, Google Analytics, Firebase Google Analytics, Facebook Ads, Facebook Analytics, Facebook Login, Facebook Places, Facebook Share, Google Ads, and Amplitude. It is possible that users' data will be used in the future not only for public research, but also for internal research and for advertising purposes.

Almost all apps in Europe use the same set of permissions, including running in the background, getting geolocation, sending notifications, and working with Bluetooth. However, the Stopp Corona (Austria) and STOP COVID 19 KG (Kyrgyzstan) applications also require access to the microphone, and the latter also requires access to the camera and storage.
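To illustrate how such extra permissions can be spotted, here is a minimal sketch in Python. It assumes the list of requested permissions has already been extracted from an app's manifest. The baseline set and the sample list are hypothetical and do not reproduce the manifest of any app named in this article. The permission strings are standard Android identifiers, and `android.permission.INTERNET` is added to the baseline as our own assumption.

```python
# Assumed baseline for a contact-tracing app, loosely derived from the
# permission groups named above (background work, geolocation, Bluetooth).
# INTERNET is our own addition; notifications needed no separate
# permission on Android versions current in 2020.
EXPECTED_PERMISSIONS = {
    "android.permission.ACCESS_COARSE_LOCATION",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.BLUETOOTH",
    "android.permission.BLUETOOTH_ADMIN",
    "android.permission.FOREGROUND_SERVICE",
    "android.permission.INTERNET",
}


def unexpected_permissions(requested):
    """Return the requested permissions that fall outside the baseline, sorted."""
    return sorted(set(requested) - EXPECTED_PERMISSIONS)


# Hypothetical permission list, for illustration only.
sample_manifest = [
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.BLUETOOTH",
    "android.permission.RECORD_AUDIO",
    "android.permission.CAMERA",
]

for permission in unexpected_permissions(sample_manifest):
    print("Review before installing:", permission)
```

A flagged permission is not proof of abuse. It only marks something the user or a reviewer may want explained before trusting the app.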

Most of the analyzed apps track users' geolocation, and some continue to work in the background. This means that the data of millions of people around the world is processed in real time, and potentially might be under threat of unauthorized transfer and use by third parties.

In January 2020, Facebook notified the public about "Off-Facebook Activity": even when the social network is closed on a user's phone, it continues to receive data. Facebook has been partnering with apps to track and target customers for years. We can assume that data from COVID apps with Facebook trackers can be used for advertising purposes. Thus, even at a time when the world has become vulnerable, brands continue to make money. Given the pandemic and the way these apps are positioned, this seems unethical.

Asia and Africa

The security of apps developed by governments in Asia and Africa to monitor the pandemic is rightly a concern for human rights organizations such as Amnesty International. Perhaps the problem would not be so acute if it were limited to tracking users only in these countries, but digital technologies make contact tracing possible all over the world.

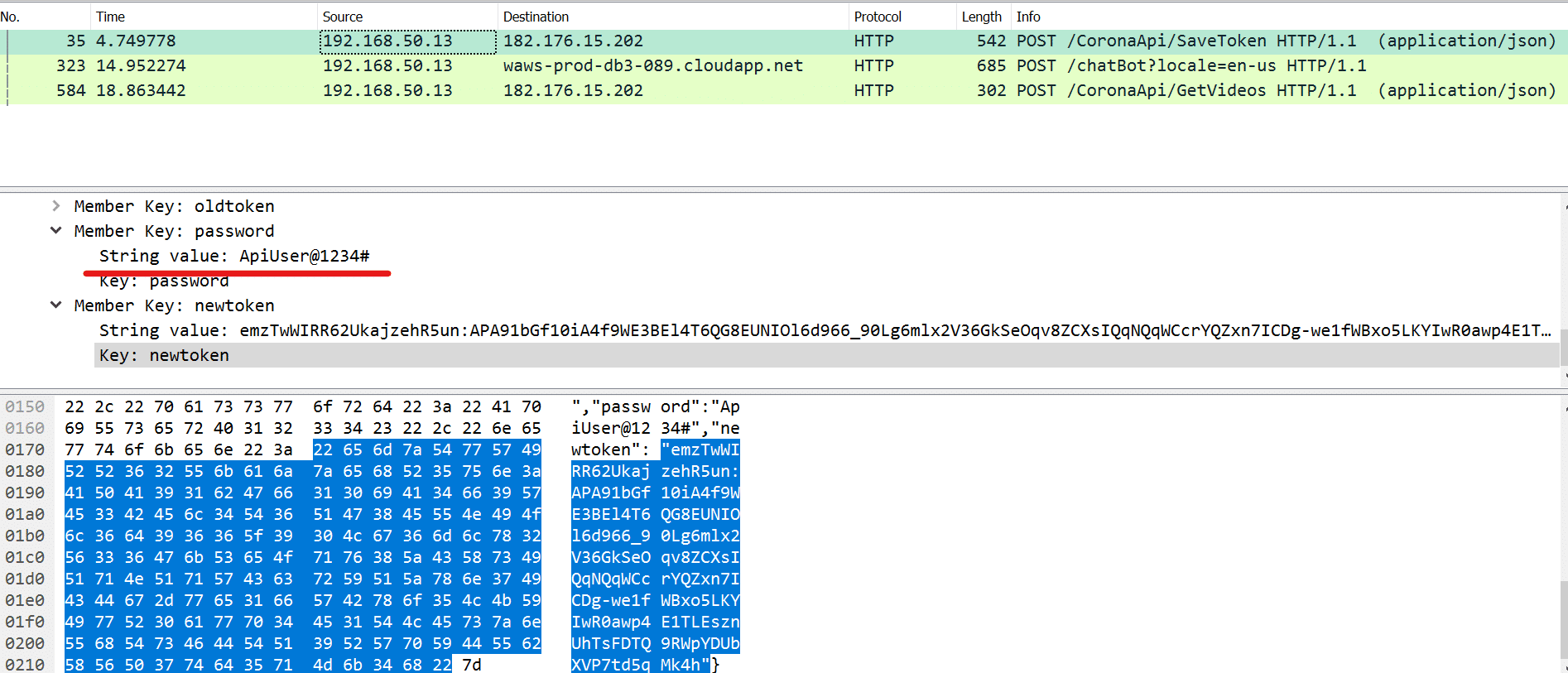

COVID-19 GOV PK (Pakistan) is developed and owned by the National Information Technology Board (National IT Board), the Ministry of IT and Telecom, and the Government of Pakistan. This app provides citizens with a chatbot, as well as informational videos about the pandemic and possible ways to control its spread. It has been installed more than 500,000 times.

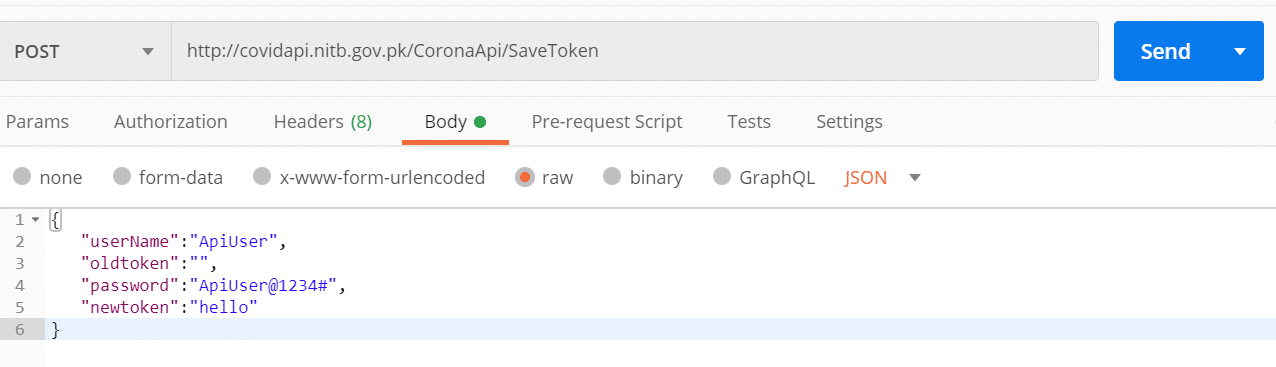

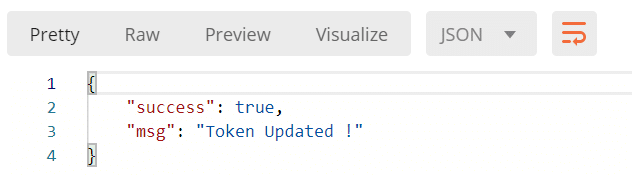

In this request, which the application uses to send the token to the server, the API user's password is transmitted in plain text. This is a clear flaw that requires the developers' attention.

Interestingly, the server does not seem to use any token validation: when trying to send a request with the "hello" token, the server returns a message that the token was successfully updated.
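The study does not describe the token format or the server implementation, so the following is only a generic sketch of what server-side validation could look like. It assumes a hypothetical scheme in which the server issues tokens signed with HMAC-SHA256 and later rejects anything whose signature does not match. The names `SERVER_SECRET`, `issue_token`, and `is_valid_token` are invented for this illustration.

```python
import hashlib
import hmac

# Hypothetical secret known only to the server. A real deployment would
# load it from secure storage rather than hard-code it.
SERVER_SECRET = b"example-secret-do-not-use"


def _sign(device_id: str) -> str:
    """Compute the hex HMAC-SHA256 signature of a device ID."""
    return hmac.new(SERVER_SECRET, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(device_id: str) -> str:
    """Return a token of the form '<device_id>.<signature>'."""
    return f"{device_id}.{_sign(device_id)}"


def is_valid_token(token: str) -> bool:
    """Accept only tokens whose signature matches the device ID they carry."""
    device_id, separator, signature = token.rpartition(".")
    if not separator or not device_id:
        return False
    # Constant-time comparison avoids leaking signature bytes through timing.
    return hmac.compare_digest(signature.encode("utf-8"), _sign(device_id).encode("utf-8"))


print(is_valid_token(issue_token("device-123")))  # a token the server issued
print(is_valid_token("hello"))                    # an arbitrary string
```

Under this scheme an arbitrary string such as "hello" carries no valid signature and is refused instead of being reported as successfully updated.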

Latin America

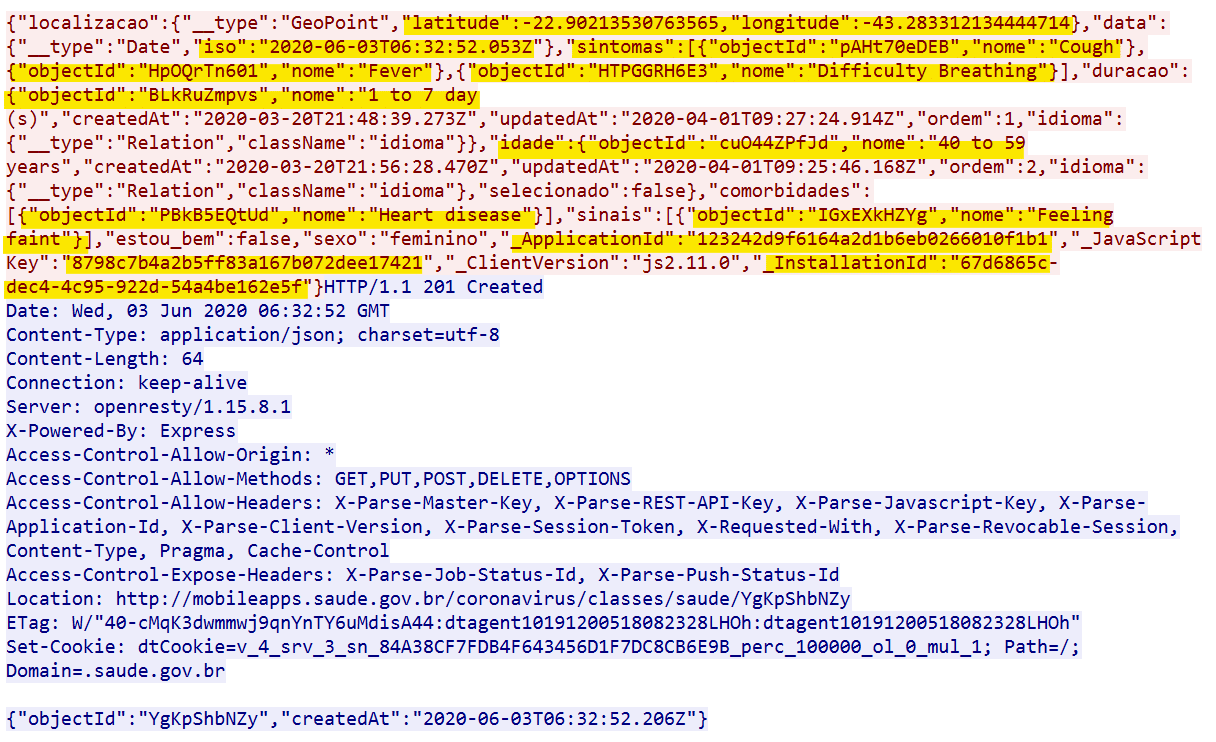

The application Coronavírus - SUS (Brazil), developed by the government of Brazil, can serve as an example of how even working with depersonalized data can have negative consequences for users in case of violations and errors in the implementation of encryption.

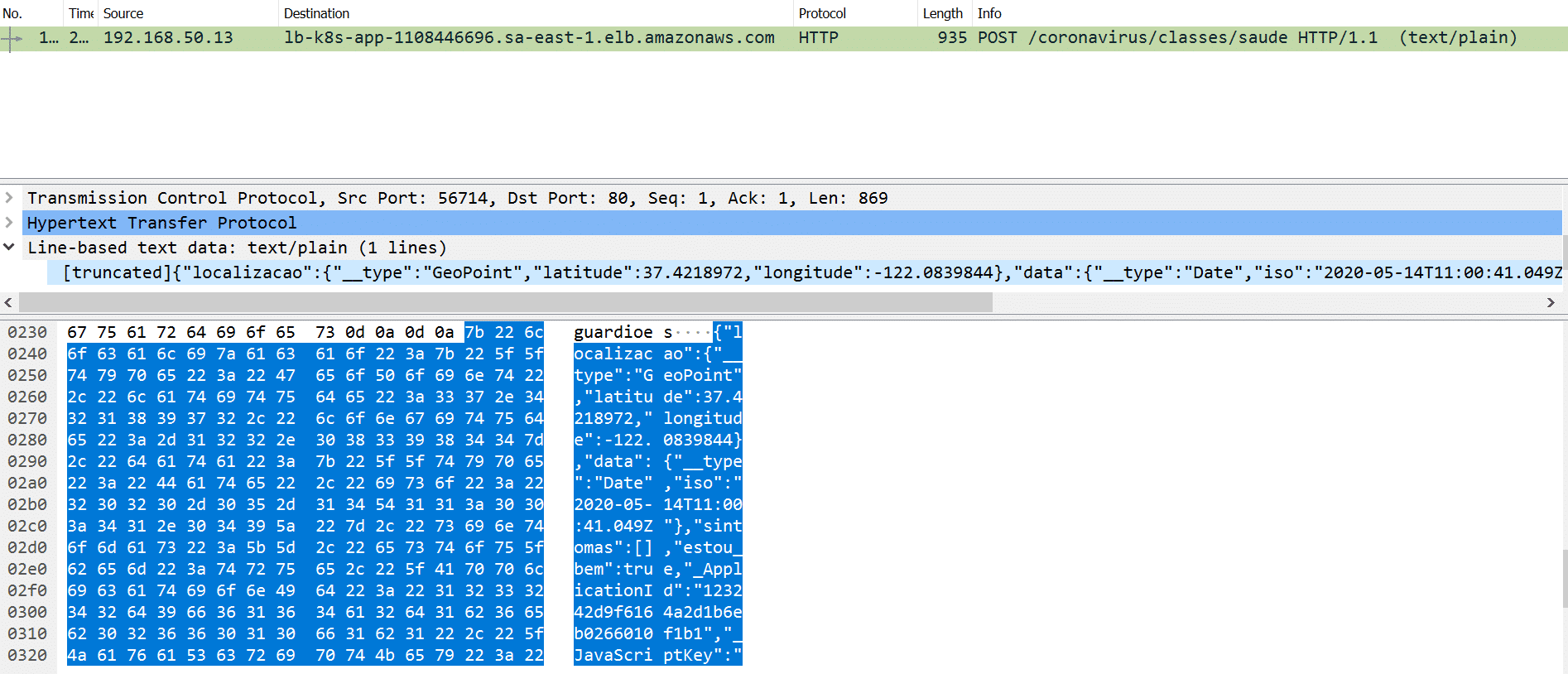

The application implements the following request:

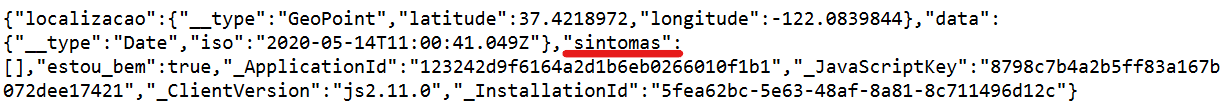

This means that the app sends geolocation together with a timestamp, and the key "sintomas" (symptoms) is passed in plain text.

Further analysis showed that the app allows the user to take a small survey. Based on the received responses, the app informs the user about the potential presence or absence of a coronavirus infection, and offers to contact doctors or call an ambulance if the result is positive.

The most troubling point here is that the survey data, including time, location, gender, age, and symptoms (the responses to the questionnaire), as well as the application ID, installation ID, and JavaScript key, is sent in plain text. Because this data describes the user's health status, sending it unprotected is poor practice.

Although the data can be considered anonymized to some extent, transmitting it unencrypted raises a privacy issue, because it contains information about the state of the user's health.
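One simple safeguard on the client side is to refuse to build a request for health data unless the destination uses HTTPS. The sketch below assumes that "plain text" here means the request travels without transport encryption. The function name, the URL, and the payload are hypothetical, and nothing is actually sent over the network.

```python
import json
import urllib.request
from urllib.parse import urlparse


def build_survey_request(url: str, survey: dict) -> urllib.request.Request:
    """Build a POST request for survey data, refusing unencrypted transports."""
    if urlparse(url).scheme != "https":
        raise ValueError("health data must only be sent over HTTPS")
    body = json.dumps(survey).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint and payload, for illustration only.
request = build_survey_request(
    "https://example.invalid/survey",
    {"sintomas": ["fever"], "age": 42},
)
print(request.get_method(), request.full_url)
```

Transport encryption protects the data in transit only. It does not limit what the receiving server or embedded third-party modules do with it afterwards.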

Conclusions

The response to the challenges of the epidemic must be proportionate and adequate, without unnecessarily violating the privacy of users. Contact tracing is an important step in the fight against the pandemic, but applications should only be used to control the spread of COVID-19. Apps can help us solve this problem, but we must not forget about privacy and human rights, as well as data protection, especially when it concerns personal location and movements, medical data and documents. In no case should the data be passed on to third parties.

To protect yourself, it is important to check the app's publisher, ratings, and reviews, and carefully read the permissions that the app requires during installation. If the app requests too many permissions, or permissions that are obviously not necessary for the app to work, you may need to reconsider installing it.

If problems are detected, government authorities should carefully review the applications and make corrections.

Image credit: lightsource / depositphotos

Constantin Bychenkov is CEO of Aligned Research Group LLC with a background in mathematics. He is the author of multiple academic publications in applied algorithms and medical image processing. Before founding Aligned Research Group he was a CEO at SMedX LLC, working with Fortune 500 companies, designing and implementing high-throughput, mission-critical solutions.
