For future computing, look (as always) to Star Trek

"The step after ubiquity is invisibility," Al Mandel used to say, and it’s true. To see what might be The Next Big Thing in personal computing technology, then, let’s try applying that idea to mobile. How do we make mobile technology invisible?

Google is invisible, and while the mobile Internet consists of far more than Google, it’s a pretty good proxy for back-end processing and data services in general. Google would love for us to do absolutely everything through its servers. That’s its goal. Given its determination and deep pockets, I’d say Google, or something like it, will be a major part of the invisible mobile Internet.

The computer on Star Trek was invisible, relying generally (though not exclusively) on voice I/O. Remember that she could also throw images up on the big screen as needed. I think that back in 1966 Gene Roddenberry went a long way toward describing mobile computing circa 2016, or certainly 2020.

Voice input is a no-brainer for a device that began as a telephone. I very much doubt that we’ll have our phones reading brainwaves anytime soon, but they probably won’t have to. All that processing power in the cloud will soon let our devices guess what we are thinking from context and our known habits.

Look at Apple’s Siri. You ask Siri simple questions. If she can answer in a couple of words, she does so. If the answer requires more than a few words, she puts it on the screen. That’s the archetype for invisible mobile computing. It’s primitive right now, but how many generations do we need before it becomes addictive? Not that many. Remember that the algorithmic Moore’s Law is doubling every 6-12 months, so at the faster rate two more years could bring us up to 16 times the current performance. If that’s not enough, then wait a while longer. 2020 should be 4096 times as powerful.
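The compounding behind those figures is easy to check. Here is a minimal sketch, assuming performance simply doubles once per fixed period (the hypothetical `speedup` helper is only for illustration): the multiple after `months` is 2 raised to the number of doublings.

```python
def speedup(months: float, doubling_months: float) -> float:
    """Performance multiple after `months`, if capability doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)

# Two years at the fast (6-month) and slow (12-month) ends of the range.
print(speedup(24, 6))   # 16.0
print(speedup(24, 12))  # 4.0
# 4096 = 2**12, i.e. twelve doublings: six years at the 6-month rate.
print(speedup(72, 6))   # 4096.0
```

The 16x figure assumes the 6-month end of the range; at one doubling a year, the same two years give only 4x.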

The phone becomes an I/O device. The invisible and completely adaptive power is in the cloud. Voice is for input and simple output. For more complex output we’ll need a big screen, which I predict will mean retinal scan displays.

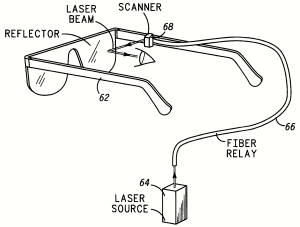

Retinal scan displays applied to eyeglasses have been around for more than 20 years. The seminal work was done at the University of Washington, and at one time Sony owned most of the patents. But Sony, in the mid-90s, couldn’t bring itself to market a product that shined lasers into people’s eyes. I think the retinal scan display’s time is about to come again.

The FDA accepted 20 years ago that these devices were safe. The developers actually had to show that, in a worst-case scenario where a user was paralyzed, eyes fixed open (unblinking) with the laser focused for 60 consecutive minutes on a single pixel (a single rod or cone), there would be no permanent damage. That’s some test. But it wasn’t enough back when the idea, I guess, was to plug the display somehow into a notebook.

No more plugs. The next-generation retinal scan display will be wireless and far higher in resolution than anything Sony tested in the 1990s. It will be mounted in glasses but won’t block your vision at all, though the lenses might be made opaque on demand with some sort of LCD shutter technology. For most purposes I’d like a transparent display, but to watch an HD movie I might like it darker.

The current resting place for a lot of that old retinal scan technology is a Seattle company called Microvision that mainly makes tiny projectors. The Sony patents are probably expiring. This could be a fertile time for broad innovation. And just think how much cheaper it will be thanks to 20 years of Moore’s Law.

The rest of this vision of future computing comes from Star Trek, too: the ability to throw the image to other displays, share it with other users, and interface through whatever keyboard, mouse, or tablet is in range.

What do you think?