Introducing NVIDIA Blackwell: Transforming AI with trillion-parameter models

NVIDIA has unveiled the Blackwell platform, which NVIDIA says enables organizations to build and run real-time generative AI on trillion-parameter large language models at up to 25 times lower cost and energy consumption than its predecessor, the Hopper architecture.

The Blackwell GPU architecture introduces six transformative technologies for accelerated computing, aimed at unlocking breakthroughs in various fields including data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing, and generative AI.

The platform is expected to be widely adopted by major cloud providers, server makers, and leading AI companies. It has been endorsed by the CEOs of several tech giants, including Sundar Pichai of Alphabet and Google, Andy Jassy of Amazon, Michael Dell of Dell Technologies, and Mark Zuckerberg of Meta.

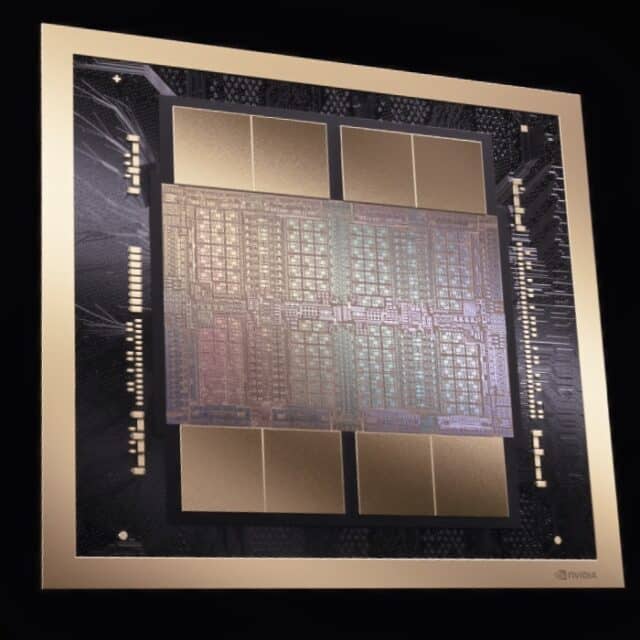

These six technologies are what NVIDIA calls the world's most powerful chip (packing 208 billion transistors), a second-generation Transformer Engine, fifth-generation NVLink, a RAS Engine for reliability, advanced confidential computing capabilities, and a dedicated decompression engine.

The NVIDIA GB200 Grace Blackwell Superchip, a key component of the Blackwell platform, connects two NVIDIA B200 Tensor Core GPUs to an NVIDIA Grace CPU over a 900GB/s NVLink chip-to-chip interconnect. For the highest AI performance, GB200-powered systems can be connected with NVIDIA's Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms, which deliver networking at speeds of up to 800Gb/s.

Blackwell-based products will be available from partners starting later this year. Cloud service providers such as AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will be among the first to offer Blackwell-powered instances, and server makers including Cisco, Dell, Hewlett Packard Enterprise, Lenovo, and Supermicro are expected to deliver a wide range of Blackwell-based servers.

The Blackwell product portfolio is supported by NVIDIA AI Enterprise, the end-to-end operating system for production-grade AI, which includes NVIDIA NIM inference microservices, AI frameworks, libraries, and tools that enterprises can deploy on NVIDIA-accelerated clouds, data centers, and workstations.

As organizations look to harness trillion-parameter large language models, Blackwell's promise of lower costs and energy consumption will be welcome news. However, the true impact of this technology remains to be seen as it rolls out across industries. What are your thoughts on NVIDIA's latest innovation? Share your opinions and insights in the comments below.