Researchers say traditional blame models don't work when AI causes harm

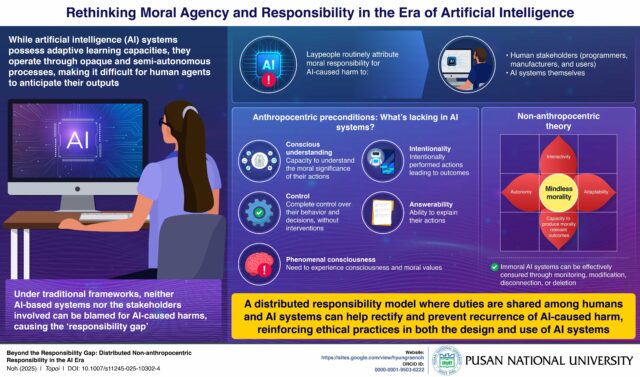

Artificial intelligence shapes our daily lives in all manner of ways, which raises a simple but awkward question: when an AI system causes harm, who should be responsible? A new study from South Korea's Pusan National University says the answer isn’t one person or one group, arguing instead that responsibility should be shared across everyone involved, including the AI systems that help shape the outcome.

The paper, published in Topoi, looks closely at the long-running responsibility gap. That gap appears when AI behaves in ways nobody intended, creating harm that can’t easily be pinned on the system or the people behind it.

Traditional ethical ideas lean on intention, free will, or conscious understanding, but AI has none of those traits. At the same time, developers and users often can’t fully predict how complex, semi-autonomous systems behave in the real world.

AI-mediated harm

Dr. Hyungrae Noh, Assistant Professor of Philosophy at Pusan National University, says this tension makes older frameworks hard to use. “With AI-technologies becoming deeply integrated in our lives, the instances of AI-mediated harm are bound to increase. So, it is crucial to understand who is morally responsible for the unforeseeable harms caused by AI,” says Dr. Noh.

The study explains that AI systems don’t have the mental capacities that traditional ethics expects from a responsible agent. They can’t understand the moral meaning of their actions, they don’t experience the world, they don’t form intentions, and they can’t explain why they act the way they do. Humans working with these systems also face limits, because the processes behind AI decisions are often complex, opaque, and hard to anticipate.

To move past this problem, the research looks at non-anthropocentric theories of agency. These ideas, supported by Luciano Floridi and others, suggest that responsibility should be distributed rather than assigned to one actor. On this view, developers, operators, users, and (in some cases) the AI systems themselves all contribute to outcomes, so they all share duties when something goes wrong.

Dr. Noh says this approach helps people respond more effectively to AI-mediated harm. “Instead of insisting traditional ethical frameworks in contexts of AI, it is important to acknowledge the idea of distributed responsibility. This implies a shared duty of both human stakeholders -- including programmers, users, and developers -- and AI agents themselves to address AI-mediated harms, even when the harm was not anticipated or intended. This will help to promptly rectify errors and prevent their recurrence, thereby reinforcing ethical practices in both the design and use of AI systems,” concludes Dr. Noh.

The study presents this shared model as a clearer, more practical way to manage AI risks as these systems become part of everyday decisions across society.