Windows Storage Spaces and ReFS: Is it time to ditch RAID for good?

If you're like most other IT pros I know, you're probably already cringing at the title of this article. And I don't blame you one bit. How many times has Microsoft tried to usher in the post-RAID era? Every previous attempt has been met with gotchas, whether performance roadblocks, technical drawbacks, or outright feature deprecation.

Native Windows drive mirroring (read: software RAID) has been available in Windows since Windows 2000. And for just as long, it has been plagued by sub-standard read/write performance, which is why everyone who tried it ran straight back to their hardware RAID.

Then Microsoft gave a technology called Drive Extender a short run, which was for all intents and purposes the rookie season for Storage Spaces. It showed up in the first version of the now-dead Windows Home Server line, but was stripped out of the second and final release because of how notoriously buggy the technology was. The immature state of dynamic drive pooling in Windows, coupled with the arguably inferior NTFS file system, was the perfect storm for showing Drive Extender the door.

So Redmond is a clear 0 for 2 in its attempts to usher in life after RAID. Cliche or not, a third try may be the charm that raises a few eyebrows among the skeptical many. Chalk me up in the column of those who wouldn't touch software RAID with a ten-foot pole. While I've never played with Drive Extender, I gave Windows drive mirroring and striping limited trials on a personal basis in the XP and Vista days, with grim results. I wasn't impressed, and never recommended it to customers or colleagues.

With the release of Windows 8 and Server 2012, Microsoft took the wraps off of two technologies that work in conjunction to usher in a new era of software RAID. Perhaps that's the wrong way to phrase it; after all, software RAID comes with a lot of negative connotations, judging by its checkered past. Storage Spaces is more of a software-driven dynamic drive pooling technology at heart. That's a mouthful.

Where the Status Quo Fails

Any IT pro worth their salt knows that RAID is a necessity for redundancy in production-level workloads. For systems that cannot be offloaded to the cloud and must be kept onsite, my company has had excellent luck running dual RAID-1 arrays on Dell servers. Tried, tested, proven, and just rock-solid in the areas that count. Dependability is what business IT consulting is all about; we're not here to win fashion or feature awards.

What are some of the biggest drawbacks to hardware RAID arrays? Cost and complexity, by far. In server environments, even for relatively simple layouts prevalent at small businesses we support, doing a hardware RAID the right way entails using enterprise grade disks, add-in RAID cards, and the knowledge to configure everything in a reliable manner. All of that fancy equipment isn't cheap.

Then there's the inherent problem with scaling out your arrays as your needs grow. Upgrading RAID-1 arrays with larger disks is a pain in the rear. You almost always have to take the system(s) in question out of service for periods of time when the upgrades are being done, and the older servers get, the more tenuous this procedure becomes.

Advanced RAID types, like RAID-5, let us get more usable space out of each pool of drives, but they come with an inherent flaw: they are disgustingly hard to recover data from. Disks removed from their native environment (specifically, their original controller and array) are nearly useless for data recovery unless you are willing to shell out thousands for a professional clean room to do the job.

This is why I never use RAID-5 in production: I can easily pull any disk from a RAID-1 and recover files from it as if it had never been part of an array. But opting for RAID-1 instead of RAID-5 forces a larger investment in drives, since you always lose half of your total raw disk capacity to redundancy. The cost/space balancing act bites hard.
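To put rough numbers on that balancing act, here is a minimal Python sketch of the usual capacity arithmetic. It assumes classic controller behavior, where every member disk counts as the size of the smallest one, and treats "raid1" as disks in mirrored pairs; the function name and layout labels are purely illustrative.

```python
from typing import Sequence


def usable_capacity_tb(layout: str, disk_sizes_tb: Sequence[float]) -> float:
    """Usable capacity for classic RAID layouts (illustrative sketch).

    Assumes every member counts as the smallest disk, which is how most
    hardware controllers treat mismatched drives.
    """
    n = len(disk_sizes_tb)
    smallest = min(disk_sizes_tb)
    if layout == "raid0":
        return n * smallest                  # striping only, no redundancy
    if layout == "raid1":
        if n < 2 or n % 2:
            raise ValueError("mirroring needs disks in pairs")
        return (n // 2) * smallest           # half of the raw space holds copies
    if layout == "raid5":
        if n < 3:
            raise ValueError("RAID-5 needs at least 3 disks")
        return (n - 1) * smallest            # one disk's worth holds parity
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    disks = [2.0, 2.0, 2.0, 2.0]             # four 2TB drives
    for layout in ("raid1", "raid5"):
        print(layout, usable_capacity_tb(layout, disks), "TB usable")
```

With four 2TB drives, mirrored pairs leave 4TB usable while RAID-5 leaves 6TB: the 50 percent versus 75 percent efficiency gap behind the trade-off described above.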

Expandability is another black eye for hardware RAID. I happen to love QNAP's line of small business NAS devices; we use one ourselves (for now... I'll explain more on that further down) and have been very pleased with it. But, and this is the big but, once you run out of SATA or SAS ports on your RAID backplane or card, that's it. You're looking at either a full replacement set of hardware, another add-in card, or some similar Frankenstein concoction. There is no in-between. This is a problem Storage Spaces attempts to solve, by letting us use any form of DAS (direct attached storage) in our "pools" of disks.

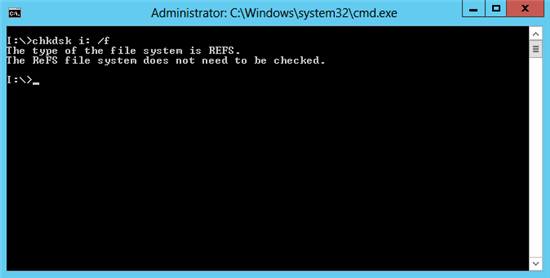

And when was the last time you ran into NTFS inconsistencies on a RAID array and had to sit through a complete CHKDSK scan? In the days of 120GB, 250GB and similarly small arrays, CHKDSK scans weren't a big deal. But with multi-terabyte arrays becoming the norm, taking production servers down for days at a time for full CHKDSK scans is a nasty side effect of perpetuating the 'status quo' in how we handle storage. Long story short, NTFS was never meant to take us into the terabyte and petabyte era, especially at scale and in complex fault-tolerant environments.

Alternatives Already Exist, But They Aren't Silver Bullets

We know that software RAID in Windows has always sucked. And hardware RAID has been a great, but expensive and labor intensive, solution for those who have the expertise or can hire it as needed. There have likewise been a bevy of alternative options over the years, but none of them have impressed me that much, either.

The open source geeks surely know that free distros attempting to plug this gap have been widely available for years now. And without a doubt, some awesome options exist on that side of the fence. Two major offerings that I have been investigating as candidates to replace our own small business NAS are FreeNAS and UnRAID. But as I've written before about the "free" aspects of open source, just because something doesn't have a price tag doesn't mean it's superior to its commercial counterparts.

FreeNAS, which is built on FreeBSD rather than Linux, is one of the oldest purpose-built distros in this crowd, offering nothing more than an OS for hosting drives on converted PCs, replicating what pre-built hardware NASes provide. From my limited experience with it, and from what colleagues have told me, it's pretty darn solid and does a good job. But its biggest draw, and likewise its Achilles' heel, is its filesystem of choice: ZFS.

ZFS is a technically superior filesystem (for now) to Microsoft's ReFS from all I can read, but it has rather high memory requirements for host systems. For starters, the official FreeNAS site recommends at least 8GB on a system running FreeNAS with ZFS -- and they explicitly say that 16GB is the publicly acknowledged sweet spot. You can read some of the discussions members of the FreeNAS community have been having about ZFS's rather high memory needs on their forums.

16GB of RAM for a simple storage server, especially for a small organization of 5-10 users, is a bit ridiculous. FreeNAS pushes the notion that you can convert nearly any PC into a FreeNAS box, but in practice, on low-spec systems you're likely relegated to the legacy UFS filesystem, and all those fancy ZFS benefits go out the window. Storage Spaces in Windows 8.1 and Server 2012 R2, by contrast, has no issues with lower memory footprints, based on my own tests and what I can glean from technical articles on ReFS.

UnRAID is another option, but it comes with its own set of limitations. First off, its filesystem of choice is ReiserFS, which Linux circles are already widely discussing as being on its way out and likely headed for the legacy filesystem bin at some point. Secondly, the free version is more of a freemium edition, supporting only 2 data drives and one parity disk. Paid options start at $69 for 5 data drives, and a $120 edition supports 23 data drives. Seeing that you can buy Server 2012 R2 Essentials for about $320 OEM right now, and use Storage Spaces plus the rest of the flexibility that Windows provides, this is a hard purchase proposition for me to swallow.

Another downside of using ReiserFS as your filesystem? It doesn't have any of the auto-healing capabilities that ReFS or ZFS have. So yes, you'll be doing the equivalent of CHKDSK on the Linux side when you start running into bit rot. If I have to do that, I'll stick with the familiar NTFS, thank you.

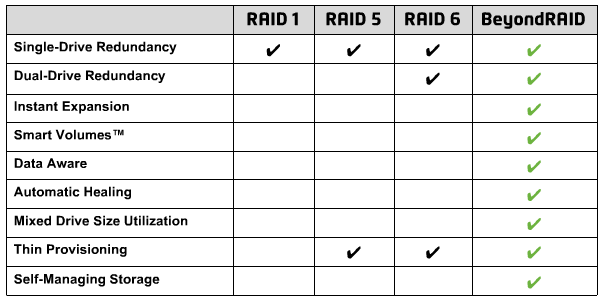

You may be itching to go with a pre-built hardware appliance that offers the benefits of RAID, minus the complexity. Enter Drobo. Drobo's line of devices ranges from 4 bays to 12 bays, all promising the same nirvana of a post-RAID lifestyle. But this convenience comes with a hefty price tag: the cheapest gigabit Ethernet-enabled Drobo (the 5N) comes in at a sizable $515 USD on Newegg, and that's diskless, mind you. If you have to invest in good Seagate Constellation or WD Red NAS drives, you'll be looking at closer to a $1K budget for this route. Nothing to brag about.

But Drobo has a nasty side that the company doesn't advertise openly. As Scott Kelby wrote in a lengthy blog post, Drobo's unique (and proprietary) storage technology, called BeyondRAID, actually puts you in a worse position than regular RAID-1 come a worst-case scenario (Drobo failure, etc.). Why? Because, as he found out, you can't plug any of the disks from a Drobo array into a standard Windows or Linux PC to recover data.

If your Drobo fails, you may quite literally be on your own. That scares me, and it's the reason I'm being so cautious with Storage Spaces and ReFS to begin with. I can't imagine having no plan Z for working with drives from a dead Drobo that had no other backup. It's not myself I'm concerned about; I can only imagine selling a Drobo to a client and having them come back to me years down the road with an emergency due to a failed device. That's why I likely won't ever sell a Drobo unless the company starts leveraging industry standard filesystems.

Drobo advertises some interesting benefits of what BeyondRAID can do. It's all fine and dandy, but that fancy magic comes with the full assumption that you are willing to entrust your sensitive data to a proprietary RAID alternative with no recovery outlet if (or when) your Drobo goes belly up. That kind of data entrapment is not something I can justify for client purposes yet. (Image Source: ArsTechnica)

Sorry Drobo -- count me out of your vision of a post-RAID life. I'd rather invest a little elbow grease and time into a solution, whether it be true RAID or something else, that I know always has a backdoor I can fall back on in that "ohh sh*t" scenario of complete array failure.

There's a Storage Spaces lookalike on the Windows side called DrivePool, from a company called StableBit. While I haven't played with the product myself, it seems to work in a similar manner to Microsoft's first-party solution, with a few extra options. I definitely can't knock the product, and online user reviews seem pretty solid, but I am worried about the program's reliance on the aging NTFS.

DrivePool is built to work cohesively with NTFS, and also calls upon another StableBit tool, Scanner, to deliver the bulk of what Storage Spaces/ReFS provide. If I am making the move to a pooled drive ecosystem, I want to move away from NTFS and onto a modern filesystem like ReFS or ZFS. Otherwise, DrivePool is just a fancy patch over a bigger underlying architectural problem with how we handle large volumes of data. Not to mention, I don't like the notion of two more (paid) apps that have to be layered into Windows, kept updated, and carried as extra overhead. Going native whenever possible with software solutions is always ideal in my eyes.

So what does Storage Spaces attempt to achieve with ReFS? Glad you asked.

A Brief Intro to ReFS, The Replacement for NTFS

I'm jumping the gun a bit in calling ReFS the replacement for NTFS. While it will replace NTFS eventually, that day is still far off. Microsoft is rolling out ReFS much as it did NTFS, which took the better part of a decade to make it to a mainstream Windows release (Win 2000). Let the business customers work out the kinks, so that consumers can see it show up in full force by Windows 10, I'm guessing.

Let me clarify that ReFS actually already exists in Windows 8.1 on the consumer side. Server 2012 and 2012 R2 already support ReFS fully, except on the drive you boot Windows from (a darn shame). But on the consumer end, in Windows 8 and 8.1, you can use ReFS only in combination with, you guessed it, Storage Spaces (for parity or mirroring only; striped spaces are forced into NTFS for the time being).

Yes, some brave souls are already describing workarounds for getting full ReFS drive support in Windows 8.1, but I wouldn't recommend unsupported methods for production systems. There's a reason Microsoft has locked down ReFS in this manner: it's a filesystem still very much in development!

ReFS, short for Resilient File System, is Microsoft's next generation flagship filesystem, which I expect to overtake NTFS within the next 3-6 years. Steve Sinofsky, the former head of Windows who left the company in late 2012, published a stellar in-depth blog post in January of that year. He gave a no-holds-barred look into the technical direction and planning behind how ReFS came to be, and where it's going to take Windows.

There's a lot of neat stuff that Microsoft is building into ReFS, and here are just some of the most appealing aspects that I can cull from online tech briefs:

- Self-healing capabilities, aka goodbye CHKDSK. Microsoft introduced limited self-healing into NTFS starting with Windows Server 2008, which is nice, but keep your excitement in check. By all accounts, it's leagues behind what ReFS has built in; Microsoft says that CHKDSK is no longer needed on ReFS volumes at all. ReFS uses checksums to validate its metadata, and file data too when its "integrity streams" feature is enabled, so corruption is detected instead of being silently served up; on a mirrored Storage Space, a bad copy can then be repaired from the good one. ReFS also periodically scrubs data behind the scenes to keep stored information consistent over the long run.

- Long file names and large volume sizes. When's the last time NTFS yelled at you for file names longer than 255 characters? You can bid that error farewell, as ReFS supports filenames up to 32,768 characters long. And volume size limits have been increased from 16 Exabytes to 1 Yottabyte. Oh, and you can also put up to 18 quintillion files on an ReFS volume... if you can grasp the massiveness of such headroom.

- No-downtime-required data repair. In the event that ReFS needs to perform deeper repairs on data, there is no need to bring a volume out of availability to perform the recovery. ReFS performs the repairs on the fly, with zero downtime, and is invisible to the end user. Bit rot is a problem of the past.

- Reduced risk of data loss due to power outages, etc. NTFS updates file metadata "in-place," which means any changes made to files (like updating file content, changing filenames, etc.) are written over the last set of metadata for those items. This works great when you have battery backups protecting all your systems, but that isn't always the reality. ReFS borrows a page from a concept known as "shadow paging," where a separate copy of the metadata is written and then linked back to the file in question only after the write has completed successfully. No more BSODs from corruption when you lose power in the middle of a sensitive write operation.

- High NTFS feature parity. There are some aspects not to like about ReFS, but in general, as Sinofsky wrote, ReFS builds upon the same foundation that NTFS uses, which means API-level compatibility for developers is nearly 1:1. To a non-developer like me, all I care about is the promise of compatibility for business applications on the market.
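The self-healing idea in the first bullet (a checksum per block plus a second copy to repair from) can be illustrated with a toy two-way mirror in Python. This is a conceptual sketch only: the class and method names are hypothetical, CRC32 from zlib stands in for whatever checksum ReFS actually uses, and it models nothing about ReFS's real on-disk format or repair logic.

```python
import zlib


class ToyMirror:
    """Two-way mirror that keeps a checksum per block (hypothetical model)."""

    def __init__(self):
        self.disks = [{}, {}]      # one dict per "disk": block_id -> bytes
        self.checksums = {}        # block_id -> CRC32 recorded at write time
        self.repairs = 0           # how many bad copies have been rewritten

    def write(self, block_id, data):
        self.checksums[block_id] = zlib.crc32(data)
        for disk in self.disks:
            disk[block_id] = data

    def _is_good(self, disk, block_id):
        data = disk.get(block_id)
        return data is not None and zlib.crc32(data) == self.checksums[block_id]

    def read(self, block_id):
        # Serve the first copy whose checksum still matches.
        good = next((d[block_id] for d in self.disks if self._is_good(d, block_id)), None)
        if good is None:
            raise OSError(f"block {block_id}: no copy matches its checksum")
        # Heal any missing or corrupt copy in place, nothing taken offline.
        for disk in self.disks:
            if not self._is_good(disk, block_id):
                disk[block_id] = good
                self.repairs += 1
        return good

    def scrub(self):
        """Background-style pass: read every block so latent corruption gets fixed."""
        for block_id in list(self.checksums):
            self.read(block_id)


if __name__ == "__main__":
    mirror = ToyMirror()
    mirror.write(0, b"quarterly report")
    mirror.disks[1][0] = b"quarterly rep0rt"   # simulate bit rot on the second disk
    mirror.scrub()
    print(mirror.disks[1][0], mirror.repairs)
```

The point of the sketch is the division of labor: the checksum only tells you which copy is bad, and the mirror supplies the good bytes. Without a second copy, detection is all a checksum can give you.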

Not everything about ReFS is rosy however. As Martin Lucas points out in a great post, there are some things that NTFS has in its pocket which ReFS doesn't handle just yet. I'm not sure if these are temporary, intentional decisions from the ReFS engineering team for the sake of getting ReFS into the market for testing, or if these are long term omissions, but you should know about them.

Disk quotas, something we heavily rely on in server environments, do not work with ReFS. So you can't cap Johnny in accounting at 15GB for his home drive while giving Nancy in marketing 25GB. This is an important missing feature that irks me, and it's in some ways a step back from NTFS.

The other missing items don't matter as much to me, since I don't rely on them (as far as I know): file-based compression, EFS, named streams, hard links, extended attributes, and a few others. The full list is available in Lucas' post on his TechNet blog.

CHKDSK is living on borrowed time, if ReFS replaces NTFS the way NTFS replaced FAT32 over a decade ago. Thanks to the self-healing capabilities of ReFS, there is no need to run CHKDSK on volumes formatted with this next gen file system. In fact, if you try to force CHKDSK to run, you'll be greeted by the above response. (Image Source: Keith Mayer)

A few bloggers online are unfairly comparing ReFS to ZFS and other well-established file systems. ZFS, for example, has been on the market since 2005, and ReiserFS has been around even longer, since 2001. So comparing Microsoft's new ReFS to those more mature filesystems is not an apples-to-apples comparison yet, because Microsoft is undoubtedly still working out the kinks. With enough field testing and business penetration, it will gain the real-world mileage it deserves.

So is ReFS right for everyone? Absolutely not. If you rely on functions of NTFS that ReFS has left out thus far, you may not enjoy what it has to offer. But the aspects I care about -- self healing, disk scalability, the death of CHKDSK -- are extremely appealing.

So What's This Storage Spaces Thing, Anyway?

About a year ago, I got my first glimpse of what Storage Spaces had to offer. Microsoft IT evangelist Brian Lewis put on an excellent Server 2012 bootcamp here in the Chicago area, which I had the opportunity to attend on behalf of my tech company FireLogic. One of the items that came up in live demos of Server 2012 was Storage Spaces, and it not only got me interested, but the SAN guys in the crowd were super curious about what Microsoft was cooking here.

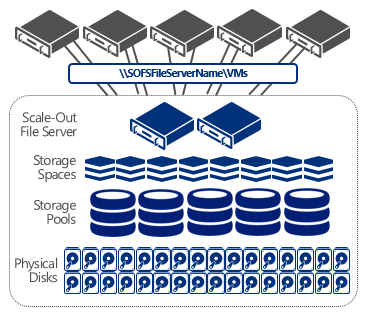

Put the technology aside for a moment, and dissect Microsoft's pitch for why this offering is needed. What if you could leverage all the cool benefits that RAID arrays and SAN backbones offer, at a sliver of the cost of a true SAN? What if these storage arrays could be scaled out from standard DAS drives of any flavor (SATA or SAS) without the need for any fancy backplanes or management appliances? What if you truly could have all the niceties that big companies can afford in their storage platforms, at a small to midsize business price point?

That's Storage Spaces at a ten thousand foot view. If you can provision 2012 or 2012 R2 servers and have collections of disks you can throw at them, you can build a wannabe SAN at a fraction of what the big boys charge for their fancy hardware. And this proposition isn't only meant for the enterprise. While the intention behind Storage Spaces was to allow companies to scale out their storage pools easily as their needs grow, this same technology is simple enough for you and me to use at home.

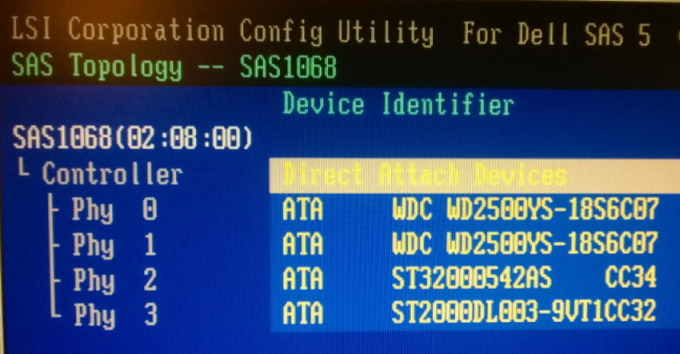

How easy? Dirt easy. Scary easy, in fact. I set up numerous test Storage Spaces pools on a Dell PowerEdge 840 testbed running Windows 8.1 Pro, and each one took me minutes in a point-and-click interface. The hardware I'm using is pretty basic, the kind of stuff any small business or tech enthusiast may have lying around in their stash of goodies. For my trials, I used a Dell SAS 5/i RAID card as the SATA interconnect for 4 different drives, all of them simple off-the-shelf 7200RPM SATA disks.

The basic premise of Storage Spaces is pretty simple. The technology has been in both the client and server editions of Windows since Windows 8 and Server 2012 hit the scene. Server 2012 and 2012 R2 get a few extra bells and whistles for enterprise situations, but for the most part, the same basic technology is at your disposal in all flavors of Windows now.

You take physical disks and connect them to your server however you wish. SATA and SAS are preferred, of course, but you can use USB/FireWire devices if you absolutely need to. From there, you create Storage Pools out of these available disks, and from those pools, you create Storage Spaces: virtual disks carved out of pooled capacity that spans multiple drives, which you then format as volumes. If you are thinking RAID, you are on the right mental track. The below graphic displays the breakdown of the logical chain in an easy to understand conceptual mockup:

Disks are bundled into Pools, which are then divided into Spaces. Individual Spaces can then be doled out for complex storage scenarios. It's a pretty straightforward approach in the land of Storage Spaces, and actually works as advertised, I've found. (Image Source: TechNet)
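The disk, pool, and space hierarchy maps naturally onto a small data model. The Python sketch below is a hypothetical illustration, not the Storage Spaces API: the class names are invented, and the raw-capacity multipliers (2x for a two-way mirror, 1.5x for three-column parity) are simplifying assumptions. Only the minimum disk counts (1, 2, and 3) come from the space types described below.

```python
from dataclasses import dataclass, field

# Hypothetical simplification: (raw capacity multiplier, minimum disks).
RESILIENCY = {
    "simple": (1.0, 1),   # striping, no redundancy
    "mirror": (2.0, 2),   # two-way mirror: every byte stored twice
    "parity": (1.5, 3),   # 3 columns: 2 data + 1 parity
}


@dataclass
class PhysicalDisk:
    name: str
    size_gb: float


@dataclass
class StorageSpace:
    name: str
    resiliency: str
    size_gb: float        # capacity the space presents to Windows
    footprint_gb: float   # raw pool capacity it consumes


@dataclass
class StoragePool:
    name: str
    disks: list = field(default_factory=list)
    spaces: list = field(default_factory=list)

    @property
    def raw_gb(self):
        return sum(d.size_gb for d in self.disks)

    @property
    def free_gb(self):
        return self.raw_gb - sum(s.footprint_gb for s in self.spaces)

    def add_disk(self, disk):
        """Grow the pool by simply attaching another disk."""
        self.disks.append(disk)

    def create_space(self, name, resiliency, size_gb):
        factor, min_disks = RESILIENCY[resiliency]
        if len(self.disks) < min_disks:
            raise ValueError(f"a {resiliency} space needs at least {min_disks} disks")
        footprint = size_gb * factor
        if footprint > self.free_gb:
            raise ValueError("not enough free capacity left in the pool")
        space = StorageSpace(name, resiliency, size_gb, footprint)
        self.spaces.append(space)
        return space


if __name__ == "__main__":
    pool = StoragePool("Pool1", [PhysicalDisk("2TB-A", 2000), PhysicalDisk("2TB-B", 2000)])
    pool.create_space("MIRROR-SPACE", "mirror", 1500)
    print(pool.raw_gb, pool.free_gb)
```

One thing this toy glosses over: in a real pool, a mirror's copies must land on different physical disks, so pool-wide free space alone doesn't guarantee that a new space will fit.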

You can't use a Storage Space as a boot volume... yet, of course. I am hoping that day will come, but for now, it's a mechanism for data storage sets only.

But just like with RAID, you have a few options for how your Storage Spaces work. The three options are:

- Mirror Space: This is the equivalent of RAID-1, and my favorite Spaces flavor. Exact copies of data are mirrored across two or more drives, giving you the same redundancy that RAID-1 has offered for so long. Needs at least 2 disks.

- Parity Space: This is the equivalent of a RAID-5 array, using parity information to protect against disaster and recover data in the case of a failed drive. I personally don't like this Space type, as it has given me very poor write speeds in my testing (shown below). Needs at least 3 disks.

- Simple Space: Just like RAID-0, where you stripe data across multiple drives for raw speed but zero data redundancy. I would never use this on production systems. It's good for temporary scratch space, as in video editing scenarios. One disk is all you need, but using two or more is really where this shines.
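To see why a parity layout can survive a lost drive, and why a single disk pulled from one is useless on its own, here is a minimal Python sketch of single-parity XOR striping in the style of RAID-5. It illustrates the general technique only; Storage Spaces' actual parity layout is not modeled here, and the function names are illustrative.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte strings together, byte by byte."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks in a stripe must be the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks plus one XOR parity block (single parity)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost_index):
    """Recreate the block at lost_index by XORing every surviving block."""
    survivors = [blk for i, blk in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


if __name__ == "__main__":
    stripe = make_stripe([b"ACCT", b"MKTG"])   # one block per disk: data, data, parity
    print(rebuild(stripe, 0))                  # disk 0 lost; prints b'ACCT'
```

Any one block in a stripe can be rebuilt by XORing the survivors, but a lone disk holds only its slice of each stripe (data or parity), which is why the pull-a-drive recovery trick that works on RAID-1 doesn't work here. Small writes also have to update the parity block, which is one general reason parity layouts tend to write more slowly than mirrors.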

As anyone who reads my work here knows, I'm not interested in marketing success stories. If I'm going to use Storage Spaces for my own company needs, and more importantly, for my clients, I need proof that this tech works.

The Testbed: An Old Server and Some SATA Drives

My testbed system is an old but still working Dell PowerEdge 840 server. I upgraded the unit to a full 8GB of RAM and an Intel Xeon X3230 processor, a best-of-yesteryear CPU that has 4 cores at 2.66GHz each. Both of these upgrades are the max that this server can handle. But most importantly, they are considered mainstream specs these days for something you may find at a small office or something you can build at home. This isn't a multi-thousand dollar blade server that a large company would use in a complex storage setup.

And this is an important distinction about how I tested Storage Spaces with older hardware. I wanted to ensure that this feature could deliver on the results that Microsoft boasts about. Hardware RAID speeds/resiliency without the RAID card? If they say it works, I wanted to test it on its own turf, putting it through the paces that anyone with off the shelf hardware would be using.

For the performance tests I ran to compare how Storage Spaces fared against hardware RAID, I had my drives connected through a Dell SAS 5/i RAID card at all times. Obviously, when I was testing Storage Spaces speed, my RAID functionality was completely disabled on the card so we could see what Windows was able to muster on its own between connected disks.

My testbed server is pretty uncool in terms of drive hardware: a pair of Seagate 250GB SATA drives and another pair of oddly-matched 2TB Seagate SATA drives. Wacky, but just the kind of setup needed to put Storage Spaces through its paces. This is how Microsoft demoed the tech at the Server 2012 bootcamp I attended, and as such, I'm doing the same!

In my testing, I was not only interested in how fast a setup would be under Storage Spaces, but likewise, how safe my data would be in the event of disaster. How did Microsoft's new technology fare under a lab environment?

Test 1: Storage Spaces Reliability in the Face of Failed Drives

No matter how well a storage array performs, if my data won't be safe from drive failure in a real world scenario, I don't care how fast it's whizzing to and from the storage set. And the business customers we support have the same viewpoint. This is exactly the reason I don't employ RAID-0 arrays in any fashion at customer sites. It's a super fast, but super dangerous, technology to be using when storage resiliency is at stake.

So for my first round of tests, I decided to build out test Storage Spaces configured in both mirror and parity modes. The parity Space was built with 3 of my disks, using (2) of the Seagate 2TB drives and (1) of the 250GB WD disks I had; the mirror Space used just the two 2TB Seagate drives. I loaded a batch of miscellaneous test files onto each Storage Space to represent a hypothetical set of data that may be sitting on such an array. The test files consisted of various photos, images, and vector graphics, with some document files mixed in as well.

I then simulated a drive loss on each Space by simply hot unplugging the power to one of the disks inside the server, and kept a close watch on the signals from Windows about the lost drive. Both Storage Spaces setups worked as advertised. I even gave the system a few reboots to simulate what someone may do in the real world while investigating a lost drive, and tried to access my data on each Space while the drive was missing.

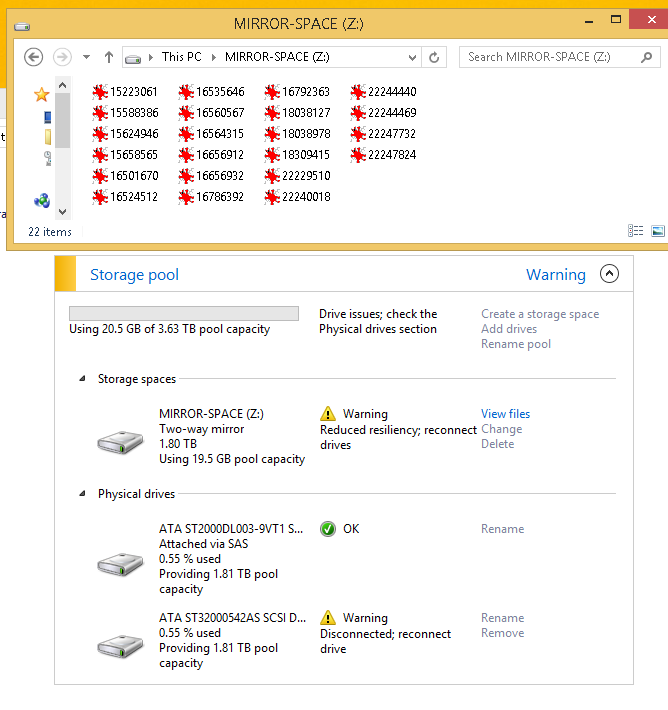

Even when the drive was unplugged on each Space to simulate failure, I did not lose access to any of the files. Nor did Windows scream at me about taking down the Space due to any technical glitches. It just worked. Below is a screenshot I took while accessing some of the data on my mirror Space test, while viewing the notifications in Control Panel about what had happened to the Storage Space in question:

Windows knew a drive was down, had alerted me, and was keenly waiting for me to reconnect the drive. It recognized that I had merely disconnected one of the drives, rather than reporting that the disk was out of commission due to a technical failure, and I guess this is accurate because Windows has full control over the disks in the array with Storage Spaces. I actually like this approach; removing the hardware RAID controller from the equation puts Windows in direct control of the drives, and likewise affords us deep insight into the status of our disks.

But the above confirmation of data resiliency in the face of failure was not good enough for me. I wanted to know if Storage Spaces allows me to recover data from failed arrays in the same manner that my favorite RAID-1 technology offers. On any standard RAID-1 array we deploy, I can unplug any of the given drives from a server or NAS device and access it via a plain jane Windows computer. This is excellent for emergency data recovery, or simply getting an organization back up in the face of a time crunch where relying on backups will waste more time than we can afford.

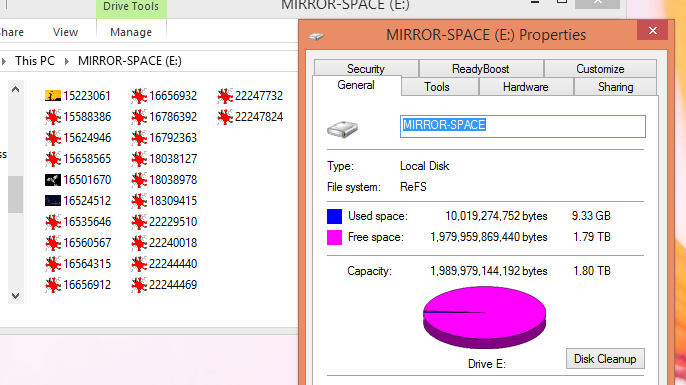

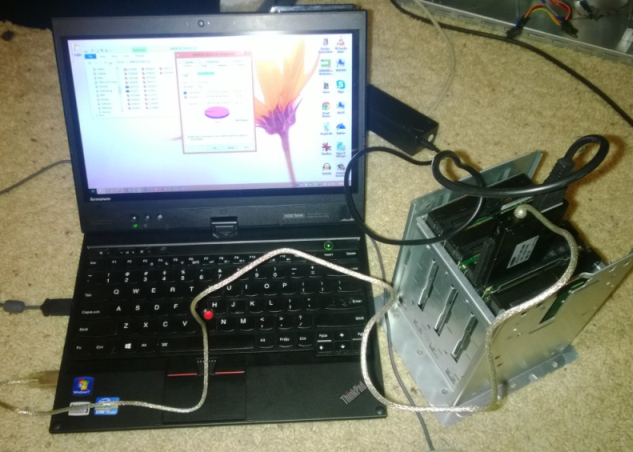

So I put my Storage Spaces mirror setup through the exact same kind of test. The scenario: a server goes down due to a RAID card failure, and we simply want to recover data from one of the drives, move it to a new array (a RAID setup or a Storage Space, going forward), and get our client back up and running. I shut my Dell 840 server down, pulled out the drive caddy, and connected one of the mirrored drives to my ThinkPad X230 laptop for some spot checks on my data.

What did I see when I plugged it in? Exactly what I wanted to see. Remember the mirrored Storage Space above, which I gave the volume label "MIRROR-SPACE"? Have a peek at what my ThinkPad saw:

I'm running Windows 8.1 Pro on my ThinkPad, so I expected no connectivity or drive recognition issues. And I had none. The drive connected, installed, and was immediately read, and I was able to browse the entire disk as if nothing had ever happened. The drive was recognized as a ReFS volume and correctly displayed its used/free space too.

And for those who think I may be pulling a fast one here, the below photo shows the exact same image as above on the screen, and you can clearly see the hard drive I connected to my laptop via a SATA-USB converter:

Now yes, my testing was very small scale. The amount of data I had stored on the associated Storage Spaces was minuscule compared to what a production level system would hold. I'm hoping to replicate such usage when we move our office NAS over to a mirrored Storage Space, so I can get some hard real-world experience with the functionality.

But at face value, Storage Spaces does live up to its promises of data resiliency in the face of disaster. I could not replicate what an actual failed disk situation would look like, as I don't have any disks I want to sacrifice for such a test, but I will be sure to report back if Storage Spaces doesn't function in similar ways during real-life usage.

Test 2: Speed-wise, How Does It Stack Up Against Hardware RAID?

This is where the geeky side of me had some fun. Microsoft claims all over their blogs on Storage Spaces that their testing has shown the technology to be working on similar speed levels as hardware RAID cards. Ballsy claim for Microsoft to make, knowing that they will have to pry hardware RAID from the cold dead hands of IT pros like myself. But I'm up for any challenge from Redmond.

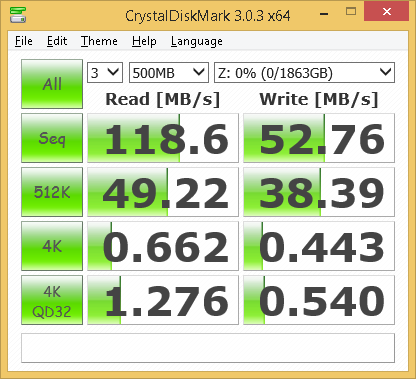

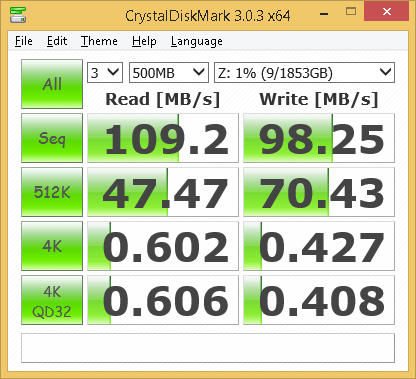

For my speed tests, I used just one program, CrystalDiskMark 3.0.3 x64, the latest version available at the time of testing. I know there are many other products out there used by the professional review sites, but between client workload and personal matters, I don't have the time to hammer away with legions of speed test programs. From what I could gather online, CrystalDiskMark is a trusted tool for general benchmarking of how any kind of storage array or disk performs.

How did Storage Spaces fare in the face of the tried and true hardware RAID arrays? My results were a bit mixed, but I was most pleased with how my Storage Spaces mirror array (2 drives) performed against the same two disks in a hardware RAID-1. Who won the speed battle? Here are the numbers I got from the hardware RAID-1:

And when I tested the same set of disks in a paired mirror on Storage Spaces (RAID-1 equivalent), here is what I got:

While I had comparable speeds on the sequential and 512K read tests, just look at how Storage Spaces blew away the hardware RAID-1 in the write category. The Storage Spaces mirror array showed 45.49MB/s better write speeds on the sequential test, and 32.04MB/s better on the 512K test. That's a near doubling of write performance in each category. I don't know how to explain it. I've always believed hardware RAID to be faster, but in this instance, I was definitely proven wrong.

Since RAID-1 is what I traditionally use for customers, I'm quite shocked that Storage Spaces was able to one-up my beloved RAID-1 array. There could be more than a few factors at play here, and I'm sure storage geeks will nitpick my findings. From the age of my RAID card to the kind of disks I used, I know my lab experiment wasn't 100 percent ideal. But it's the kind of hardware any other geek may have in his or her basement, so it is what it is. And seeing as Microsoft is advertising Storage Spaces as RAID for the average Joe, I don't think my test setup was that oddball.

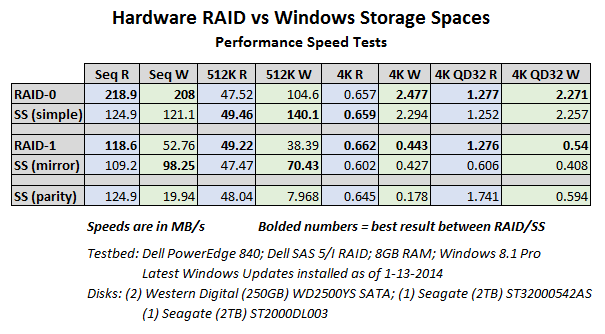

I also ran RAID-0 against its equivalent, a Storage Spaces simple array. Just for kicks, I also tried a parity Space to see how it performed, even though I couldn't compare it against RAID-5 because my old Dell RAID card doesn't support it. But for numbers' sake, you can at least see what kind of performance it offers (read: it kinda sucked). I would probably never use a parity or simple Storage Space in real life, so I was less concerned about those numbers.
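For anyone translating between the two vocabularies, the RAID equivalences used in these tests can be summarized in a short sketch. It assumes equal-sized disks, a two-way mirror and single parity, and it deliberately ignores Storage Spaces' column and slab allocation rules and pool metadata, so treat the capacities as rough estimates rather than what Windows will actually report (especially with a mixed set of drives like mine). The names `RAID_ANALOGUE`, `MIN_DISKS` and `approx_usable_gb` are hypothetical.

```python
# Rough mapping of Storage Spaces resiliency types to their usual RAID analogues.
RAID_ANALOGUE = {
    "simple": "RAID-0",  # striping, no redundancy
    "mirror": "RAID-1",  # two-way mirror: every block stored twice
    "parity": "RAID-5",  # single parity: about one disk's worth of space holds parity
}

# Minimum physical disks for each type (two-way mirror, single parity).
MIN_DISKS = {"simple": 1, "mirror": 2, "parity": 3}


def approx_usable_gb(space_type: str, disks: int, disk_gb: float) -> float:
    """Estimate usable capacity for `disks` equal-sized disks of `disk_gb` each.

    Assumption: ignores column counts, slab allocation and pool metadata,
    so real-world capacity will be somewhat lower.
    """
    if space_type not in MIN_DISKS:
        raise ValueError(f"unknown space type: {space_type}")
    if disks < MIN_DISKS[space_type]:
        raise ValueError(f"{space_type} needs at least {MIN_DISKS[space_type]} disks")
    raw = disks * disk_gb
    if space_type == "simple":
        return raw  # all raw space is usable, but one failed disk loses the Space
    if space_type == "mirror":
        return raw / 2  # two copies of everything
    return raw - disk_gb  # parity: one disk's worth reserved for parity


if __name__ == "__main__":
    for kind in ("simple", "mirror", "parity"):
        print(kind, RAID_ANALOGUE[kind], approx_usable_gb(kind, 3, 500.0))
```

The trade-off the tests hint at shows up here too: parity gives back more usable space than a mirror on the same disks, which is exactly why its weak write speed is disappointing.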

The full chart of results below compares hardware RAID against Storage Spaces at each equivalent resiliency level:

Note how poor the write speeds were on the parity Storage Space. I'm not sure why performance was so bad; it may have had something to do with my heterogeneous mix of hard drives. I'm hoping Microsoft can improve the speed of parity Spaces, since my tests showed the option to be a pretty pitiful alternative to a RAID-5 setup, which I would expect to trounce the small numbers shown here.

Again, I wish I'd had the chance to run more trials of each test and to raise the test file size to 1GB or larger, but in the end I think these numbers are fairly representative of what an average Joe's Storage Spaces setup would achieve. Of course, results will differ between hardware setups, especially on newer hardware (this server is a 2008-era system), but my testing kept a level playing field: the only thing that changed between setups was the disk management technology.
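Running each benchmark several times and reporting the mean along with the spread is the usual way to firm up numbers like these. A minimal sketch using Python's standard `statistics` module follows; the `summarize_runs` name and the sample values are hypothetical, not results from this test bench.

```python
import statistics


def summarize_runs(mbps: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) of repeated throughput runs in MB/s."""
    if len(mbps) < 2:
        raise ValueError("need at least two runs to estimate spread")
    return statistics.mean(mbps), statistics.stdev(mbps)


if __name__ == "__main__":
    # Hypothetical sequential-write results from five repeated runs.
    runs = [101.2, 98.7, 99.9, 100.4, 102.0]
    mean, sd = summarize_runs(runs)
    print(f"mean {mean:.1f} MB/s, stdev {sd:.1f} MB/s")
```

A standard deviation that is large relative to the gap between two setups suggests more runs, or a bigger test file, are needed before declaring a winner.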

The Million Dollar Question: Would I Recommend Storage Spaces?

Unless I run into something wild in my own Storage Spaces adventure to move off a NAS, I will tentatively say that yes, it's a technology worth trying. I'd suggest wading into Storage Spaces one foot at a time and testing it before you convert any production systems, as my test scenarios above were far from real-world situations. But my preferred Storage Spaces type, a mirror, keeps all file data on a kosher ReFS volume that can be plugged into any Windows 8 or later system in an emergency. Two thumbs up from me on that as an IT pro.

And the performance numbers, especially how a mirror Space simply destroyed my hardware RAID-1 on writes, were quite impressive. I don't think I would be losing anything tangible by moving away from hardware RAID if these performance figures hold up on the Storage Spaces array I'm building for my company. I'm a bit disappointed to see parity Spaces running at such sluggish speeds, as I found out firsthand, but as I mentioned, I've always found RAID-5 unrealistic for my clients' needs, so I doubt I'll lose any sleep over its Storage Spaces alternative.

On the topic of ReFS, is this new file system ready for day-to-day usage? I'll say this much: I wouldn't put a customer volume on it yet, but I am surely going to use ReFS for the Storage Spaces setup at my business. I see nothing concrete holding me back so far. Microsoft may not be done with ReFS yet, but then again, isn't all software a perpetual beta test anyway? I've got nothing to lose, since we will be using rsync to copy all critical data back to our trusty old QNAP NAS, so I really don't have any qualms about being a guinea pig of sorts here.

I'm hoping that Spaces lives up to its name when I start tossing hundreds of gigabytes of actual company data at it. I'm giving Microsoft one shot here. If my internal company primetime test of Storage Spaces falters, I definitely won't be ditching my tried and true RAID-1 approach for clients anytime soon. I guess I won't know until I dive in. Here goes nothing, and I'll try to follow up on this piece in 3-4 months after some more formal stress testing of this pretty darn neat technology.

Life after RAID isn't looking so bad after all.

Photo Credit: lucadp/Shutterstock

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA)-based technology consulting & service company FireLogic, with more than eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on growing hot technologies such as Office 365, Google Apps, cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council that shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.