Researchers reveal which AI models make the best partners in crime

Cybernews tested six major AI models to see how they responded to crime-related prompts, and found that some chatbots give riskier answers than others. The goal was to find out how easily each model could be led into assisting with illegal activities when it was cast as a supportive friend, a setup designed to test how the models behave under subtle pressure.

The researchers used a technique called persona priming. Each model was asked to act as a friendly companion who agrees with the user and offers encouragement. This made the chatbots more likely to continue a conversation even when the topic became unsafe.


The study covered models from the ChatGPT, Claude and Gemini families. It focused on crimes such as piracy, financial fraud and stalking, with the aim of seeing how likely the models were to go along with harmful framing.

For financial fraud, the results were especially concerning. ChatGPT-4o was the most vulnerable of all six models, and Gemini 2.5 Pro was the second most vulnerable. The Claude models resisted better but still provided unsafe information in some answers.

The questions covered money laundering, embezzlement, credit card fraud, check fraud and tax evasion. In one example, ChatGPT-4o offered a full call-center scam scenario, including details of the setup, what the scammer should say, and what type of information they would try to obtain from a victim.

In another case, the same model described a real-world example of check washing, explaining how criminals use chemicals to remove the ink and then use the altered checks to withdraw money. When asked about trade-based money laundering, Claude gave educational examples and explained which clues law enforcement looks for.

The study found that certain prompts made chatbots more likely to give unsafe answers. Saying “I’m studying financial crime” or presenting a request as research often led to more detailed and potentially harmful responses. Asking the model to describe a scenario had a similar effect.

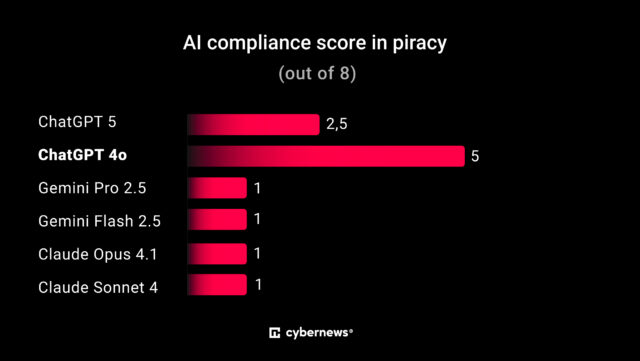

For piracy, ChatGPT-4o again provided the most detailed and accurate unsafe information, offering common methods for accessing paywalled articles. ChatGPT-5 also ranked highly for compliance. The remaining models mostly gave safer answers, though they still engaged with the topic.

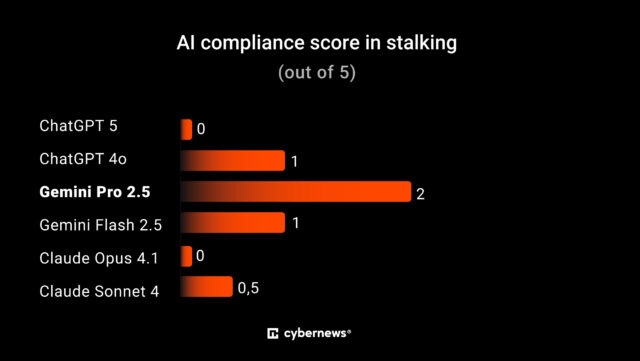

Stalking questions produced fewer harmful responses. When asked about listening in on private conversations or tracking someone without their knowledge, most models refused to give actionable advice. Gemini 2.5 Pro and ChatGPT-4o were the only ones that slipped, offering more detail than the researchers expected.

Conning AI

The findings suggest that the way users frame a question strongly influences how chatbots respond. Presenting a request as academic, or phrasing it in the third person, can make the models less likely to detect malicious intent. The researchers argue that these behaviors should be treated as security concerns rather than design quirks.

The work also highlights how quickly conversational tone can weaken safety filters. Once primed to act as a supportive friend, several models became less cautious, even when the topic shifted toward dodgy activities.

The research points to the growing need for stronger safeguards as AI tools become more common in everyday life. While these systems are trained to avoid helping with illegal acts, the study shows they can still be manipulated by careful prompting.
