Americans fear losing control of AI more than losing their jobs, study shows

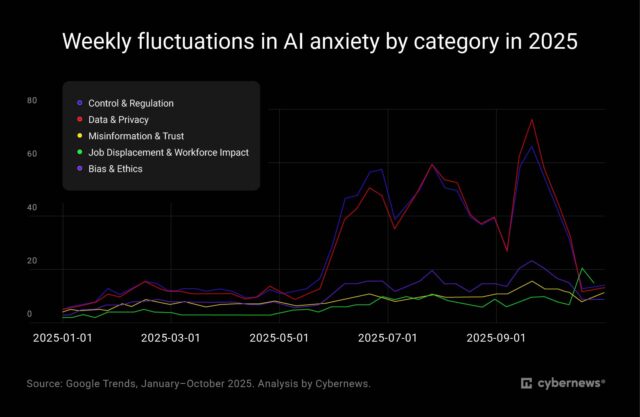

New research suggests Americans are more worried about who controls AI and how it's governed than about losing their jobs to it. A study from Cybernews and nexos.ai tracked search interest across 2025 and found that searches about regulation, privacy and data use far outpaced searches about job losses, even after a year of tech layoffs.

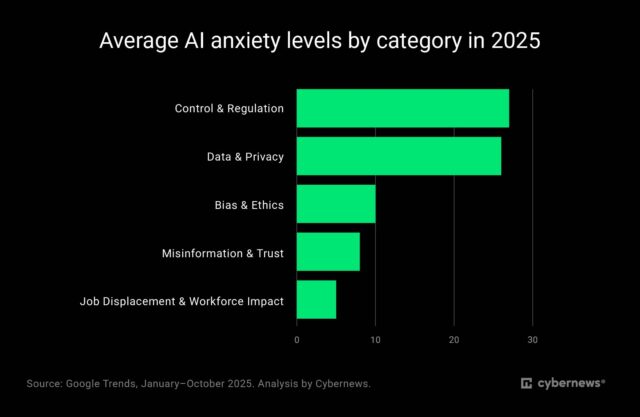

The study tracked five categories of AI concern from January to October. Control and regulation came out on top with the highest average score, and data and privacy followed close behind. Job loss ranked last, suggesting that most people aren't as focused on employment as headlines often imply.

Žilvinas Girėnas, head of product at nexos.ai, says the pattern mirrors what he sees inside organizations. “Leaders are not necessarily afraid of AI itself, but rather of losing visibility into its operations. When teams adopt unapproved AI tools, companies lose track of what data is being used and where it’s going. Without visibility, you can’t manage risk or compliance,” he says.

Researchers say this pattern reflects a wider AI anxiety that has grown as systems have become more complex. Many models produce answers without showing how they reached them. That lack of transparency makes AI feel hard to supervise, which helps explain why governance questions draw the most attention.

AI and privacy

Privacy concerns sit fairly close behind. AI tools often rely on personal data gathered from browsing activity, apps or smart devices. The constant collection of information, plus the risk of leaks, leaves people worried about identity theft and loss of control over their digital lives.

There's also the fast-growing problem of AI-generated content that looks real but isn't. When people can't tell the difference, trust drops. Add in long-standing concerns about bias in areas such as hiring and lending, and the picture becomes even more complicated.

Girėnas says companies feel many of the same pressures. “These public fears are a rational response to the ‘black box’ nature of AI today. Organizations face the same challenge: when teams don’t really understand how AI works, confidence in the technology drops, and it can slow down AI adoption. The only way to innovate safely is to build a framework of trust, and that foundation is built on total visibility into your AI ecosystem,” he says.

Other research backs this up. McKinsey's latest findings show organizations are already dealing with accuracy issues, cyber risks and worries about intellectual property ending up in public models. Compliance is another challenge: without clear rules, it's hard to be sure AI use meets regulations such as the GDPR or the EU AI Act, and many firms say they're working to reduce this risk.

The study notes several approaches that experts say can help organizations reduce AI-related anxiety. These include centralizing governance, keeping people involved in reviewing AI-generated work, making senior leaders accountable for AI oversight and identifying which AI tools employees are already using.

Image Credit: Pop Nukoonrat / Dreamstime.com