In a more complicated gaming world, OpenGL 4.0 gets simpler, smarter

Although game console manufacturers still drive studios toward exclusivity for individual titles, so that a popular Xbox 360 game isn't available for PlayStation 3 and vice versa, developers within those studios increasingly insist on cross-platform flexibility and portability. While they may be restricted to one console, they don't want those borders to extend to computers or to handsets.

For this reason, the Khronos Group has become increasingly important to developers, and OpenGL is no longer being perceived as some kind of fallback standard, as in the phrase, "Your graphics use only OpenGL." Today, OpenGL is developers' ticket to portability between PCs, consoles, and handsets, and it's the only technology shining a ray of hope for cross-console portability should it ever become politically feasible.

Today at the annual Game Developers Conference, Khronos (whose principal members include AMD, Nvidia, and Sony) unveiled on schedule the 4.0 version of its OpenGL cross-platform rendering language for 3D virtual environments. To understand the significance of this event, you have to understand a bit more about the challenges that game developers are facing. Specifically, as average screen sizes grow larger, screen resolutions grow finer, and the number of screens per system grows beyond one, the types of simplifications that made 3D scenes look "good enough" for older PCs actually look bad on modern systems. More finely detailed systems make typical "fuzzifications" (my word for it, not Khronos') look more obvious.

It's still too arithmetically costly to expect 3D games and artistic applications to be ray tracers -- they can't assume the shading values for a huge number of traveling photons in space in real time. We've talked before about how some graphics cards, including the first DirectX 10.1 cards from ATI in late 2007, can track a few photons, and fudge the shader values for the rest.

But applying that type of calculation to OpenGL -- so it can be used with ATI and Nvidia (Intel? Maybe someday) -- requires the application to calculate how much...or more accurately, how little detail it can get away with, for certain points in space. Typically in OpenGL, developers have used lookup functions to determine the level of detail required to map any given area. As screen dynamics change, the number of functions required for a given space may increase, and their efficiency may decrease. As a result, it could take exponentially more time to make a scene look realistic -- to fuzz the focus of areas that are on the sidelines or out of range, or to blur regions that we're supposed to be flying by.

Khronos' engineers tackled this problem by extending the research begun earlier in the last decade (PDF available here) by SGI, the company that originally got the ball rolling for OpenGL. Technically, the whole technique is called "level of detail," but another way to refer to it is shader simplification. Used judiciously, it's a way to make certain elements of a scene seem clearer by selecting which others appear not so clear.
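To make "level of detail" a little more concrete, here is a minimal Python sketch of the way a detail level is commonly chosen for a mipmapped texture: take the base-2 logarithm of the number of texels that one screen pixel covers. This is not OpenGL or GLSL code, and it is not taken from the 4.0 specification. The function names (`compute_lod`, `build_mip_chain`, `sample_level`) and the tiny one-dimensional textures are hypothetical, invented purely for illustration.

```python
import math

def compute_lod(du_dx, dv_dx, du_dy, dv_dy, tex_size, max_level):
    """Estimate a mip level from how many texels one screen pixel covers."""
    # Footprint of a one-pixel step along screen x and screen y, in texels.
    len_x = math.hypot(du_dx, dv_dx) * tex_size
    len_y = math.hypot(du_dy, dv_dy) * tex_size
    rho = max(len_x, len_y)
    if rho <= 1.0:
        return 0.0  # magnified or 1:1, so use the full-resolution level
    return min(math.log2(rho), float(max_level))

def build_mip_chain(base):
    """Average neighbouring pairs until one texel remains (1D for brevity)."""
    chain = [list(base)]
    while len(chain[-1]) > 1:
        prev = chain[-1]
        chain.append([(prev[i] + prev[i + 1]) / 2 for i in range(0, len(prev), 2)])
    return chain

def sample_level(chain, u, lod):
    """Nearest-texel lookup at an explicit, already known level of detail."""
    level = chain[min(int(round(lod)), len(chain) - 1)]
    index = min(int(u * len(level)), len(level) - 1)
    return level[index]

# Two hypothetical textures that share the same texture coordinates.
color_chain = build_mip_chain([0, 2, 4, 6, 8, 10, 12, 14])
gloss_chain = build_mip_chain([1, 1, 3, 3, 5, 5, 7, 7])

# One pixel covers 4 texels of an 8-texel-wide texture, which maps to level 2.
lod = compute_lod(0.5, 0.0, 0.0, 0.5, tex_size=8, max_level=3)

# The level is worked out once, then handed to both lookups.
color = sample_level(color_chain, 0.3, lod)
gloss = sample_level(gloss_chain, 0.3, lod)
```

The point of the sketch is the last three statements: the level is derived a single time and then shared by every lookup that needs it, instead of each lookup deriving it again.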

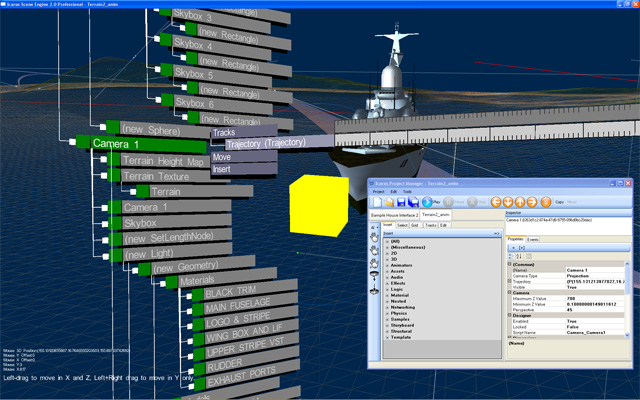

Screenshot of an early build of the Icarus Scene Engine, an OpenGL-based 3D scene editor that is itself rendered in 3D, using the OpenTK toolkit.

As scenes are processed, OpenGL effectively determines the active level of detail for any given fragment (the equivalent of what Direct3D calls a "pixel," which isn't always a "pixel" per se). OpenGL 4.0 expedites this process by recognizing that levels of detail should be remembered, so that when a function looks one up from the outside, it doesn't have to be recalculated. Thus, at the top of version 4.0's list of changes is the new textureLOD function set, which will not change how developers use the API -- it's like a retroactive fix. The new functions recall levels of detail rather than recalculate them.

These new functions are actually necessary for OpenGL to work (properly) on ATI Radeon HD 5xxx series graphics cards, which began shipping last year. Developers are looking forward to ATI upgrading its 5xxx drivers to enable 4.0, now that 4.0 has enabled them.

The result may be an avoidance of the exponential lags that developers had been seeing as resolution and screen complexity increased. Double-precision floating-point vectors will be supported for the first time, also signifying the new dynamics of 3D rendering. And now, cube map textures can be layered, potentially for more iridescent effects that will substitute for bump mapping.
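For a sense of why double precision can matter as scenes grow, consider a small Python sketch of what happens to a coordinate far from the origin when it is stored in 32 bits rather than 64. Python's own floats are already 64-bit, so the 32-bit case is simulated here by round-tripping a value through the standard `struct` module. The helper name `to_float32` and the sample coordinate are hypothetical, chosen only to illustrate the effect, and say nothing about how OpenGL 4.0 itself stores values.

```python
import struct

def to_float32(value):
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", value))[0]

# A coordinate far from the origin, nudged by one unit.
far_position = 16_777_216.0        # 2**24
nudged = far_position + 1.0

single = to_float32(nudged)        # the value as a 32-bit float would hold it
double = nudged                    # the value as a 64-bit float holds it

print(single - far_position)       # nudge lost in single precision
print(double - far_position)       # nudge preserved in double precision
```

At this distance a 32-bit float can no longer represent a one-unit step, so the nudge disappears, while the 64-bit value keeps it.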

As one contributor to Khronos' OpenGL forum noted this morning, "I just had a look on the extension, it's so much more than whatever I could have expected! I think there is a lot of developer little dreams that just happened here."

The first rollout demonstrations of OpenGL 4.0, along with its WebGL counterpart, were scheduled for late this afternoon, West Coast time. That's probably where we'll see our first screen shots of the final specification at work.

We'll also learn more about just how viable the new edition is for cross-platform development. This afternoon, another forum contributor from Montreal noted the remaining political roadblocks to true cross-platform development: "As a cross-platform developer I would like to use OpenGL exclusively but it's commercially unviable to use it on Windows, due to the fact that OpenGL just doesn't work on most machines by default, which forces me to target my game to both DirectX and OpenGL. The OpenGL shortcomings on Windows aren't a big deal for hardcore games where the users are gonna have good drivers (although they are a cause of too many support calls which makes it inviable anyway) but it's a show-stopper for casual games...Until OpenGL isn't expected to just work in any Windows box, it is dead in the Windows platform. Do something about this please."