New Chrome extension helps spot deepfakes

Deepfakes are becoming more of a problem, particularly around elections, when they can be used to influence voters' views. They're also getting better, so it can be hard to know whether what you're watching or hearing is real or fake.

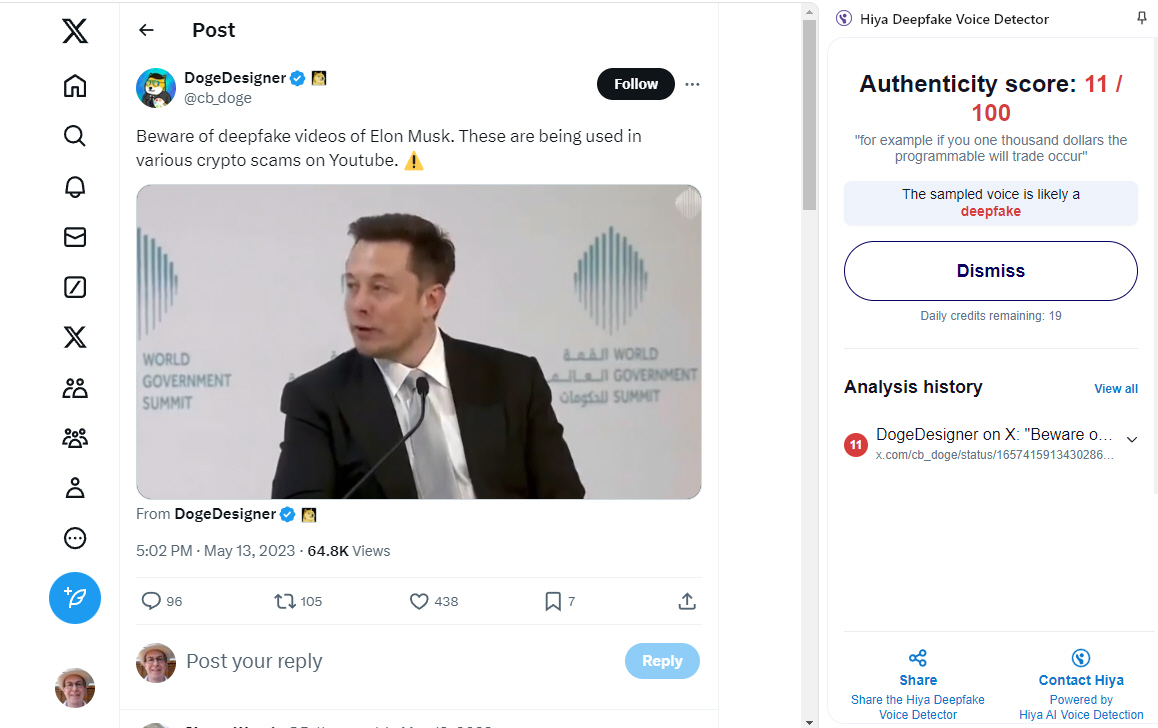

To combat this issue, voice security company Hiya has launched a new extension for the Chrome browser designed to identify video and audio deepfakes with up to 99 percent accuracy by analyzing just a few seconds of audio.

Deepfake fraud is on the rise, say business leaders

Over half of C-suite and other executives (51.6 percent) expect an increase in the number and size of deepfake attacks targeting their organizations' financial and accounting data in the next year.

A new Deloitte poll suggests that increase could affect more than a quarter of executives in the year ahead: 25.9 percent of those polled report that their organizations experienced either one (15.1 percent) or multiple (10.8 percent) deepfake financial fraud incidents during the past year.

How CISOs should tackle the year of deepfakes

Deepfakes are picking up steam and no one is safe -- not even the President of the United States, who was recently the subject of an election-related audio deepfake scandal. With the impending presidential election making for an unavoidably heated year ahead, I anticipate deepfakes will continue to proliferate.

Deepfakes are a unique cybersecurity topic. They stem from social engineering and are always evolving, yet CISOs still have a responsibility to position their organizations to combat them.

Manufacturing and industrial sectors most targeted by attackers

Manufacturing and industrial products remained the sectors most targeted by cyber threat actors in the first half of 2024, with 377 confirmed reports of ransomware and database leak hits.

A new report from managed detection and response specialist Critical Start is based on analysis of 3,438 high and critical alerts generated by 20 supported Endpoint Detection and Response (EDR) solutions, as well as 4,602 reports detailing ransomware and database leak activities across 24 industries in 126 countries.

Deepfakes: the next frontier in digital deception

Machine learning (ML) and AI tools raise concerns over mis- and disinformation. These technologies can 'hallucinate' or create text and images that seem convincing but may be completely detached from reality. This may cause people to unknowingly share misinformation about events that never occurred, fundamentally altering the landscape of online trust. Worse -- these systems can be weaponized by cyber criminals and other bad actors to share disinformation, using deepfakes to deceive.

Deepfakes -- the ability to mimic someone using video or audio and to make them appear to say what you want -- are a growing threat in cybersecurity. Today, the widespread availability of advanced technology and accessible AI allows virtually anyone to produce highly realistic fake content.

Why a 'Swiss cheese' approach is needed to combat deepfakes [Q&A]

Deepfakes are becoming more and more sophisticated: earlier this year a finance worker in Hong Kong was tricked out of millions following a deepfake call.

With the deepfake fast becoming a weapon of choice for cybercriminals, we spoke to Bridget Pruzin, senior manager -- compliance and risk investigations and analysis at Convera, to learn why she believes a 'Swiss cheese' approach, layering controls such as unique on-call verification steps and in-person verification, is crucial to defending effectively against these scams.

Companies lack policies to deal with GenAI use

While 27 percent of security experts perceive AI and deepfakes to be the biggest cybersecurity threats to their organisations, not all have a responsible use policy in place.

KnowBe4 has today released the third part of its survey of over 200 information security professionals, carried out at Infosecurity Europe 2024. It finds that 31 percent of security professionals admit their company does not currently have a 'responsible use' policy for generative AI in place.

Almost three-quarters of US companies have a deepfake response plan

Given the level of worry around the influence of deepfakes -- as we reported yesterday -- it's perhaps not surprising to learn that companies are developing their own deepfake response plans.

A new survey of over 2,600 global IT and cybersecurity professionals, from software recommendation engine GetApp, finds 73 percent of US respondents report that their organization has developed a deepfake response plan.

72 percent of Americans worry about deepfakes influencing elections

New research from identity verification company Jumio finds growing concern among Americans about the political influence AI and deepfakes may have during upcoming elections, and about how they might affect trust in online media.

The study of over 8,000 adult consumers, split evenly across the UK, US, Singapore and Mexico, finds 72 percent of Americans are worried about the potential for AI and deepfakes to influence upcoming elections in their country.

Fraudulent transactions increase over 70 percent

Fraudulent transactions in the first half of 2024 were up over 73 percent year on year, and suspected fraudulent transactions increased by over 84 percent, according to the 2024 Mid-Year Identity Fraud Review, released today by AuthenticID.

The report also looks at the latest trends including a surge in AI-enabled fraud, as well as the increased use of deepfakes for identity fraud tactics like account takeover attacks and injection attacks.

Most consumers ready to switch banks over fraud protection measures

A new study reveals growing anxiety among consumers that weaknesses in their banks' fraud-protection measures could leave them exposed to scammers, with the vast majority (75 percent) saying such weaknesses would lead them to switch providers.

The report from Jumio sampled the views of more than 8,000 adult consumers, split evenly across the UK, US, Singapore and Mexico, with research carried out by Censuswide.

Think you could spot a deepfaked politician?

Given the quality of many politicians at the moment you might be forgiven for thinking that sometimes a deepfake would be an improvement.

But seriously, a new study from Jumio of over 2,000 adults across the UK finds that 60 percent are worried about the potential for AI and deepfakes to influence upcoming elections, and only 33 percent think they could easily spot a deepfake of a politician.

Deepfakes pose growing fraud risk to contact centers

Deepfake attacks, including sophisticated synthetic voice clones, are rising, posing an estimated $5 billion fraud risk to US contact centers, according to the latest Pindrop Voice Intelligence and Security Report.

Contact center fraud has surged by 60 percent in the last two years, reaching the highest levels since 2019. By the end of this year, one in every 730 calls to a contact center is expected to be fraudulent.

Deepfakes are now the second most common security incident

Concern around deepfakes has been growing for some time, and new research released by ISMS.online shows deepfakes now rank as the second most common information security incident for UK businesses, having been experienced by nearly a third of organizations.

The report, based on a survey of over 500 information security professionals across the UK, shows that nearly 32 percent of UK businesses have experienced a deepfake security incident in the last 12 months.

Consumers worry about being fooled by deepfakes

A new report from Jumio shows 72 percent of consumers worry on a daily basis about being fooled by deepfakes.

Based on a survey by Censuswide of more than 8,000 adult consumers, split evenly across the UK, US, Singapore and Mexico, it finds only 15 percent of consumers say they've never encountered a deepfake video, audio or image before, while 60 percent have encountered a deepfake within the past year.