Celebrating Data Privacy Day: Ensuring ethical agentic AI in our daily interactions

Both AI agents and agentic AI are becoming increasingly powerful and prevalent. With AI agents, we can automate simple tasks and save time in our everyday lives. With agentic AI, businesses can automate complex enterprise processes. Widespread AI use is an inevitability, and the question going forward is not if we’ll use the technology but how well.

In a world where AI takes on more responsibility, we need to know how to measure its effectiveness. Metrics like the number of human hours saved or the costs reduced are, of course, important. But we also need to consider things like how ethically and securely our AI solutions operate. This is true when adopting third-party solutions and when training AI in house.

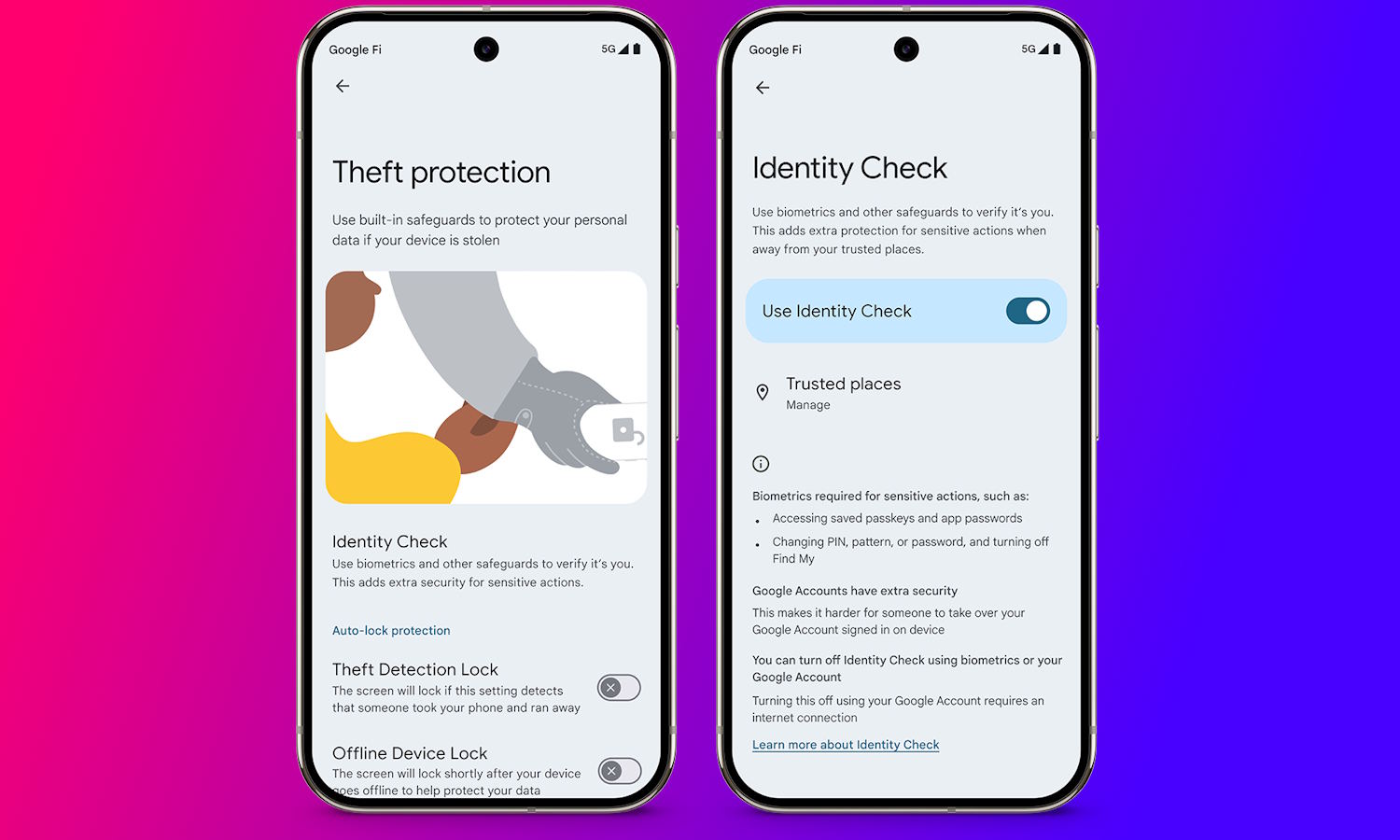

Google launches Identity Check, a new location-based security feature, and completes roll-out of AI-powered theft detection

Theft of mobile devices is a crime that is not going away any time soon. Phones are now completely central to so many aspects of life, and the theft (or loss) of one is about much more than the monetary value of the device itself. There is great potential for a thief to gain access to a wealth of information via a stolen phone.

This is why the security of mobile devices is so important, and it is why Google is taking steps to limit the impact of theft. A new feature that is starting to roll out is Identity Check, which requires the use of biometric authentication whenever your device is in an unknown or untrusted location. The company is also harnessing the power of artificial intelligence for good, using AI-powered tools to detect thefts.

Hyper-personalization is here -- but are organizations ready?

The rising demand for relevant, convenient and personalized customer experiences across all sectors has put modern organizations under pressure to adapt. McKinsey reports that almost three quarters of buyers now expect personalized interactions. The choice is clear: either embrace personalization, with individual offers or tailored updates based on previous habits, or risk falling behind competitors with a standardized approach.

Many organizations already do this well. From personalized Netflix movie recommendations to tailored Google adverts built on previous searches, organizations are able to delight customers with tailored services.

Apple decides to disable its broken AI-powered news summaries

While Apple was excited to roll out news summaries powered by artificial intelligence, the reception has been somewhat muted because of some serious issues. The Apple Intelligence notification summaries were found to be sharing misleading or incorrect news headlines.

The BBC and other news outlets complained that their names were being used to spread misinformation, and Apple responded by promising an update to the service. For now, though, the company seems to have changed its mind, opting instead to simply disable notification summaries.

Microsoft increases its focus on artificial intelligence by creating a new CoreAI team

Microsoft continues to bet big on AI and the company has created a new artificial intelligence engineering division called CoreAI. The new development-focused unit is headed by Jay Parikh -- once Meta's VP and global head of engineering -- and the intention is to speed up AI infrastructure and software development at Microsoft.

CEO Satya Nadella describes 2025 as being "about model-forward applications that reshape all application categories". Nadella clearly wants to power forward in what he says is the "next innings of this AI platform shift".

How to quickly remove AI results from Google Search

You can’t have failed to notice that certain searches on Google now display AI-generated summaries, known as "AI Overviews," at the top of search results.

If you ask Google a question, such as “What is Bigfoot?” you will see an instant answer explaining that it is a “legendary, hairy, ape-like creature said to live in the forests of North America, especially in the Pacific Northwest.” That overview will also provide additional information about its size, appearance, location, and other names.

VLC will soon be able to use AI to generate subtitles for any video

VLC Media Player remains one of the most popular video players, having just hit a staggering 6 billion downloads. But VideoLAN, the company behind the software, is not one to rest on its laurels, as an exciting demonstration at CES shows.

One of the biggest features in the pipeline for the media player is automatic subtitle generation and translation based on local, open-source AI models. With subtitles being vital for a lot of people, and highly preferable for many, this use of artificial intelligence plugs an important gap in media accessibility.

Companies have to address the risks posed by GenAI

Even though it’s only been two years since the public demo of ChatGPT launched, popularizing the technology for the masses, generative AI technology has already had a profound and transformative effect on the world. In the years since the platform’s launch, critics have regularly pointed out the risks of generative AI and called for increased regulation to mitigate them. Once these risks are addressed, companies will be more free to use AI in ways that help their bottom line and the world as a whole.

We must remember that artificial intelligence is a powerful tool, and as the adage goes, “With great power comes great responsibility.” Although we have seen AI make a positive impact on society in several ways -- from boosting productivity in industrial settings to contributing to life-saving discoveries in the medical field -- we have also seen wrongdoers abuse the technology to cause harm.

Harnessing AI to drive team efficiency and optimize project management

As organizations strive for greater project management efficiency, AI can be a powerful tool to identify inefficiencies, anticipate risks, and improve decision-making. From content creation to data summarization, generative AI (GenAI) transforms how teams work by quickly creating valuable outputs with less effort. For example, a daily sprint report that might take a team member up to an hour to compile can be generated in seconds by AI that is trained to summarize data from multiple sources.

Over time, small efficiencies like these are multiplied, leading to extensive time savings across teams and organizations. By handling repetitive tasks, GenAI models give teams more time to focus on collaboration, strategic thinking, and creative problem-solving.

In large companies, particularly those with high staff turnover or matrixed, cross-functional teams, new team members often face a steep learning curve when adapting to the team’s specific tools and processes. By functioning as a copilot, an ever-present, always-patient expert on all things project management, GenAI can provide answers from both static documentation and real-time data. When getting up to speed in a new role, a manager could ask a GenAI copilot what is happening across a team that could, over time, negatively impact the projects they are working on. The feedback given can help address these issues, reduce the manager’s learning curve, and provide on-the-job training that delivers immediate, actionable insights.

Microsoft says 2025 is the year to ditch Windows 10 and embrace Windows 11

Every time January rolls around there are declarations that this will be the year of Linux on the desktop -- and of course, it never is. This year is no different, and Microsoft would much rather you consider 2025 to be the “year of the Windows 11 PC refresh”.

The company is using CES as a platform to encourage people who are still hanging on to Windows 10 to loosen their grip and move to Windows 11. The end of support for Windows 10 is being used as a stick, but the carrot is the new breed of AI-powered Copilot+ PCs. Microsoft is hoping that the artificial intelligence-driven capabilities of this recently launched range of PCs will also encourage existing Windows 11 users to invest in new hardware.

AI in life sciences and healthcare

The life sciences and healthcare industries have critical challenges to overcome in 2025. Costs in both are on the rise, forcing business leaders to seek out more efficient methods for developing and delivering new products and services. Additionally, consumer expectations are higher than ever, with patients and other clients looking for features that maximize convenience and accessibility.

To help with these challenges, industry leaders should consider the potential of artificial intelligence. AI has been transforming the business world by providing the power to automate, analyze, and increase efficiency in unprecedented ways. For life sciences and healthcare, AI provides the capability to gather and analyze data in a way that drives better outcomes and delivers better consumer experiences.

Coding in the age of AI: Redefining software development

As AI makes its mark on every industry, the software development landscape is experiencing a rapid transformation driven by the integration of automation into coding practices. With approximately $1 billion invested in AI-driven code solutions since early 2022, we’re seeing a shift that goes far beyond just automation. This transformation is redefining the entire software development lifecycle and testing assumptions about what it means to be a developer.

It is clear, as we embark on a new era, that the future of coding lies not in opposing change but in adapting our approaches to education and practice in software development.

The AI arms race: How machine learning is disrupting financial crime

The financial services industry is in the midst of an unprecedented AI arms race. Criminal organizations are getting smarter, using cutting-edge tech to launch elaborate attacks on financial systems. In response, financial institutions (FIs) are turning to AI and machine learning (ML) to level the playing field. That’s right -- FIs are keeping pace with their criminal counterparts, thwarting malicious activity much more reliably and efficiently.

Having spent my career at the intersection of finance and technology, I've seen the constant race to stay ahead of evolving criminal operations. Rules-based systems, while foundational, simply can't match the speed and adaptability of modern financial crime. But now, through advanced pattern detection, adaptive defense mechanisms, and dramatically improved accuracy in identifying suspicious activity, AI is fundamentally reshaping how we fight financial crime -- and winning.

AI and automation: The future of pool care

As technology advances, the integration of artificial intelligence and automation is reshaping the landscape of pool maintenance. These innovations promise not only to enhance efficiency but also to align with eco-friendly practices, paving the way for a sustainable future in pool care.

The future of pool maintenance is being defined by the convergence of AI and automation. Homeowners are increasingly adopting these technologies to streamline pool care, reduce manual labor, and minimize environmental impact. A prime example is the use of a solar-powered pool skimmer, which combines AI-driven efficiency with renewable energy, setting a new standard for sustainable pool management.

The dark side of AI: How automation is fueling identity theft

Automations empowered by artificial intelligence are reshaping the business landscape. They give companies the capability to connect with, guide, and care for customers in more efficient ways, resulting in streamlined processes that are less costly to support.

However, AI-powered automations also have a dark side. The same capabilities they provide for improving legitimate operations can also be used by criminals intent on identity theft. The rise of low-cost AI and its use in automations has empowered scammers to widen their nets and increase their effectiveness, leading to a drastic increase in identity theft scenarios.