Apps, analytics and AI: 4 common mistakes

The app economy is big business. Apple’s App Store ecosystem alone generated a staggering $1.1 trillion in total billings and sales for developers in 2022. But as users demand more relevant and immediate experiences, often driven by AI, developers increasingly need competitive advantages to stand out.

Real-time analytics, supercharged by generative AI, can provide a critical edge by allowing developers to extract key insights and quickly adapt their apps to reflect changing user expectations. But only 17 percent of enterprises today have the ability to perform real-time analysis on large volumes of data, and adoption remains slow. Meanwhile, even when companies are able to perform real-time analytics, there are several common mistakes that can prevent them from reaping its full benefits:

Will AI change the makeup of software development teams?

With the increased popularity of artificial intelligence technology, many human workers have expressed concern that AI models will replace them or make their positions obsolete. This is particularly the case with occupations like coding and software design, where artificial intelligence has the opportunity to automate several essential processes. Although AI is a powerful tool with the potential to revolutionize the coding process, human workers remain invaluable, as the technology is still in its infancy.

Software development teams are among the ranks of workers most profoundly affected by the AI revolution. Some of the ways in which software development teams have begun to use artificial intelligence include:

Save $25.99! Get 'Artificial Intelligence and Machine Learning Fundamentals' for FREE

Machine learning and neural networks are pillars on which you can build intelligent applications. Artificial Intelligence and Machine Learning Fundamentals begins by introducing you to Python and discussing AI search algorithms.

The book covers in-depth mathematical topics, such as regression and classification, illustrated by Python examples. As you make your way through the book, you will progress to advanced AI techniques and concepts, and work on real-life datasets to form decision trees and clusters.

Tackling information overload in the age of AI

Agile decision-making is often hampered by the volume and complexity of unstructured data. That’s where AI can help.

In 2022, the U.S. Congress passed the Inflation Reduction Act (IRA), which allocated billions in investment to clean energy. This set off a race among private equity and credit firms to identify potential beneficiaries -- the companies throughout the clean energy supply chain that may need additional capital to take advantage of the new opportunities the IRA would create. It turned out to be quite a data challenge.

Save $18! Get 'AI + The New Human Frontier: Reimagining the Future of Time, Trust + Truth' FREE for a Limited Time

AI + The New Human Frontier: Reimagining the Future of Time, Trust + Truth by Erica Orange, a renowned futurist, offers a compelling exploration of generative AI's potential to enhance human creativity rather than replace it. This pivotal book navigates how AI tools will help shape the human experience, and aid in augmenting human ingenuity and imagination.

The author eloquently argues that the essence of human intelligence -- our curiosity, critical thinking, empathy, and more -- is not only irreplaceable but will become increasingly valuable as AI evolves to take on routine tasks. AI + The New Human Frontier is a clarion call for embedding trust, human oversight and judgement into AI development, ensuring that the technology amplifies our most human capabilities. At a time when the lines between what is real, fake, true and false are becoming more blurred, reliance on human-centric solutions, not just technological ones, will become more critical.

The biggest mistake organizations make when implementing AI chatbots

Worldwide spending on chatbots is expected to reach $72 billion by 2028, up from $12 billion in 2023, and many organizations are scrambling to keep pace. As companies race to develop advanced chatbots, some are compromising performance by prioritizing data quantity over quality. Just adding data to a chatbot’s knowledge base without any quality control guardrails will result in outputs that are low-quality, incorrect, or even offensive.

This highlights the critical need for rigorous data hygiene practices to ensure that conversational AI software gives accurate, up-to-date responses.

It’s time to treat software -- and its code -- as a critical business asset

Software-driven digital innovation is essential for competing in today's market, and the foundation of this innovation is code. However, there are widespread cracks in this foundation -- lines of bad, insecure, and poorly written code -- that manifest as tech debt, security incidents, and availability issues.

The cost of bad code is enormous, estimated at over a trillion dollars. Just as building a housing market on bad loans would be disastrous, businesses need to consider the impact of bad code on their success. The C-suite must take action to ensure that its software and its maintenance are constantly front of mind in order to run a world-class organization. Software is becoming a CEO and board-level agenda item because it has to be.

The newest AI revolution has arrived

Large language models (LLMs) and other forms of generative AI are revolutionizing the way we do business. The impact could be huge: McKinsey estimates that current gen AI technologies could eventually automate about 60-70 percent of employees’ time, facilitating productivity and revenue gains of up to $4.4 trillion. These figures are astonishing given how young gen AI is. (ChatGPT debuted just under two years ago -- and just look at how ubiquitous it is already.)

Yet we are already approaching the next evolution of AI: agentic AI. This more advanced form of AI builds on the progress of LLMs and gen AI and will soon enable AI agents to solve even more complex, multi-step problems.

The evolution of AI voice assistants and user experience

The world of AI voice assistants has been moving at a breakneck pace, and Google's latest addition, Gemini, is shaking things up even more. As tech giants scramble to outdo each other, creating voice assistants that feel more like personal companions than simple tools, Gemini seems to be taking the lead in this race. The competition is fierce, but with Gemini Live, we're getting a taste of what the future of conversational AI might look like.

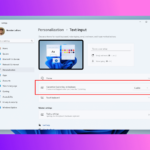

Microsoft will let Windows 11 users remap the stupid Copilot key on their keyboard

If you’ve bought a computer or a keyboard recently, you may have spotted an extra key near the spacebar. The Copilot key is Microsoft’s attempt to push, prompt and encourage use of its AI-powered digital assistant, but not everyone is convinced.

Even if you are someone who sees the value in Copilot as a tool, you may well not feel the need to have a dedicated physical key to access it. Thankfully, Microsoft understands that the latest addition to keyboards is not something that everyone needs. As such, the company is testing the ability to remap the Copilot key.

Addressing the demographic divide in AI comfort levels

Today, 37 percent of respondents said their companies were fully prepared to implement AI, but looking out on the horizon, a large majority (86 percent) of respondents said that their AI initiatives would be ready by 2027.

In a recent Riverbed survey of 1,200 business leaders across the globe, 6 in 10 organizations (59 percent) feel positive about their AI initiatives, while only 4 percent are worried. But all is not rosy. Senior business leaders believe there is a generational gap in the comfort level of using AI. When asked who they thought was MOST comfortable using AI, they said Gen Z (52 percent), followed by Millennials (39 percent), Gen X (8 percent) and Baby Boomers (1 percent).

Scratch that! We’re actually no wiser about when Microsoft plans to release the Windows 11 24H2 update

For those who are keenly awaiting the release of the Windows 11 24H2 update, a recent Microsoft blog post caused a good deal of excitement. It appeared to reveal that this significant feature update is due to roll out this very month, but all was not as it seemed.

Microsoft has now updated the blog post to clarify that the information it includes has been misinterpreted -- or perhaps that it was not written clearly enough in the first place. Where does this leave us?

Microsoft reveals the imminent release date for Windows 11 24H2

If you have been wondering just when you’ll be able to get your hands on the final version of the Windows 11 24H2 update, wonder no more.

Although Microsoft has not made a big announcement about the release date for this eagerly anticipated feature update for Windows 11, the company has -- seemingly inadvertently -- revealed the release schedule in a blog post. This spills the news that the release of Windows 11 24H2 is just days away.

Why businesses can't go it alone over the EU AI Act

When the European Commission proposed the first EU regulatory framework for AI in April 2021, few would have imagined the speed at which such systems would evolve over the next three years. Indeed, according to the 2024 Stanford AI Index, in the past 12 months alone, chatbots have gone from scoring around 30-40 percent on the Graduate-Level Google-Proof Q&A Benchmark (GPQA) to 60 percent. That means chatbots have gone from scoring only marginally better than would be expected by randomly guessing answers to being nearly as good as the average PhD scholar.

The benefits of such technology are almost limitless, but so are the ethical, practical, and security concerns. The landmark EU AI Act (EUAIA) legislation was adopted in March this year in an effort to overcome these concerns, by ensuring that any systems used in the European Union are safe, transparent, and non-discriminatory. It provides a framework for establishing:

Meta is training its AI using an entire nation’s data… with no opt-out

The question of how to train and improve AI tools is one that triggers fierce debate, and this is something that has come into sharp focus as it becomes clear just how Meta is teaching its own artificial intelligence.

The social media giant is -- perhaps unsurprisingly to many -- using data scraped from Facebook and Instagram posts, but only in Australia. Why Australia? Unlike in Europe, where the General Data Protection Regulation (GDPR) required Meta to give users a way to opt out of having their data used in this way, Australians have not been afforded the same opportunity. What does this mean?

BetaNews, your source for breaking tech news, reviews, and in-depth reporting since 1998.

© 1998-2025 BetaNews, Inc. All Rights Reserved.