Generative AI: closing the developer gap and redefining the software moat [Q&A]

Generative AI (GenAI) is reshaping software development, closing the long‑standing gap between surging demand for new applications and the limited supply of skilled developers. But this AI‑driven leap in productivity comes with an unexpected twist: it’s also dissolving the traditional technological edge that once set companies apart.

So where does sustainable advantage come from in this new world? We sat down with Matthias Steiner, senior director of global business innovation at Syntax, to explore how enterprises can redefine their competitive edge in the GenAI era.

Generative simulators allow AI agents to learn their jobs safely

As AI systems increasingly shift from answering questions to carrying out multi-step work, a key challenge has emerged. The static tests and training data previously used often don't reflect the dynamic and interactive nature of real-world systems.

That’s why Patronus AI today announced its ‘Generative Simulators,’ adaptive simulation environments that can continually create new tasks and scenarios, update the rules of the world in a simulation environment, and evaluate an agent's actions as it learns.

One in 44 GenAI prompts risks a data leak

In October, one in every 44 GenAI prompts submitted from enterprise networks posed a high risk of data leakage, impacting 87 percent of organizations that use GenAI regularly.

A study from Check Point Research finds an additional 19 percent of prompts contained potentially sensitive information such as internal communications, customer data, or proprietary code. These risks coincide with an eight percent increase in average daily GenAI usage among corporate users.

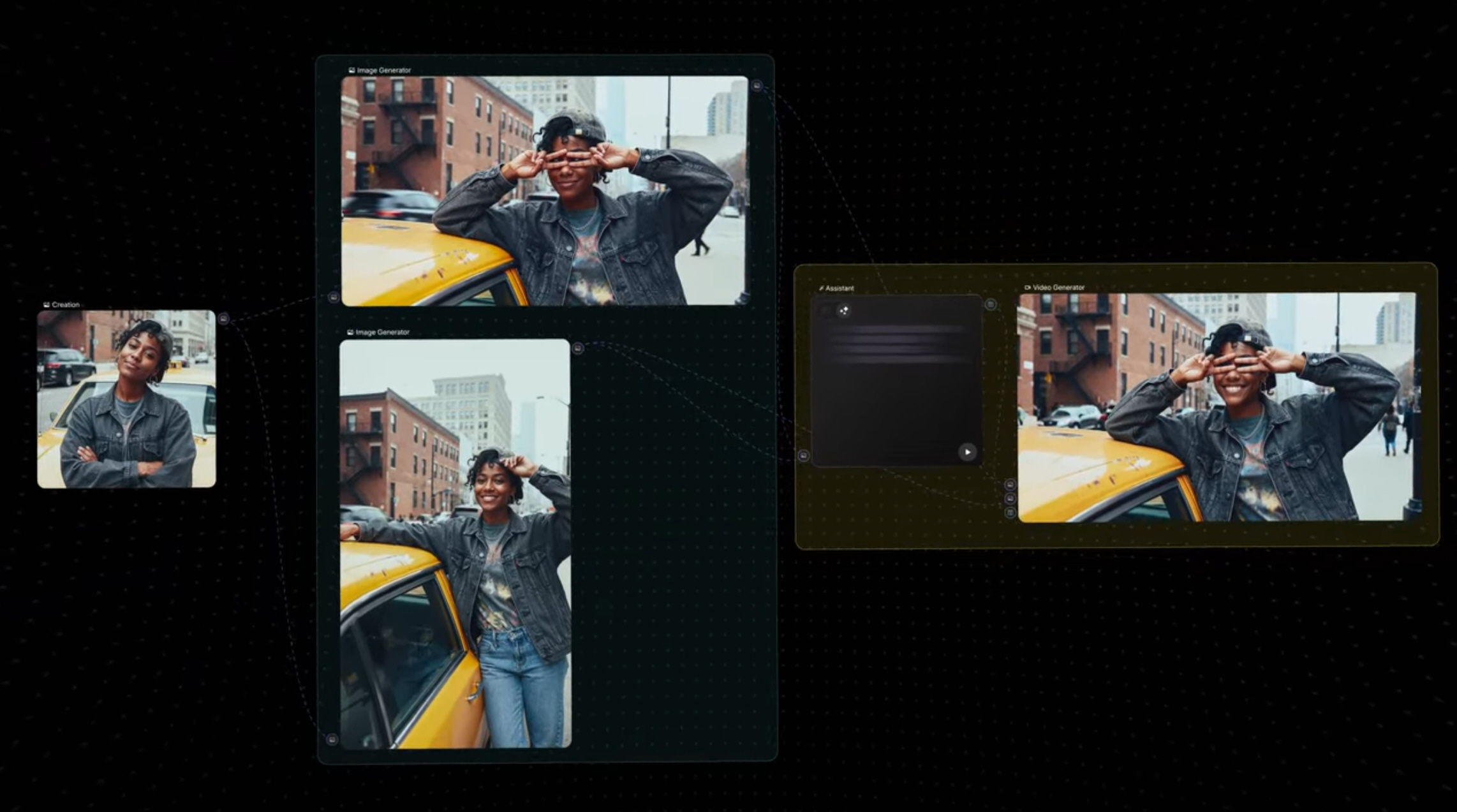

Freepik launches Freepik Spaces to power real time AI visual creation and collaboration

Freepik has introduced Freepik Spaces, a new product within its AI Creative Suite to allow creative teams to design, automate, and collaborate on visual projects in real time. The platform uses a node-based interface that brings the entire creative process onto one shared canvas, so teams can move from concept to campaign within a single environment.

Freepik Spaces is targeting creative directors, advertisers, marketers, filmmakers, innovation leads, and other professionals who work across visual media. Users can connect multiple AI tools and workflows within the same space, doing away with the need to switch between separate applications. The goal is to streamline production and make collaboration easier for distributed teams.

Text-to-video app Sora surges past 1 million downloads as OpenAI races to meet demand

OpenAI’s text-to-video app Sora has reached over one million downloads in less than five days, according to Sora boss Bill Peebles on X.

Peebles said the milestone came faster than ChatGPT’s initial launch, despite Sora’s invite-only rollout and the fact that it is currently available only in North America.

Sora 2, OpenAI's AI video generator, is now available for everyone on Invideo

Invideo has become the first platform to offer unrestricted global access to Sora 2, OpenAI’s next-generation AI video model for generating cinematic, photorealistic footage from text prompts.

The partnership with OpenAI removes previous barriers such as invite codes, waitlists, VPNs, and 10-second limits, making next-generation generative video creation available to everyone. If you’ve ever wanted to see what your dog battling Godzilla at the end of the world might look like, now you can find out.

CISOs under pressure to keep data secure during AI rollouts without harming growth

IT leaders are optimistic about the value AI can deliver, but readiness is low. Many organizations still lack the security, governance and alignment needed to deploy AI responsibly.

A new study by the Ponemon Institute for OpenText finds 57 percent of CIOs, CISOs, and other IT leaders rate AI adoption as a top priority, and 54 percent are confident they can demonstrate ROI from AI initiatives. However, 53 percent say it is ‘very difficult’ or ‘extremely difficult’ to reduce AI security and legal risks.

Human risk and Gen AI-driven data loss top CISO concerns

As cyber threats become more frequent and complex, CISOs are increasingly concerned about their organization’s ability to withstand a material attack. Seventy-six percent feel at risk of experiencing a material cyberattack in the next 12 months, yet 58 percent say they are unprepared to respond.

The latest Voice of the CISO report from Proofpoint surveyed 1,600 global CISOs across 16 countries and finds human behavior remains a critical vulnerability, with 92 percent attributing at least some data loss to departing employees.

Insider threats become more effective thanks to AI

Artificial intelligence is making insider threats more effective, according to a new report, which also shows that 53 percent of respondents have seen a measurable increase in insider incidents in the past year.

The Exabeam survey of over 1,000 cybersecurity professionals finds 64 percent of respondents now view insiders, whether malicious or compromised, as a greater risk than external actors. Generative AI is a major driver of this, making attacks faster, stealthier, and more difficult to detect.

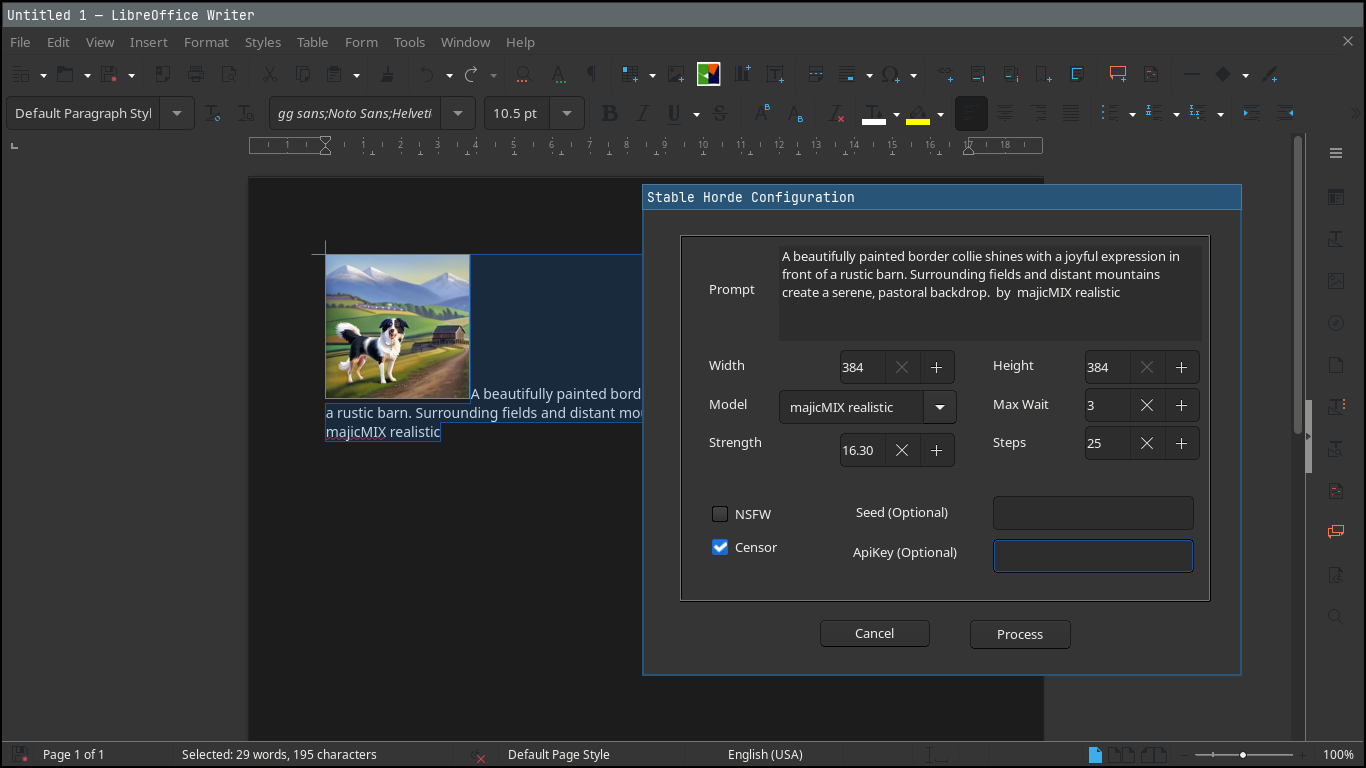

LibreOffice users can now generate AI art inside Writer and Impress

LibreOffice doesn’t ship with artificial intelligence features by default, but a new extension gives the office suite AI-powered features thanks to a community effort.

While Microsoft Office has started to integrate AI directly into its products, often tied to cloud subscriptions and proprietary tools, LibreOffice follows a different path where volunteers and independent developers can add optional capabilities through open-source extensions.

Copilot 3D is Microsoft’s latest generative AI experiment

There is no shortage of generative AI tools out there, and the latest is Copilot 3D. This experimental tool from Microsoft does very much what you would expect of it – it creates three-dimensional images using artificial intelligence.

As this is an experimental tool, there are a couple of things to keep in mind. Firstly, it is not a finished product, so there may be issues with it. Secondly, it is still being developed, so it could change dramatically or even disappear altogether. But let’s take a look at what is currently available.

Why the future of AI isn’t about better models -- it’s about better governance [Q&A]

The rise of generative and agentic AI is transforming how data is accessed and used, not just by humans but by non-human AI agents acting on their behalf. This shift is driving an unprecedented surge in data access demands, creating a governance challenge at a scale that traditional methods can’t handle.

If organizations can’t match the surge in access requests, innovation will stall, compliance risks will spike, and they will reach a breaking point. Joe Regensburger, VP of research at Immuta, argues that the solution isn’t more powerful AI models; it’s better governance. We talked to him to learn more.

Why an adaptive learning model is the way forward in AIOps [Q&A]

Modern IT environments are massively distributed, cloud-native, and constantly shifting. But traditional monitoring and AIOps tools rely heavily on fixed rules or siloed models -- they can flag anomalies or correlate alerts, but they don’t understand why something is happening or what to do next.

We spoke to Casey Kindiger, founder and CEO of Grokstream, to discuss new solutions that blend predictive, causal, and generative AI to offer innovative self-healing capabilities to enterprises.

The impact of AI -- how to maximize value and minimize risk [Q&A]

Tech stacks and software landscapes are becoming ever more complex and are only made more so by the arrival of AI.

We spoke to David Gardiner, executive vice president and general manager at Tricentis, to discuss how AI is changing roles in development and testing as well as how companies can maximize the value of AI while mitigating the many risks.

Half of Americans think AI is a threat, the other half don't. Who's right?

As artificial intelligence moves from tech circles into daily life, Americans are sharply divided over what it means for the future.

A new Gallup survey finds that 49 percent of U.S. adults see AI as “just the latest in a long line of technological advancements that humans will learn to use to improve their lives and society.”