Migrating from Windows Server 2003: 12 best practices straight from the trenches

Most of us have hopefully managed to get off the sinking ship that was Windows XP. As recent a memory as that has become, a new end of life is rearing its head, and it's approaching fast for those who haven't started planning for it. Microsoft's Windows Server 2003, a solid server operating system that's now about eleven and a half years old, is heading for complete extinction in just under 300 days. Microsoft has a fashionable countdown timer already ticking.

Seeing as we just finished our second server migration in a single week (a personal record so far), sharing some of the finer aspects of how we are streamlining these transitions seems like a timely fit. This braindump of sorts is a collection of best practices that we are routinely following for our own customers, and they seem to be serving us well so far.

The best practices below all assume that you have gone through a full inventory of your current servers, taking note of how many servers are still in production and what ongoing workloads they support. If you don't know where you stand, you have no idea where you're heading -- so stop reading here and start getting a grasp on your current server layout. I'm going to pen a fuller piece about how to inventory and plan a server move that addresses all of the non-technical criteria.

Microsoft has put together a fairly good four-step first party guide that you can follow on their Server 2003 EOL website, but as you can expect, it's chock full of soft sells on numerous products you may or may not need, so take it with the usual grain of salt and get an expert involved if necessary.

Given you have a solid inventory, a plan for replacement, and hardware to get the job done, here's a rundown of some of the things saving us hours of frustration.

There's Nothing Wrong with Re-Using Servers -- In Some Cases

Need to re-deploy another physical server after ditching 2003? Refurbishing existing servers for usage in your upgraded environment is not sinful, like some traditional MSPs or IT consulting firms may make it out to be. Many of the voices always pushing for "buy new!" are the ones who are used to making fat margins on expensive server purchases, so follow the money trail when being baited into a brand new server when it may not be necessary.

The last server I just finished deploying was a re-purposed Dell PowerEdge R210 1u rack mount server that was previously being underutilized as a mere Windows 7 development sandbox. With only a few years of age, and no true production workload wear (this is a lower end box, but it was used as anything but a server), the box was a perfect fit for the 20-person office it would end up supporting for AD, file shares, print serving, and other light needs.

We didn't just jump to conclusions on OK'ing the box to be placed back into production, mind you. Re-use of the server was wholly contingent upon the unit passing all initial underlying diagnostics of the existing hardware, and upon passing, getting numerous parts upgrades.

For this particular Dell R210, we ended up installing the max amount of RAM it allows (16GB), the second fastest CPU it could take (a quad core Intel Xeon X3470), dual brand new Seagate 600GB 15K SAS hard drives, and a new Dell H200 Perc RAID controller to handle the disks. A copy of Windows Server 2012 R2 was also purchased for the upgrade.

We also picked up a spare power supply for the unit to have on hand in case the old one dies, since the unit doesn't have warranty anymore. Having a spare HDD on hand doesn't hurt, either, for those planning such a similar move. You don't have to rely on manufacturer warranty support if you can roll your own, and the two most likely parts to fail on any server are arguably the PSU and HDDs.

Still have a good server that has useful life left? Refurbish it! Consultants pushing new servers blindly usually have fat margins backing up their intentions. We overhauled a Dell R210 for a 20-person office for less than half the cost of a brand new box. Proper stress testing and diagnosis before deciding to go this route are critical. (Image Source: Dell)

Instead of spending upwards of $5000-$6000 on a proper new Dell PowerEdge T420 server, this customer spent about half a thousand on refurbishment labor, and another $2000 or so in parts. In the end we ended up saving thousands on what I found to be unnecessary hardware.
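
For those who want to run the same comparison on their own quotes, here is a minimal Python sketch of the arithmetic above. The dollar figures are the rough ones from this R210 job; the function name and its parameters are made up for illustration.

```python
def refurb_savings(new_low, new_high, labor, parts):
    """Return (refurb_total, min_savings, max_savings) in dollars."""
    refurb_total = labor + parts
    return refurb_total, new_low - refurb_total, new_high - refurb_total


# Rough figures from the R210 overhaul: $5000-$6000 for a new box,
# about $500 in labor and about $2000 in parts for the refurbishment.
total, low, high = refurb_savings(new_low=5000, new_high=6000, labor=500, parts=2000)
print(f"Refurbishment total: ${total}")
print(f"Savings versus buying new: ${low} to ${high}")
```

Plug in your own vendor quote and parts list; if the savings range shrinks to a few hundred dollars, the refurbishment probably isn't worth the lost warranty.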

We also did a similar upgrade on an HP Proliant DL360e just a week prior. Second matching CPU installed, RAM increased, brand new Samsung 850 Pro SSDs put into a RAID 1, Windows Server 2012 R2 Standard, and a couple of extra fans. We took a capital outlay that would have been no less than $5K and turned it into a $2K overhaul.

Want to go the extra mile with the refurbished system and extend fan life? On all of our overhauls, we lubricate all of the server fans with a few drops of sewing machine oil. You can read about what a cheap lease on life this is in this TechRepublic blog post. A $5 bottle at Ace Hardware has lubricated dozens of servers and still has years of oil left.

One last key: it's super important to ensure you are using the right software to diagnose the system's internals with all parts being installed. On server overhauls, we run diagnostics before the system is approved for an overhaul, and also after all new parts go in. Unlike a workstation where we can afford downtime due to a bad part in many cases, a server doesn't have this kind of leeway for being down.

Almost every server maker out there has custom software for testing their boxes. Our favorite server OEM, Dell, has excellent utilities under the guise of Dell Diagnostics that can be loaded onto a DVD or USB stick and run in a "Live CD" style. Only after all tests pass with flying colors is a box allowed to go back into production.

In addition, we always stress test servers days before they are meant to be placed back into production with a free tool by JAM Software called HeavyLoad. It does the equivalent of red-lining a car for as many hours as you wish. We usually stress a server for 6-10 hours before giving it a green stamp of being ready for workloads again.

In another related scenario last year, we had a client who had dual Dell PowerEdge 2900 servers in production. We refurbished both, and kept one running as the production unit on Windows Server 2012, with the second clone kept in the server cage as a hot spare and as a parts depot. It was a rock solid plan that is humming away nicely to this day, one year later nearly to the day.

We have numerous clients running such refurbished servers today and they are extremely happy not only with the results, but also with the money they saved.

Move to Windows Server 2012 R2 Unless You Have Specific Reasons You Can't

I've talked about this notion so many times before, it feels like I'm beating a dead horse. But it's an important part of planning for any new server that will be in production for the next 5-7 or so years for your organization, so it's not something that should be swept under the rug.

There is absolutely zero reason you should be installing servers running on Windows Server 2008 R2 these days. That is, unless you have a special software or technical reason to be doing so. But aside from that, there are no advantages to running Windows Server 2008 R2 on new servers going forward. We've put Windows Server 2012 R2 and Windows Server 2008 R2 through extensive paces already in live client environments and the former is leagues ahead of the latter as far as stability, performance, resource usage, and numerous other areas, especially related to Hyper-V, clustering, and related functions.

Need further reason to stay away from Windows Server 2008? Seeing as we are already halfway through 2014, you would be doing yourself a disservice since Microsoft is cutting support for all flavors of 2008 by January of 2020. That's a mere sliver of just over five years away -- too close for comfort for any server going into production today.

Server 2012 R2 is getting support through January of 2023 which is much more workable in terms of giving us wiggle room if we need to go over a five year deployment on this next go around, with room to spare.
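
The lifecycle math is easy to sanity check. Below is a small Python sketch that compares a planned service life against the end-of-support months quoted above. The day of the month (the 1st) and the September 2014 deployment date are placeholder assumptions, so check Microsoft's lifecycle pages for the exact dates before relying on it.

```python
from datetime import date

# End-of-support months as quoted in this article. The day of the month
# is a placeholder assumption, not an official date.
END_OF_SUPPORT = {
    "Windows Server 2008 R2": date(2020, 1, 1),
    "Windows Server 2012 R2": date(2023, 1, 1),
}


def supported_years(os_name, deploy_date):
    """Years of vendor support left if the server goes live on deploy_date."""
    days = (END_OF_SUPPORT[os_name] - deploy_date).days
    return days / 365.25


def covers_service_life(os_name, deploy_date, planned_years):
    """True if support outlasts the planned time in production."""
    return supported_years(os_name, deploy_date) >= planned_years


deploy = date(2014, 9, 1)  # hypothetical go-live date
for name in END_OF_SUPPORT:
    years = supported_years(name, deploy)
    ok = covers_service_life(name, deploy, planned_years=7)
    print(f"{name}: {years:.1f} years of support, covers a 7-year run: {ok}")
```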

At FireLogic, any new server going up is Windows Server 2012 R2 by default, and we will be anxiously waiting to see what is around the corner as Windows Server releases seem to have a track record lately of only getting markedly better.

A quick note on licensing for Windows Server 2012 R2: do know that you can run three full instances of Windows Server 2012 R2 on any Standard copy of the product. This includes one physical (host) instance for the bare metal server itself, and two fully licensed VMs at no charge via Hyper-V off the same box.

It's an awesome fringe benefit and we take advantage of it often to spin up VMs for things like RDS (Remote Desktop Services). You can read about the benefits of Server 2012 licensing in a great post by Microsoft MVP Aidan Finn.
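
As a rough planning aid, the two-VMs-per-Standard-copy rule can be turned into a quick estimate. This Python sketch assumes that additional Standard copies can be stacked on the same host, each adding two more VMs, and it ignores per-processor terms entirely. Treat both as assumptions to verify against the actual license terms.

```python
import math

VMS_PER_STANDARD_COPY = 2  # per the licensing note above


def standard_copies_needed(vm_count):
    """Estimate Standard copies for one host running vm_count Windows VMs.

    Assumes copies can be stacked on a host and ignores processor counts.
    """
    if vm_count < 0:
        raise ValueError("vm_count cannot be negative")
    # The host itself always needs at least one copy.
    return max(1, math.ceil(vm_count / VMS_PER_STANDARD_COPY))


for vms in (0, 2, 3, 5):
    print(f"{vms} VMs -> {standard_copies_needed(vms)} Standard copies")
```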

Ditch the SANs: Storage Spaces Is a Workable, Cheaper Alternative

Like clockwork, most organizations with large storage needs, or with intent to do things like clustering, are listening to the vendors who are beating the SAN (Storage Area Network) drum near incessantly. If the clock were turned back just four to five years, I could see the justification in doing so. But it's 2014, and Microsoft now lets you roll your own SAN in Server 2012 and up with a feature I've blogged about before, Windows Storage Spaces.

I recently heard a stat from an industry storage expert that roughly 50 percent or more of SANs on the market run Windows behind the scenes anyway, so what's so special about their fancy hardware that justifies the high price tags? I'm having a hard time seeing the benefits, and as such, am not looking at SANs for clients as first-line recommendations. Unless there's a good reason Storage Spaces can't do it, we're not buying the SAN line any longer going forward.

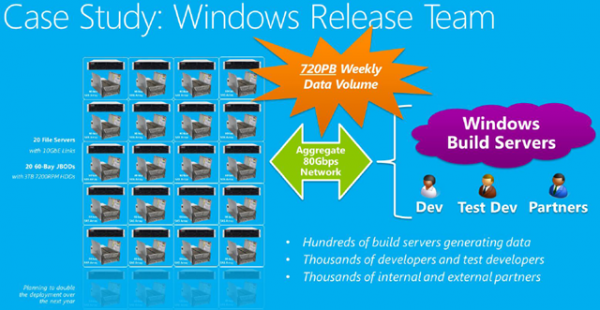

Think Storage Spaces isn't capable of large production workloads yet? The Windows Release team replaced eight full racks of SANs with cheaper, plentiful DAS attached to Windows Server 2012 R2 boxes, using this production network to pass upwards of 720PB of data on a weekly basis. They cut their cost/TB by 33 percent, and ended up tripling their previous storage capacity. While far larger in scale than anything small-midsize businesses would be doing, this just shows how scalable and cost effective Storage Spaces actually is. (Image Source: Aidan Finn)

The premise is very simple. Tie sets of roll-your-own JBOD DAS (direct attached storage -- SATA/SAS) drives in standard servers running Server 2012 R2 into storage pools, which can then be carved into Storage Spaces. These are nothing more than fancy replicated, fault tolerant sets of DAS drives that can scale out storage space without sacrificing the performance or reliability of traditional SANs.

Coupled with Microsoft's new age file system, ReFS, Storage Spaces are highly reliable, highly scalable, and future proofed since Microsoft is supporting the technology for the long haul from everything I am reading.

While Storage Spaces aren't bootable volumes yet, this will change with time, probably rendering the need for RAID cards also a moot point by then, as I questioned in a previous in-depth article on Storage Spaces.

You can read about Microsoft's own internal cost savings and tribulations in a post-SAN world for their Windows Release team, which has far greater data storage needs than any business I consult with.

Clean Out AD/DNS For All References to Dead Domain Controllers

This nasty thorn of an issue was something I had to rectify on a client server replacement just this week. When domain controllers die, they may go to server heaven, but their remnants are alive and well, causing havoc within Active Directory and DNS. It's important to ensure these dead phalanges are cleansed before introducing a new Windows Server 2012 R2 server into the fold, as you will have an uphill battle otherwise.

In a current Windows Server 2003 environment, you can easily find your complete list of active and dead domain controllers via some simple commands or GUI-based clicks. Match this list with what you actually still have running, and if there are discrepancies, it's time to investigate if any of the dead boxes were handling any of your FSMO (Flexible Single Master Operation) roles. A simple way to view what boxes are in control of FSMO in your domain can be found in this article.

While a potentially dangerous operation, if any dead boxes are shown as controlling any FSMO roles, you need to go through and seize those roles back onto an active AD controller (NOT on a potential new Windows Server 2012 R2 box). The steps to handle this are outlined here.
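
The matching exercise described above boils down to a set comparison. Here is a minimal Python sketch of it. The server names and role holders are made-up sample data that you would replace with what your own domain reports, and the script only tells you which roles need attention. It does not touch AD.

```python
FSMO_ROLES = (
    "Schema Master",
    "Domain Naming Master",
    "PDC Emulator",
    "RID Master",
    "Infrastructure Master",
)


def find_dead_dcs(listed_in_ad, actually_running):
    """Domain controllers AD still lists that are no longer running."""
    running = {name.upper() for name in actually_running}
    return sorted(name.upper() for name in listed_in_ad if name.upper() not in running)


def roles_to_seize(role_holders, dead_dcs):
    """FSMO roles whose current holder is a dead domain controller."""
    dead = {name.upper() for name in dead_dcs}
    return {role: holder for role, holder in role_holders.items() if holder.upper() in dead}


# Hypothetical sample data for illustration only.
listed = ["DC01", "DC02", "OLDDC"]
running = ["DC01", "DC02"]
holders = {
    "Schema Master": "DC01",
    "Domain Naming Master": "DC01",
    "PDC Emulator": "OLDDC",
    "RID Master": "OLDDC",
    "Infrastructure Master": "DC01",
}

dead = find_dead_dcs(listed, running)
print("Dead DCs still in AD:", dead)
print("Roles to seize onto a healthy DC:", roles_to_seize(holders, dead))
```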

In most small/midsize organizations we support, the FSMO roles are held by a single server, and we can easily transition these over to new Windows Server 2012 R2 instances after the Windows Server 2012 R2 box is promoted as a domain controller.

Be sure that you also do a metadata cleanup of AD for all references of the old dead DCs, and finally, clean out your DNS manually for any references leftover as well -- this includes fine tooth combing ALL forward and reverse lookup zones for leftover records. Even a single remaining entry to a dead box could cause messes you want to avoid down the road.
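
If you can export your zone records to a list, the fine tooth combing can be partly automated. The Python sketch below flags any record that mentions a dead DC by host name or IP. The record layout (zone, name, type, data) and all of the sample values are assumptions for illustration, not the format of any particular export tool.

```python
def _short(host):
    """Lowercased first label of a host name, without a trailing dot."""
    return host.lower().rstrip(".").split(".")[0]


def stale_records(records, dead_names, dead_ips):
    """Return records that reference a dead DC by name or by IP address.

    records: iterable of (zone, name, rtype, data) tuples.
    """
    names = {_short(n) for n in dead_names}
    ips = set(dead_ips)
    hits = []
    for zone, name, rtype, data in records:
        tokens = data.split()  # SRV data carries priority/weight/port first
        if _short(name) in names or any(_short(t) in names or t in ips for t in tokens):
            hits.append((zone, name, rtype, data))
    return hits


# Hypothetical sample export for illustration only.
sample = [
    ("corp.example.com", "dc01", "A", "10.0.0.10"),
    ("corp.example.com", "olddc", "A", "10.0.0.5"),
    ("corp.example.com", "@", "NS", "olddc.corp.example.com."),
    ("corp.example.com", "_ldap._tcp", "SRV", "0 100 389 olddc.corp.example.com."),
    ("0.0.10.in-addr.arpa", "5", "PTR", "olddc.corp.example.com."),
    ("0.0.10.in-addr.arpa", "10", "PTR", "dc01.corp.example.com."),
]

for record in stale_records(sample, dead_names=["OLDDC"], dead_ips=["10.0.0.5"]):
    print("Stale:", record)
```

A script like this only narrows the search. Deleting the records is still a manual, eyes-on job.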

Once you have a fully clean FSMO role structure sitting on a healthy DC, you can initiate proper formal role transfer over to the Windows Server 2012 R2 box after you have promoted the system properly through Server Manager. Canadian IT Pro blog has an excellent illustrated guide on handling this.

Remember: your network is only as strong as its lowest common denominator, and that is the core of AD and DNS in most respects. A clean AD is a happy AD.

Getting "Access is Denied" Errors on New Windows Server 2012 R2 DC Promotion? Quick Fix

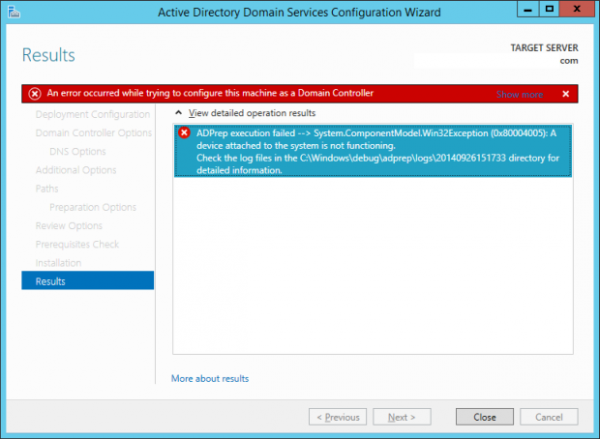

I lost hours on a recent migration to a Windows Server 2012 R2 server from a Windows Server 2003 R2 box due to this Access is Denied error. After cleaning out a few things which I thought may have been causing Server Manager's integrated ADPREP on 2012 R2 to bomb, I finally tracked down the real cause.

The fix? The Windows Server 2003 R2 server had a registry key that did not grant proper permissions to the LOCAL SERVICE account on that box. Prior to the adjustment, the registry key in question only gave read/write access to the domain and enterprise admins, which was not enough for what ADPREP wants to see in a full domain controller promotion of a Windows Server 2012 R2 box.

The full fix is described in this blog post, and don't mind the references to Windows client systems -- the information is accurate and fully applies to Windows Server 2003 and likely Windows Server 2008 as well, depending on what your old server runs.

Other problems that could lead to this nasty error include having multiple IP addresses assigned to a single NIC on a domain controller (not kosher in general); using an account that is not an Enterprise Admin or Schema Admin to perform the promotion; and having the new Windows Server 2012 R2 server pointing its DNS requests to something other than a primary Windows DNS server, likely your old DC itself.

Follow Best Practices When Configuring 2012 R2 for DNS

DNS by and far is one of the most misconfigured, maligned, and misunderstood entities that make up a Windows network. If I got a dime for every time I had to clean up DNS in a customer network due to misconfiguration... you know the rest.

You can read my full in-depth post on how DNS should look inside a company domain, but here are the main points to take away:

- Your Windows Server 2012 R2 server should always be the primary DNS server. I am assuming you will be hosting DNS on your new Windows Server 2012 R2 domain controller, as is the case with most small-midsize organizations. If so, the primary DNS entry on both the server itself, as well as what is handed out over DHCP to clients, should be the actual internal IP of the server itself (or the NIC team you have set up for it).

- Consider adding the IP of your firewall as a secondary internal DNS server. This goes more so for the DHCP settings being broadcasted to clients, but consider this: if your DNS server is undergoing maintenance or is rebooting, how do clients resolve internet addresses during this time? To avoid the helpdesk calls, I usually set secondary DNS to point to the Meraki firewall we deployed so it can handle backup DNS resolution. This works extremely well in periods of server downtime.

- Never use public DNS servers in client DHCP settings or on the IP settings of Windows Server 2012 R2. This is a BIG no-no when it comes to Windows networks, and I see it all too often on misconfigured networks. The only place public DNS servers should be used (Google DNS, OpenDNS, etc) is on the DNS Forwarders page of the DNS administration area on Windows Server 2012 R2. This ensures the internal network is not trying to do first-level resolution externally, which causes numerous headaches in the way AD wants to work. I discuss how to set this up in my aforementioned DNS Best Practices article.

- Don't disable IPv6 in nearly all cases. Contrary to the notion of "less is more" in the world of servers, IPv6, while still not widely used, is being baked in as a dependency on many core Windows Server functions. Unless you specifically have tested to ensure disabling IPv6 doesn't cause any issues, I would almost always recommend leaving it turned on. It doesn't harm anything, and Microsoft even publicly advises against turning it off. As it states in its blog post, Microsoft does not do any internal testing on Windows Server scenarios with IPv6 turned off. You've been warned!

Take some time to ensure the above best practices are followed when setting up your Windows Server 2012 R2 DNS, because an improperly configured network will cause you endless headache. Trust me -- I've been knee deep in numerous server cleanups in the last few years where DNS was the cause of dozens of hours of troubleshooting down the drain.
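
The first three rules above are mechanical enough to check with a script. Below is a minimal Python sketch that uses the standard `ipaddress` module to flag public resolvers and a wrong primary DNS entry. The function name, its parameters, and the sample addresses are all hypothetical, and it checks lists you type in rather than reading live settings.

```python
import ipaddress


def check_dns_settings(server_ip, nic_dns, dhcp_dns):
    """Return a list of problems found in the given DNS server lists.

    nic_dns:  DNS servers set on the domain controller's own NIC.
    dhcp_dns: DNS servers handed out to clients over DHCP.
    """
    problems = []
    for label, servers in (("server NIC", nic_dns), ("DHCP scope", dhcp_dns)):
        # Rule 1: the internal DNS server must be listed first.
        if not servers or servers[0] != server_ip:
            problems.append(f"{label}: primary DNS should be {server_ip}")
        # Rule 3: public resolvers belong on the Forwarders page only.
        for addr in servers:
            if not ipaddress.ip_address(addr).is_private:
                problems.append(f"{label}: public DNS server {addr} found")
    return problems


# Hypothetical network: DC at 192.168.1.10, firewall at 192.168.1.1.
good = check_dns_settings("192.168.1.10", ["192.168.1.10"], ["192.168.1.10", "192.168.1.1"])
bad = check_dns_settings("192.168.1.10", ["192.168.1.10"], ["8.8.8.8", "192.168.1.10"])
print("Clean config problems:", good)
print("Bad config problems:", bad)
```

Using the firewall as a secondary passes the check because it sits on an internal address, which matches the second rule above.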

Use the Chance to Implement Resiliency Best Practices: Dual NICs, PSUs, RAID, etc

A server update is the perfect chance to implement the kinds of things I wrote about in a piece earlier last week outlining what good backbone resiliency looks like on critical servers and network components. If you are purchasing new equipment outright, there is no reason at all you shouldn't be spec'ing out your system(s) with at least the following criticals:

- Dual NICs or better: Windows Server 2012 R2 has NIC teaming functionality baked into the core OS -- no fancy drivers needed. It's easy to setup, works well, and protects you in the face of cable/port/NIC/switch failure during heavy workload periods.

- Dual PSUs or better: The lowest end Dell server we will actively recommend to customers is the Dell PowerEdge T420 when buying new. This awesome tower/rack server has the ability to have dual power supplies loaded in which can be hot swapped and removed on demand in case of failure. Zero downtime needed. Can't spend the money on a hot-swap PSU server? Buy a spare PSU to have on hand, even if you have warranty coverage.

- RAID-1 across (2) SAS or SSD Drives for Windows: Since Storage Spaces cannot be made bootable yet, we are stuck with using tried and true RAID for the core Windows installation on a server. Our go-to option is a pair of high-end SSDs or dual SAS drives paired in a RAID1 on a decent controller.

- RAID-1 or Storage Spaces for data volumes: You have slightly more flexibility with data volumes since they don't need to be bootable to the native bare metal server. Our new up and coming favorite is Storage Spaces due to its cost effectiveness and flexibility, but if you have a RAID controller already due to needing it for your Windows install, then using it to build a 1-2 volume array for storage works well, too. We like RAID-1 sets due to their simplicity and ease of data recovery in the face of failure, but RAID 10 is an option if you want something a little more fancy and can spare the extra couple hundred bucks.

- Dual WAN connectivity: For more and more businesses, having outside connectivity to the main office, or similarly, up to cloud services, is getting increasingly common and important. While Cisco ASAs or Sonicwalls used to be go-to options for many IT pros, these days we are finding huge success by choosing to go with Meraki firewalls and access points. Not only do they have dual WAN connectivity across their firewall line, but the boxes are fully cloud managed and receive firmware updates every few weeks automatically. Can't say enough good things about these excellent units.

Most of the items on the above list aren't going to increase costs that much more. For the amount of productivity loss and headache that not having them will cost otherwise, the expense up front is well worth it in my eyes and that of my customers.
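
When weighing RAID-1 against RAID 10 for data volumes, the usable space is simple to work out: mirroring always costs you half of the raw capacity. A small Python sketch, assuming identical drives and the standard definitions of the two RAID levels (the function name is made up for illustration):

```python
def usable_gb(level, drive_count, drive_gb):
    """Usable capacity in GB for a mirrored array of identical drives."""
    if level == "RAID1":
        if drive_count != 2:
            raise ValueError("this sketch treats RAID1 as a two-drive mirror")
        return drive_gb
    if level == "RAID10":
        if drive_count < 4 or drive_count % 2:
            raise ValueError("RAID10 needs an even number of drives, four or more")
        # Striped mirrors: half of the drives hold copies.
        return drive_count // 2 * drive_gb
    raise ValueError(f"unsupported level: {level}")


# Two 600GB SAS drives in a mirror versus four in a RAID 10.
print(usable_gb("RAID1", 2, 600))   # 600
print(usable_gb("RAID10", 4, 600))  # 1200
```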

Promote, Test, Demote, Raise: 4 Keys to a Successful Domain Controller Replacement

PTDR is a goofy acronym, but it represents the four key items that we usually follow when implementing new domain controllers into an environment, and wiping away the vestiges of the legacy domain levels. These steps ensure that you aren't removing any old servers before the functionality of the new replacements is fully tested.

It goes something like this:

- Promote your new Windows Server 2012 R2 DC. After joining your new Windows Server 2012 R2 server as a member server, it needs to be promoted properly to be a domain controller on the network. Ideally, this means having it take over a shared role for handling DNS as well (if it will be taking over fully), and slowly transferring things like file shares, shared printers, etc. Get the basics in place for what you want this box to handle so you can allow time for step two.

- Test the environment after promotion. Is everything working OK? Is the new server replicating AD information properly and handling DNS requests the way it should? Are there any other goblins coming out of the AD closet you need to tackle? Give yourself anywhere from a few days to a week of breathing room to let these nasties rise to the surface so they can be expunged properly before giving the new DC full control of the network.

- Demote the old Windows Server 2003 server fully after transferring FSMO/DNS roles off of it. After you have had a chance to test all functionality, and transfer core server roles from Windows Server 2003 to Windows Server 2012 R2, it's time to demote the old server. A great guide on how this works can be found here. Once it gets demoted, that's it -- take it off the network, unplug it, and archive the contents of its system state into something like a Shadowprotect or Acronis backup.

- Raise the functional levels of your forest and domain. Running a Windows Server 2012 R2 server on a network acting like it's a Windows Server 2003 one has few benefits. The former introduced numerous enhancements to AD and core infrastructure services within Windows Server, and none of those advantages can come to the surface until you raise your forest and domain functional levels. This is a one time change that cannot be undone, but if you have confirmed you have no other older servers running as DCs, you can safely perform these raises.
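
The whole point of PTDR is the ordering, so it can help to treat it as a checklist that refuses to skip ahead. Here is a minimal Python sketch of that idea. The class is purely illustrative, a way to track sign-offs in a runbook, and it performs no actual AD operations.

```python
STEPS = ("promote", "test", "demote", "raise")


class DcReplacement:
    """Tracks PTDR progress and rejects out-of-order steps."""

    def __init__(self):
        self.completed = []

    def next_step(self):
        """Name of the next allowed step, or None when all four are done."""
        if len(self.completed) < len(STEPS):
            return STEPS[len(self.completed)]
        return None

    def complete(self, step):
        expected = self.next_step()
        if step != expected:
            raise RuntimeError(f"cannot run {step!r} now; next step is {expected!r}")
        self.completed.append(step)
        return self


job = DcReplacement()
job.complete("promote").complete("test")
print("Next step:", job.next_step())

try:
    job.complete("raise")  # skipping the demotion is not allowed
except RuntimeError as err:
    print("Blocked:", err)
```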

Some of the enhancements made in the Windows Server 2012/2012 R2 functional levels are described in an excellent WindowsITPro post.

Virtualize Up Internally, or Better Yet, Virtualize Out via the Cloud

It's no secret that virtualizing is an easy way to reduce the need for physical hardware. We tend to prefer using Hyper-V from Microsoft, mostly in the form of the Hyper-V role that is baked into the full Windows Server 2012 R2 Standard edition. Although it is enabled from within a full Windows install, it is still a type 1 hypervisor under the hood, and it allows us to host VMs on domain controllers for companies that don't have money for extra separate servers and don't want to move VMs into the cloud.

The other option, which we haven't adopted with clients that widely yet, is the VMWare alternative called Hyper-V Server 2012 R2, which is a completely free type 1 hypervisor that lives natively on the raw bare metal itself. You heard right -- it's totally free, doesn't cost a dime, and is not feature crippled in any way. Do note that the sole purpose of this edition of Windows Server is to merely host Hyper-V virtual machines. It can't be used as a general-purpose server.

For many organizations, especially ones either looking to move from expensive VMWare rollouts, or considering going down that path, Hyper-V Server 2012 R2 is an awesome option. It receives full support from Microsoft in terms of Windows Updates (there are relatively few needed each month, but they do come out) and follows the same exact support lifecycle policy as Windows Server 2012 R2 Standard, which has a sunset of Jan 2023.

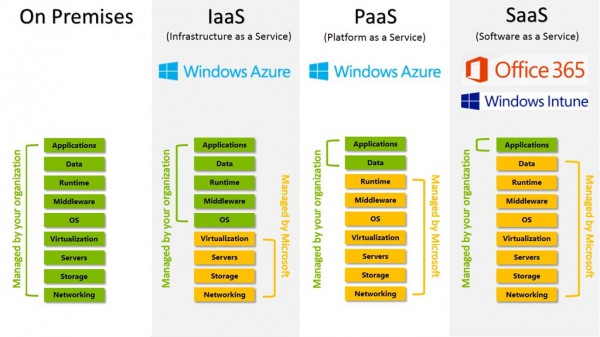

A lot of customers are curious as to what exact areas they can offload by using a cloud IaaS provider like Azure. This neat infographic explains it fairly well. Microsoft handles the networking backbone, storage arrays, servers themselves, virtualization hypervisor, and two items not shown -- the maintenance and geo-redundancy of the instances, too. Not as nice as what SaaS offers, but if you need your own full blown Windows Server instances, IaaS on Azure is as clean as it gets. (Image Source: TechNet Blogs)

While keeping VMs internally is a great first-party option, and getting more mature by the day, we are actively recommending to many clients that moving their VM needs off to services like Azure or Rackspace is a much better bet, especially for organizations with no formal IT staff and no MSP to fall back on. These platforms take care of the hardware maintenance, resiliency, geo-redundancy, and numerous other aspects that are tough to handle on our own with limited resources.

For example, we were at a turning point with a ticket broker who either needed to replace 4-6 standalone aging boxes with new ones, or opt to move them to the cloud as VMs. We ended up choosing to make the full move to Azure with the former boxes being converted into IaaS VMs. With the ability to create virtual networks that can be linked back to your office, we ended up tying those Azure machines back over a VPN being tunneled by a Meraki MX60 firewall. After stabilizing the VPN tunnel to Azure, the broker hasn't looked back.

When deciding on whether to go cloud or stay on premise, there are numerous decision points to consider. We help customers wade through these regularly. But you can follow the advice I penned in an article from about a year ago that went over the core criteria to use when outlining an intended path.

Evaluate What Internal Needs Can Be Offloaded to SaaS

Spinning up extra servers just to continue the age-old mess of "hosting your own" services internally, whether it's on-prem via Hyper-V or in the cloud on Azure IaaS, is just plain silly. SaaS offerings of every shape and size are trimming the number of items that are truly reliant on servers, meaning you should be doing your homework on what items could be offloaded into more cost-effective, less maintenance-hungry options.

For example, it's a no-brainer that Office 365, in the form of Exchange Online, is the best bet for hosting business email these days. Businesses used to host Exchange, their own spam filters, and archiving services -- all of which needed convoluted, expensive licensing to work and operate. Office 365 brings it all together under one easy to use SaaS service which is always running the latest and greatest software from Microsoft. I haven't been shy about how much my company loves Office 365, especially for email.

Another item that can likely be evaluated to replace traditional file shares is SharePoint Online. Our own company made the switch last year and we are nearing our year mark on the product soon. While we keep a small subset of files on-premise still (client PC backups; too large to keep in the cloud), the bulk of our day to day client-facing documentation and supporting files are all in SharePoint Online now, accessible from OneDrive for Business, the web browser, and numerous mobile devices. It's an awesome alternative to hosting file shares, if its limitations work with your business needs.

And for many companies, hosting their own phone server or PBX for VoIP needs has always been a necessity. But in this day and age, why continue to perpetuate this nightmare when offerings like CallTower Hosted Lync exist? This hybrid UCaaS (Unified Communications as a Service) solution and VoIP telephone offering from CallTower brings the best of a modern phone system along with the huge benefits of Lync together into a single package. We have been on this platform ourselves, along with a number of clients, for a few months now and cannot imagine going back to the status quo.

Still hosting your own PBX, plus paying for GoToMeeting/Webex, and maintaining PRIs or SIP trunks? CallTower has a UCaaS solution called Hosted Lync which integrates all of the above under a simple, cheap cloud-hosted PBX umbrella that runs over any standard WAN connection(s). I moved FireLogic onto this platform, and fully believe this is the future of UC as I can see it. (Image Source: CallTower)

Hosting your own servers has its benefits, but it also comes with its fair share of pains that can, as shown above, be avoided by using cost effective alternative SaaS offerings. We almost always offload capable needs to SaaS platforms where possible these days for customers as it continues to make business sense from a price, redundancy, and maintenance perspective.

Moving File Shares? Use the Chance to Clean House!

Most organizations don't have good formal policies for de-duplicating data, or at the least, ensuring that file shares are properly maintained and not flooded with needless data. If you haven't skimmed your file shares recently, a server move to Windows Server 2012 R2 is the perfect chance to evaluate what exists and what can go.

- Take inventory of current shares. As I mentioned earlier, if you don't know where you stand, you have no idea where you're heading. It's like one of those Google cars hitting snow or rain -- going somewhere, but not intelligently. Make a simple Excel sheet of all the shares, and a summary of who has rights to what.

- See what can be culled -- less is more in the world of shares. There's no reason a company of 30 people needs 100 file shares. Someone wasn't in the driver's seat when it comes to saying no. Regardless of where you are, see what can be logically cut, and better yet, consolidated into other shares. Get rid of duplicated data, and use the chance to clean up internal policies on what gets stored where and for how long.

- Move the shares to RAID-backed or Storage Spaces Windows Server 2012 R2 volume(s). File shares running off single non-redundant hard drives are a recipe for failure. Use this chance to not only clean up old data, but also place the cleansed data onto proper redundant, tested volumes that can handle the failure of any single given drive. Using Windows 7 or Windows 8 workstations as file share hosts is not best practice, either, no matter how cheap and friendly such an option may look.

In some cases, we won't let clients move data onto clean volumes of a new Windows Server 2012 R2 server until we have been able to sit down with them and verify that old garbage data has been culled and cleaned out. Garbage in, garbage out. Don't perpetuate a bad situation any longer than needed. A server upgrade is ripe timing to force action here.
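
Hunting for duplicated data by hand is painful, so a small script can do the first pass. The Python sketch below walks a folder tree and groups files with identical contents by their SHA-256 hash. It is one simple approach rather than a full de-duplication tool, and it only reports. Deciding what to delete stays with the client.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=1024 * 1024):
    """SHA-256 of a file, read in chunks so large files don't fill RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return groups of paths under root whose contents are identical."""
    by_hash = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            by_hash[file_digest(path)].append(path)
    return [sorted(paths) for paths in by_hash.values() if len(paths) > 1]
```

Point `find_duplicates` at the root of a share (a mapped drive or UNC path you have read access to) and review each group it returns with the share's owner before anything gets culled.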

Is a Server Migration Above Your Head? Hire an Expert

Moving between servers is something warranted once every 5-7 years for most companies, and especially for smaller entities without IT staff, handling such a move first party is rarely a good idea. Companies like mine are handling these situations at least once a month now. We've (almost) seen it all, been there, and done that -- not to brag, but to say that professionals usually know what they are doing since we are doing this week in, week out.

The number of times we've been called in for an SOS on server migrations or email migrations gone south is staggering. And by the time we get the call, it's usually too late to reverse course and start from scratch the right way. Customers hate emergency labor fees, but honestly, the best way to avoid them is to avoid the mess in the first place!

Not only can a professional help with the actual technical aspects of a Windows Server 2003 to Windows Server 2012 R2 server move, but also help evaluate potential options for slimming down internal needs and offloading as much as possible to SaaS or IaaS in the cloud. Increasingly, it is also important that organizations under PCI and HIPAA compliance umbrellas consider the finer aspects of end-to-end security such as encryption at-rest, in-transit, and other related areas. A professional can help piece such intricate needs into a proven, workable solution.

With Windows Server 2003 being deprecated in under 300 days at this point, if you haven't started thinking about moving off that old server yet, the time to start is right about now. Seeing as organizations such as healthcare offices and those that handle credit card payments have much to lose with failing HIPAA or PCI compliance, for example, there's no reason to procrastinate any longer.

Have any other best practices to share when moving off Server 2003? Share them below!

Photo Credit: dotshock/Shutterstock

Derrick Wlodarz is an IT Specialist who owns Park Ridge, IL (USA) based technology consulting & service company FireLogic, with over eight years of IT experience in the private and public sectors. He holds numerous technical credentials from Microsoft, Google, and CompTIA and specializes in consulting customers on growing hot technologies such as Office 365, Google Apps, and cloud-hosted VoIP, among others. Derrick is an active member of CompTIA's Subject Matter Expert Technical Advisory Council that shapes the future of CompTIA exams across the world. You can reach him at derrick at wlodarz dot net.
