Microsoft releases open source CentOS-based 'Linux Data Science Virtual Machine' for Azure

Microsoft is both an open source and Linux champion nowadays -- on the surface at least (pun intended). In other words, while it does embrace those things, we may not know the motivation of the Windows-maker regarding them. Regardless, Linux and open source are now important to the Redmond company.

Today, Microsoft announces a CentOS-based VM image for Azure called 'Linux Data Science Virtual Machine'. The VM has pre-installed tools such as Anaconda Python Distribution, Computational Network Toolkit, and Microsoft R Open. It focuses on machine learning and analytics, making it a great choice for data scientists.

Crowdsourcing platform creates insights from unstructured data

Getting useful information from unstructured data is a notoriously difficult and time-consuming task, but the launch of a new intelligent crowdsourcing platform could be about to change that.

The Spare5 platform uses a known community of specialists to accomplish custom micro-tasks that, filtered for quality, allow product owners to train powerful artificial intelligence models, improve their search and browse experiences, augment their directories and more.

New platform speeds up development of intelligent search apps

One of the problems with big data is that creating applications to access the information inevitably introduces a time lag, and this leads to frustration for the end user.

Search and analytics software company Lucidworks is aiming to cut out this bottleneck with the launch of Lucidworks View, which allows companies to quickly and easily create custom search-driven applications built on Apache Solr and Apache Spark.

Open source database targets the big data analytics market

Leader in open source databases MariaDB is announcing the release of its new big data analytics engine, MariaDB ColumnStore.

It unifies transactional and massively parallelized analytic workloads on the same platform. This is made possible by MariaDB's extensible architecture, which allows the simultaneous use of purpose-built storage engines for maximum performance, simplification, and cost savings. This approach sets it apart from competitors like Oracle, and removes the need to buy and deploy traditional columnar database appliances.

Use of next generation databases hindered by backup technology

Businesses are showing increased interest in developing their infrastructure to support distributed, scale-out databases and cloud databases, but a lack of robust backup and recovery technologies is hindering adoption.

Backup and recovery is cited by 61 percent of enterprise IT and database professionals as preventing adoption. However, 80 percent believe that deployment of next-generation databases will grow by two times or more by 2018.

Data scientists spend lots of time doing stuff they don't enjoy, but they still love their jobs

Data scientists spend a lot of time doing things they don't like, such as sorting out problems with unprocessed information, but they still love their jobs, according to a new survey.

The second annual Data Science report from data enrichment platform CrowdFlower shows that there’s a perceived shortage of data scientists, with 83 percent saying there aren’t enough to go around, up from 79 percent last year.

New platform aims to break down barriers to big data adoption

Big data deployments are increasingly shifting from lab settings to full production environments. But there are a number of security and QoS (quality of service) challenges that can slow this process.

Big data company BlueData is launching the latest release of its EPIC software platform, introducing several security and other upgrades to provide a smoother Big-Data-as-a-Service experience as well as support for new applications and frameworks.

Kyvos brings big data to Microsoft Azure

Many organizations are looking at the benefits they can gain from big data but are put off by the infrastructure costs involved.

Analytics company Kyvos Insights is aiming to make big data more accessible by making its scalable, self-service online analytical processing (OLAP) solution available to users of Microsoft Azure HDInsight.

What you should expect from big data in 2016

Big data has truly progressed from being just a buzzword to being an essential component of many companies' IT infrastructure and business plans. How we store, analyze, and process big data is changing the way we do business, and the industry is in the midst of the biggest transformation in enterprise computing in years.

Organizations can now look for patterns that are indicative of current or even future behavior. And the acceleration in big data deployments is helping to identify where we can expect the really big advances to be made in the near future.

The most disruptive business technologies

You'll quite often hear talk of how technology can disrupt business. A survey carried out at Microsoft's 2015 Global CIO Summit in October suggests that CIOs believe 47 percent of their company's revenues will be under threat from digital disruption in the next five years.

But what does this disruption really mean? Microsoft has produced an infographic looking at the five major technologies that are doing most to disrupt the business world.

New cloud tool links big data to business intelligence

Big data can provide a useful source of insights for business analysis. But providing access to it can mean significant IT effort and the use of expensive, off-the-shelf solutions.

Altiscale, the big data as a service specialist, is launching a new Insight Cloud self-service analytics solution to provide a bridge between big data and business users.

New platform improves security, governance and performance for big data

Organizations are keen to exploit the power of big data, and containerized applications using Docker allow them to do this in a reliable way.

Converged data platform specialist MapR Technologies is launching its latest version which provides a comprehensive data services layer for Docker, offering enhancements to security, data governance and performance.

Big Brother's crystal ball: China developing software to monitor citizens and predict terrorist activity

We've become used to the idea of online surveillance thanks to Edward Snowden blowing the lid off the activities of the NSA and GCHQ. While it's easy and natural to bemoan the infringement of privacy such surveillance entails, no one ensures as limited and controlled an internet as the Chinese.

There's the famous Great Firewall of China for starters, and as part of a counter-terrorism program the country also passed a law requiring tech companies to provide access to encryption keys. Now the Communist Party has ordered one of its defense contractors to develop software that uses big data to predict terrorist activity.

Documents reveal details of EU-US Privacy Shield data sharing deal

Details of the data sharing arrangements agreed between the US and EU earlier in the month have been revealed in newly published documents. The EU-US Privacy Shield transatlantic data transfer agreement is set to replace the Safe Harbor that had previously been in place.

The European Commission has released the full legal texts that will form the backbone of the data transfer framework. One of the aims is to "restore trust in transatlantic data flows since the 2013 surveillance revelations", and while privacy groups still take issue with the mechanism that will be in place, the agreement is widely expected to be ratified by members of the EU.

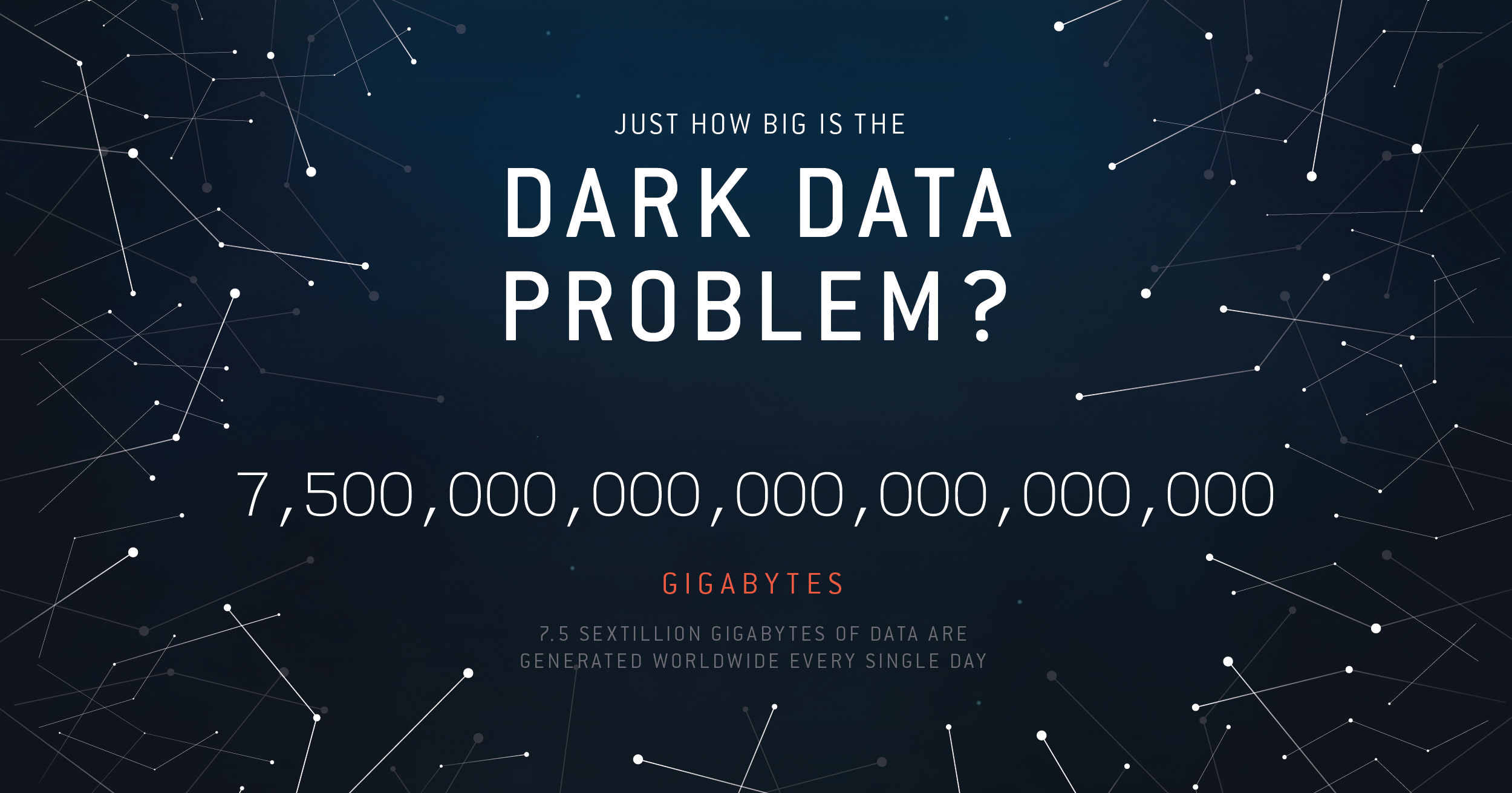

Dark data and why you should worry about it

How much of your company's data do you actually use? According to search technology specialist Lucidworks, businesses typically only analyze around 10 percent of the data they collect.

The rest becomes what the company calls 'dark data' -- information that lurks unused. Much of this data is unstructured and doesn't fit into any convenient database format. This means that companies don't have the tools to make sense of it or simply find they have too much to handle.