Chrome extension Who Targets Me? reveals how Facebook is used for election propaganda

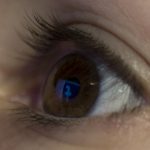

Social media is powerful, so it's really little wonder that the likes of Facebook are used for propaganda. We already know that advertising can be very carefully targeted for maximum impact, and this can prove important when it comes to getting across a political message.

With the UK on the verge of an early general election -- one that will be fought with Brexit and Scottish Independence looming large -- political campaigns are getting underway, including on Facebook. To help educate voters about how they are being besieged by political parties, a free Chrome extension called Who Targets Me? has been launched. It reveals just how personal information made available on the social network is used.

Facebook Messenger rolls out Instant Games globally, with turn-based gameplay and bots

Facebook has been introducing games to its Messenger chat app for a while. It started with simple hidden gems like Chess, Basketball, and Soccer, and then added arcade games to the mix.

Today, the social networking giant begins to roll out Instant Games on Messenger globally, and for all users on both iOS and Android. But that’s not all.

Facebook denies allowing advertisers to target people based on their emotional state

A leaked internal document shows that Facebook is capable of identifying people according to their emotional state. The document, seen by The Australian, shows how the social network can monitor users' posts and determine when they are feeling "stressed, defeated, overwhelmed, anxious, nervous, stupid, silly, useless, or a failure."

The leak pertains to Facebook's Australian office and suggests that algorithms can be used to detect "moments when young people need a confidence boost." It raises serious ethical questions about Facebook's capabilities, but the company denies it is doing anything wrong.

Facebook updates Rights Manager so content owners can earn ad income from pirated videos

Like Google, Facebook places great importance on advertising. The social network not only earns money from ads itself, but also allows companies and individuals to do so by displaying ads in videos. Pirates were quick to spot an easy way to earn money -- steal someone else's popular video and watch the ad revenue roll in.

Now Facebook is fighting back in a way that has already been used to some extent by YouTube. There is a new "claim ad earnings" option in the Rights Manager tool which enables the owner of a particular video to bag the ad revenue when their material is pirated. But the updates to Rights Manager are more far-reaching than this.

Report: Facebook really is used for propaganda and to influence elections

It's something that many people have expected for some time, and now we know that it's true. Facebook has admitted that governments around the world have used the social network to spread propaganda and try to influence the outcome of elections.

In the run-up to the US election, there was speculation that powerful groups had been making use of Facebook to influence voters by spreading fake news. Now, in a white paper, Facebook reveals that through the use of fake accounts, targeted data collection and false information, governments and organizations have indeed been using the social network to control the news, shape the political landscape, and create different narratives and outcomes.

New report shows the number of requests for user data Facebook receives from global governments

Today Facebook publishes its Global Government Requests Report, revealing just how many data requests the social network has received from governments around the world. This time around, the report covers the second half of 2016, and it shows a mixed bag of figures.

While the number of items that had to be restricted due to contravention of local laws dropped, the number of government data requests increased by 9 percent compared to the previous six months. Facebook is well aware that it faces scrutiny and criticism for its willingness to comply with data requests, and the company tries to allay fears by saying: "We do not provide governments with 'back doors' or direct access to people's information."

Facebook is testing pre-emptive related articles in News Feed

The "related articles" feature of Facebook's News Feed is nothing new -- in fact it has been with us for more than three years. But now the social network is trialling a new way of displaying related content; rather than waiting until you have clicked on a story to suggest related stories you might be interested in, Facebook will instead be offering these suggestions before you read an article.

As well as giving users the chance to read more about a topic from different sources, Facebook says that it will help people to discover articles which have been fact-checked. It is -- almost by accident, it seems -- another way for Facebook to tackle fake news.

Spotify bot for Facebook Messenger lets you share music and listen to mood-based playlists

At Facebook's F8 conference yesterday, much of the attention was focused on virtual reality, augmented reality -- anything that breaks out of vanilla reality. But there were other things of arguably greater interest, and for music fans there was news of the Spotify bot for Facebook Messenger.

Facebook has been throwing a lot at bots recently, and it's little surprise that big names like Spotify are getting in on the action. For the music streaming service, the bot serves a dual purpose: giving useful functionality to Messenger users, while simultaneously pushing people into taking up a subscription.

Facebook vows to do better after murder video

By now you have undoubtedly seen news coverage of the so-called "Facebook Murder." While the social network was not responsible for the killing, the suspect did upload a video of the murder to Facebook. The video upload aspect of the killing has led to reflections on society's reliance on social networks, but it is important to remember that there is a real victim here -- a 74-year-old grandfather named Robert Godwin. Justice for him, and his remembrance, are more important than blaming Facebook for not removing the video fast enough.

Of course, Facebook still has a responsibility to issue a statement on the situation, and today, Justin Osofsky, VP Global Operations, has done so. Osofsky explains that the murder "goes against our policies and everything we stand for."

Facebook responds to the Cleveland murder shared on the social network

Over the weekend, it was suggested that Steve Stephens used Facebook Live to livestream himself fatally shooting a man in his 70s. He went on to use the social network to admit to other murders, as well as saying that he wanted to "kill as many people as I can."

Despite rumors of a murder having been committed live on Facebook, the social network issued a statement clarifying that, while Stephens had broadcast on Facebook Live over the weekend, the footage had actually been uploaded rather than livestreamed. Whether broadcast live or not, the story -- once again -- brings into question Facebook's content vetting procedures.

Investigation finds Facebook mods fail to remove illegal content such as extremist material and child porn

That Facebook is fighting against a tide of objectionable and illegal content is well known. That the task of moderating such content is a difficult and unenviable one should come as news to no one. But an investigation by British newspaper The Times found that even when illegal content relating to terrorism and child pornography was reported directly to moderators, it was not removed.

More than this, the reporter involved in the investigation found that Facebook's algorithms actively promoted the groups that were sharing the illegal content. Among the content Facebook failed to remove were posts praising terrorist attacks and Islamic State, and others calling for more attacks to be carried out. Failure to remove illegal content once reported is, under British law, a crime in itself.

Snapchat reminds Scottish voters to register to vote

With council elections due to be held throughout Scotland next month, steps are being taken to ensure that as many people as possible are registered to vote. The Electoral Commission has turned to Snapchat to remind people to register ahead of the cut-off deadline next week.

Using social media tools as election reminders is not a new tactic -- Facebook has been used for some time -- but in using Snapchat, an entirely different section of voters is being targeted. This is the first time 16- and 17-year-olds will be eligible to vote in Scottish council elections, and Snapchat seems like a sensible way to connect with late millennials.

Facebook goes on the offensive against fake news and aims to educate users

Having introduced various tools to fight fake news, the next weapon in Facebook's arsenal is education. Over the next few days a large "Tips for spotting fake news" banner will appear at the top of news feeds in 14 countries, but the approach it is taking is unlikely to have much impact on those most influenced by, and most likely to share, fake news.

Like Google, Facebook is taking steps to tackle fake news. The social network has already announced a raft of measures aimed at stamping out the problem, but now it is trying not only to educate people about how to spot fake news, but also to stem its spread and to disincentivize the practice.

Facebook's AI assistant, M, now offers suggestions in Messenger

Promising to "make your Messenger experience more useful, seamless and delightful," Facebook has launched suggestions from M to everyone in the US. M is the social network's AI assistant, and iOS and Android users can now benefit from behavior-based suggestions for content and actions as the assistant analyses conversations.

What this means in practice is that M might notice that you are chatting with a friend about sending them some money for something. Rather than waiting until you meet them in person, M will spring into action and suggest that you might like to send the money through Messenger. Other possible suggestions relate to Uber and Lyft, stickers, polls and locations.

Microsoft, Google, Twitter and Facebook to work with UK government to tackle extremist content

Following UK home secretary Amber Rudd's suggestion that encrypted messaging service WhatsApp was 'a secret place to hide' for terrorists, four major technology companies met with the minister to discuss the removal of extremist material from websites and social media.

Microsoft, Google, Twitter and Facebook met with Rudd amid calls from civil liberties groups for greater transparency. Following the meeting, senior executives from each company signed a statement indicating that they are ready to work with the government to tackle the problem. The matter of backdoors into encrypted apps and services, however, remained off the table.

© 1998-2025 BetaNews, Inc. All Rights Reserved.