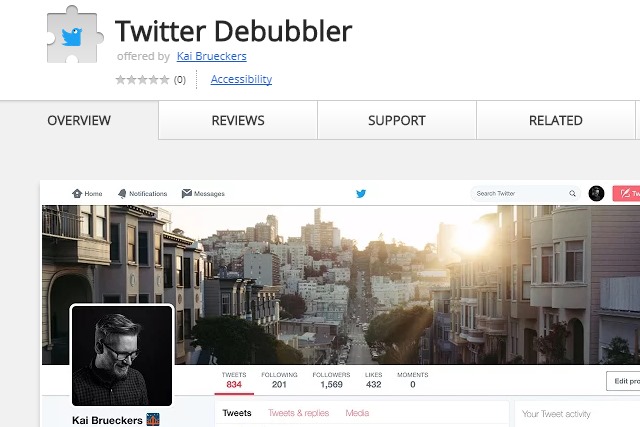

How to avoid Twitter's bubbly redesign

Many people are complaining that, with its latest redesign, Twitter has stepped back in time. If you are not a fan of the "circles and curves everywhere" look, you can -- with a little help -- avoid the redesign and stick with the way things were.

There's just one catch -- you have to be using Chrome. If this is already your web browser of choice, all you need to do is install an extension built specifically to "remove Twitter's 'bubbly' redesign."

Twitter's redesign basically comprises old-fashioned rounded buttons and new icons

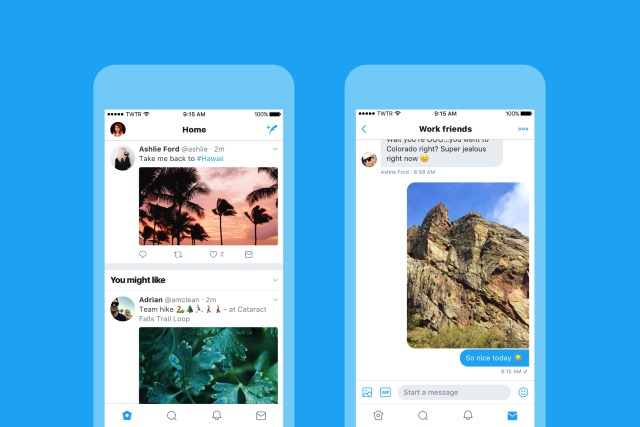

It has been a while since Twitter was treated to a new lick of paint, but that all changes today. A new look is rolling out, and "round" is the word that springs most readily to mind.

Think back to the look of the web around 15 years ago -- all rounded corners and the like -- and you're in the right ballpark. On the web, there are not only rounded buttons and round profile pictures, but also redesigned wireframe icons. Mobile users are also treated to a new look.

Happy birthday to the GIF... and welcome to Facebook comments!

Today marks the 30th anniversary of the GIF. The humble file format has -- after protracted arguments about how to pronounce the word -- come a long way. After years of irritating people in the form of flashing animated ads, the images are now used to adorn messages with pithy memes and pertinent video clips.

GIFs in their current incarnation are supported by messaging tools and social platforms left, right and center. Having already added a dedicated GIF button to Facebook Messenger, the social network is now rolling out the same feature in comments.

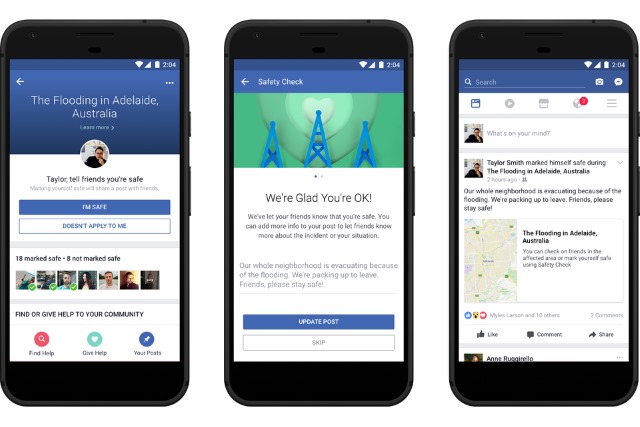

Facebook expands Safety Check with fundraising and more

Facebook's Safety Check feature has become one of the easiest ways of checking up on loved ones when disaster strikes. It also gives people in the affected area the opportunity to let friends and family know that they're OK, and today Facebook is adding a number of new options, including fundraising.

Starting off in the US, people will now be able to start a fundraiser from within Safety Check to help raise money for those in need. These can be for personal or charitable causes, and the feature makes it easy for others to make donations. There are also other changes that make Safety Check more useful.

Instagram rolls out archiving feature so you can hide unwanted photos without deleting them

The eyes may be a gateway to the soul, but the feeds of social media accounts can be even more revealing. Facebook, Instagram, Twitter and their ilk all give people the opportunity to not only share their lives with the world, but present a particular image of themselves. Of course, there are the occasional posts which, well, let the side down.

With this in mind, Instagram is rolling out a new archive feature that makes it possible to remove images from your feed without having to delete them. If you have any embarrassing pictures you'd rather didn’t taint your image, you can hide them without having to lose them completely.

Does Donald Trump tweet too much? America thinks so

Donald Trump may not be the first US president to take to Twitter, but he's certainly proved unique in the way he uses the social platform. Tweets have become his public mouthpiece, used to issue train-of-thought broadcasts, as well as plenty of oddities -- it's going to be some time before "covfefe" is forgotten.

But while many are pleased to see Trump issuing statements through an accessible medium, critics on both sides of the political spectrum have voiced concern about his outpourings. Importantly, the American public now believes that the president tweets too much.

Facebook launches a trio of features to help US constituents connect with elected officials

Donald Trump is just one example of politicians using social media to get their messages out. With President Trump, Twitter acts very much as a one-way means of communication -- Facebook wants to make the channels between elected officials and constituents a two-way street.

As such, the social network is launching three new features -- Constituent Badges, Constituent Insights and District Targeting -- to help users get in touch with their elected representatives. These are the latest attempts by Facebook to increase meaningful civic engagement through its service while fighting back against complaints about fake news.

Apple nixes Facebook and Twitter integration from iOS 11

With the arrival of any new operating system, the focus tends to be on what has been added and what has been improved. But it's also important to keep an eye on what has been removed, and this is true of Apple's newly announced iOS 11.

One of the things to have been removed from the upcoming version of Apple's mobile OS is social media integration. Specifically, Facebook, Twitter, Flickr and Vimeo have been dropped from Settings, meaning that these services will no longer be able to offer an easy way to sign into apps and services using social media accounts.

Facebook uses Pride Month to pat itself on the back over LGBTQ support

Social media platforms are, by their very nature, keen to be welcoming to as broad a spectrum of people as possible. Twitter, YouTube, and other services of their ilk like to be seen to be as inclusive as can be imagined, and Facebook is no different.

This month is Pride Month, and Facebook is not only joining in the celebrations, but also using it to indulge in a little self-celebration and self-congratulation. The social network is rolling out a rainbow frame, a Pride reaction and Pride-themed masks for photos, Pride stickers in Messenger and more, but the company is also falling over itself to prove how diverse not only its userbase but also its workforce is.

Tech companies retaliate against Theresa May's claim they offer a 'safe space' for extremists

Following the attacks on London over the weekend, Prime Minister Theresa May called for further regulation of the internet -- despite having already ushered in the Snooper's Charter, one of the most invasive pieces of online legislation in the world.

Speaking about the attacks, May said: "We cannot allow this ideology the safe space it needs to breed. Yet that is precisely what the internet, and the big companies that provide internet-based services, provide." Google, Facebook and Twitter have all lashed out, saying they already do a great deal to combat terrorist and extremist content on their networks.

Police ask people not to share London Bridge and Borough Market attack footage on social media

Last night saw two attacks in London that left seven people dead and dozens injured. A van driven at pedestrians on London Bridge and stabbings in Borough Market have been labelled as terrorist attacks, and Facebook Safety Check was activated for the incident.

The social network was not just used by people to let loved ones know that they were safe following the attacks, but also to share footage of the shocking aftermath. While police are keen for witnesses to come forward with footage they may have shot on mobile phones, they are pleading with people not to share videos on social media.

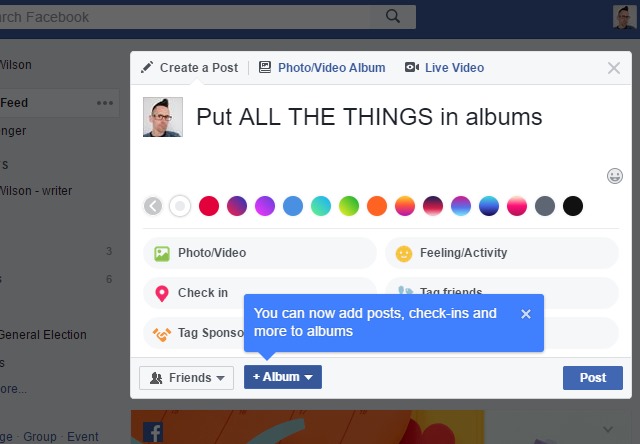

Not just for photos -- now you can add just about anything to Facebook albums

Albums on Facebook have, logically, been a handy way to store and organize photographs for a while now. But an update to the social network means that albums can now be used to group together posts, photos, check-ins and more.

In a fairly major overhaul of the system, Facebook is in the process of greatly expanding the capabilities of albums, making them far more versatile and useful. As well as increasing the range of content that can be added, Facebook is also surfacing collaborative albums, introducing featured albums, and more.

Facebook's solution to fake news: 'fight information with more information'

It may be Donald Trump who is obsessed with what he perceives as "fake news" (translation: anything which is not in line with his personal views), but there is a genuine problem with the dissemination of false information online, particularly on social media sites such as Facebook.

Just as it has voiced a commitment to tackling its well-known problems with trolling and abuse, Facebook has also made a great deal of noise about fighting fake news. Despite this, Facebook shareholders have rejected proposals that suggested the company should release a report into the impact of fake news. Mark Zuckerberg thinks he has a solution: "fight information with more information."

Trump administration approves visa questionnaire that asks for social media handles

The world may be focused on the US withdrawal from the Paris accord, but the Trump administration is causing plenty of ripples in other areas too. Not content with trying to push through travel bans, the US government is also tightening up on visa applications.

An updated version of the supplemental visa application questionnaire asks would-be visitors not only for details of their travel and address history, but also for the names they use on social media. Applicants are required to provide details dating back five years, but officials are not saying in what circumstances the extra questions will become necessary.

Facebook redesigns security settings page, making two-factor authentication easily identifiable

Realizing that its security settings page was a shambolic mess that put many people off, Facebook has rolled out a redesign which it says helps to improve clarity.

As well as giving greater prominence to the most important security settings, Facebook has renamed some options. This comes after the company conducted some research into why users were clicking certain options but not changing them -- it turns out they had no idea what the settings actually did.